Data Brokerage: Bridging the Gap Between Data Buyers and Sellers for Enhanced Intelligence

1. Platform Category: Data Exchange Service

2. Core Technology/Architecture: Large-scale Data Aggregation and Processing

3. Key Data Governance Feature: Data Privacy Compliance and Consent Management

4. Primary AI/ML Integration: Providing Data for AI/ML Model Training

5. Main Competitors/Alternatives: Other Data Brokerage Firms, Cloud Data Marketplaces

In an increasingly data-driven global economy, information has become one of the most valuable commodities. Data Brokerage is the specialized function that connects organizations holding large volumes of data with the organizations that need it. By facilitating the exchange of diverse datasets, it improves market efficiency and gives businesses access to insights they could not derive from their own data alone. World2Data.com examines this ecosystem in depth, analyzing how data brokers operate as pivotal intermediaries in the information supply chain.

Introduction to the Data Brokerage Ecosystem

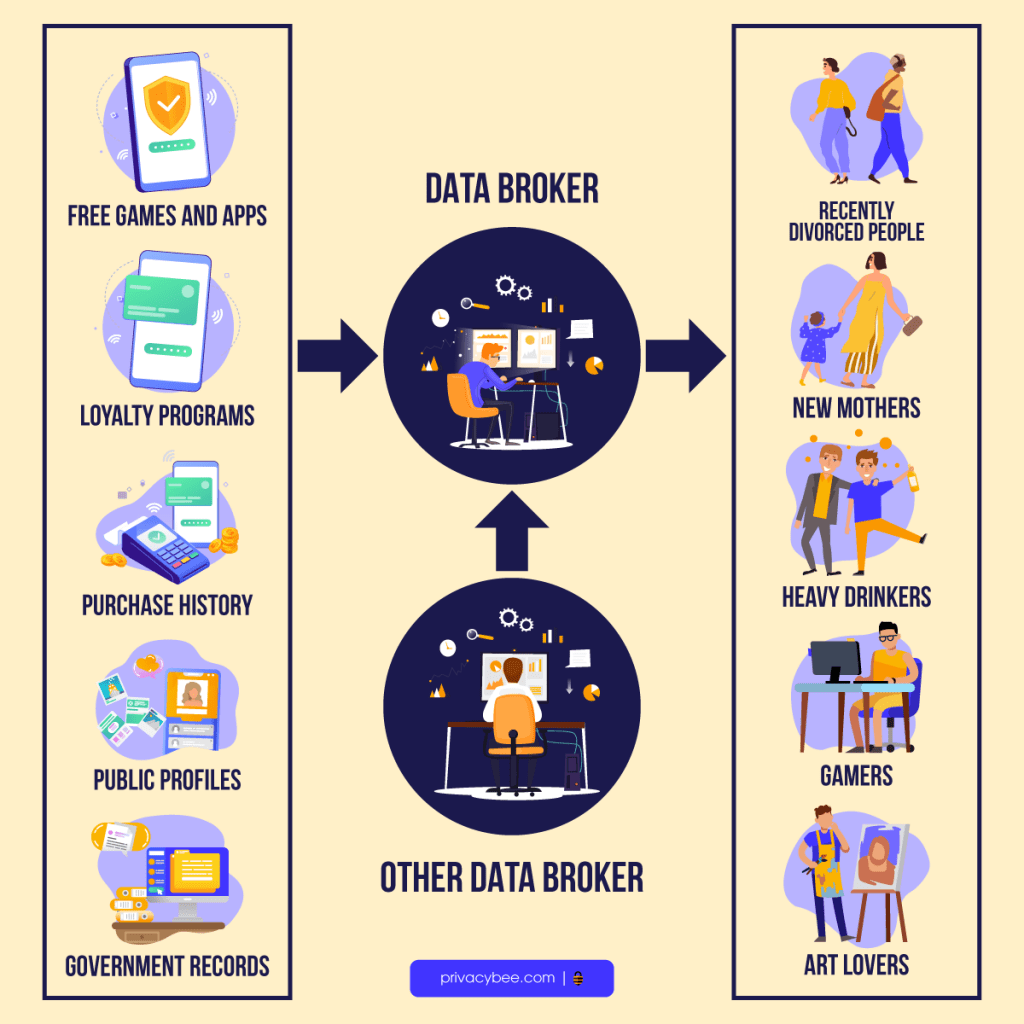

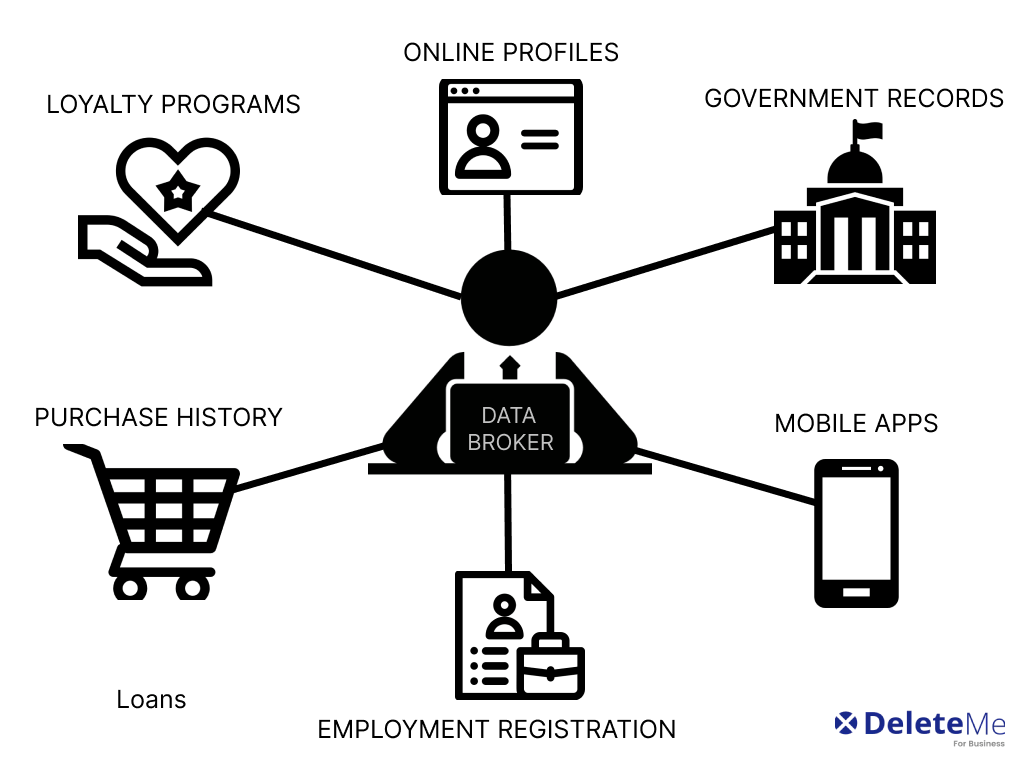

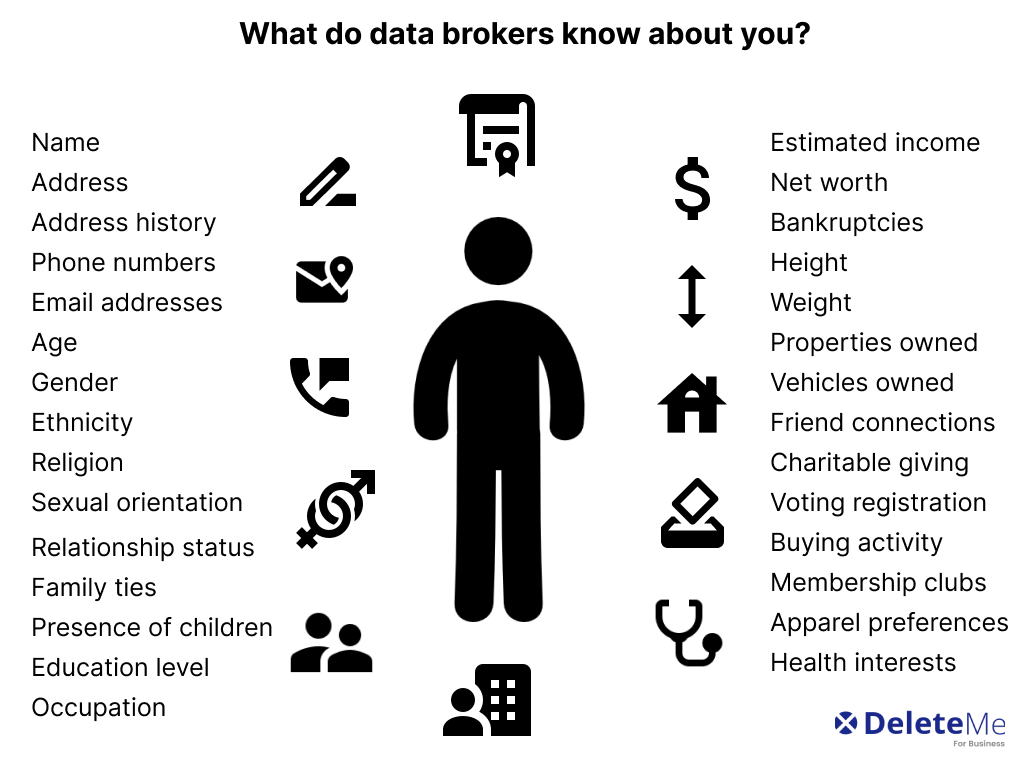

Data Brokerage is a cornerstone of modern digital commerce: an intermediary service that aggregates, refines, and distributes information. Brokers acquire large volumes of data from a wide range of sources, including public records, commercial transactions, web activity, social media engagement, and specialized sensor networks. This raw data then goes through cleaning, standardization, enrichment, and categorization, which turn it into structured, actionable intelligence. The broker's primary objective is to act as a bridge, ensuring that high-quality, relevant data flows efficiently between sellers and buyers across industries. That flow makes data available for uses ranging from hyper-targeted marketing to advanced scientific research.

The objective of this deep dive is to dissect the operational mechanics of data brokerage, illuminate its profound impact on business intelligence and AI development, and critically examine the ethical and regulatory challenges inherent in this powerful industry. We will explore the technical architecture that underpins large-scale data aggregation, the stringent governance features employed to manage privacy and consent, and how this service integrates with artificial intelligence and machine learning initiatives. Understanding Data Brokerage is no longer optional; it is fundamental to comprehending the future trajectory of business and technology.

Core Breakdown: The Mechanics and Value of Data Brokerage

At its technical core, Data Brokerage relies on robust infrastructure designed for massive-scale data ingestion, processing, and storage. Data acquisition is often automated, utilizing APIs, web scraping tools, and direct data feeds from partners. Once ingested, the data undergoes a multi-stage pipeline: data cleaning removes inconsistencies and errors; data standardization ensures uniformity across disparate sources; data enrichment adds value by merging datasets or appending additional attributes; and data categorization organizes information into usable segments (e.g., demographics, behavioral patterns, firmographics). Advanced analytical tools and machine learning algorithms are frequently employed here to identify patterns, validate data accuracy, and predict its potential value to buyers. These processes are critical for transforming raw, often chaotic, information into precise, high-value data products.
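The four pipeline stages can be sketched in a few small functions. This is a minimal illustration under stated assumptions, not any broker's actual implementation: the record fields (`email`, `country`, `age`), the `COUNTRY_CODES` mapping, the secondary "interests" source used for enrichment, and the age-band segments are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping used by the standardization stage.
COUNTRY_CODES = {"usa": "US", "united states": "US", "us": "US",
                 "germany": "DE", "deutschland": "DE", "de": "DE"}


@dataclass
class Record:
    email: str
    country: str
    age: int
    interest: Optional[str] = None  # filled in by enrichment
    segment: Optional[str] = None   # filled in by categorization


def clean(raw: list[dict]) -> list[dict]:
    """Cleaning: drop rows missing required fields or carrying a non-numeric age."""
    return [r for r in raw
            if r.get("email") and r.get("country")
            and r.get("age", "").strip().isdigit()]


def standardize(rows: list[dict]) -> list[Record]:
    """Standardization: normalize formats and deduplicate on lower-cased email."""
    by_email: dict[str, Record] = {}
    for r in rows:
        email = r["email"].strip().lower()
        raw_country = r["country"].strip()
        country = COUNTRY_CODES.get(raw_country.lower(), raw_country.upper())
        by_email[email] = Record(email, country, int(r["age"]))
    return list(by_email.values())


def enrich(records: list[Record], interests: dict[str, str]) -> list[Record]:
    """Enrichment: append an attribute from a second (hypothetical) source keyed by email."""
    for rec in records:
        rec.interest = interests.get(rec.email)
    return records


def categorize(records: list[Record]) -> list[Record]:
    """Categorization: assign each record to a country/age-band segment."""
    for rec in records:
        if rec.age < 18:
            band = "under-18"
        elif rec.age < 35:
            band = "18-34"
        elif rec.age < 55:
            band = "35-54"
        else:
            band = "55+"
        rec.segment = f"{rec.country}:{band}"
    return records


def run_pipeline(raw: list[dict], interests: dict[str, str]) -> list[Record]:
    return categorize(enrich(standardize(clean(raw)), interests))


raw = [
    {"email": " Alice@Example.com ", "country": "USA", "age": "29"},
    {"email": "alice@example.com", "country": "united states", "age": "29"},  # duplicate
    {"email": "bob@example.com", "country": "Germany", "age": "n/a"},          # fails cleaning
    {"email": "cara@example.com", "country": "Deutschland", "age": "61"},
]
products = run_pipeline(raw, {"cara@example.com": "travel"})
for p in products:
    print(p)
```

Keeping each stage as a separate function makes it easy to validate or swap one step (for example, a different deduplication key) without touching the others.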

A key component of modern data brokerage is the sophisticated matching algorithm. These algorithms analyze buyer requirements against the vast aggregated datasets, identifying optimal matches based on specific criteria such as target demographics, industry focus, geographic location, or behavioral indicators. This intelligent matching ensures that data buyers receive highly relevant and actionable information, significantly reducing their time-to-insight and improving the efficacy of their data-driven initiatives. Throughout this entire process, adherence to legal and ethical standards is paramount, necessitating robust privacy safeguards, consent management systems, and stringent security protocols to protect sensitive information.
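A matching step of this kind might look like the following sketch, which assumes a simple weighted-coverage score and a consent gate applied before scoring. The `DataProduct` and `BuyerRequest` fields, the weights, and the catalog entries are hypothetical; real matching engines are typically far richer.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    name: str
    industries: set[str]
    regions: set[str]
    segments: set[str]
    consented_purposes: set[str]  # purposes the data subjects agreed to (hypothetical field)


@dataclass
class BuyerRequest:
    purpose: str
    industries: set[str]
    regions: set[str]
    segments: set[str]
    # Illustrative weights; a real broker would tune these per client.
    weights: dict[str, float] = field(default_factory=lambda: {
        "industries": 0.3, "regions": 0.3, "segments": 0.4})


def coverage(wanted: set[str], offered: set[str]) -> float:
    """Fraction of the buyer's requested values that the product covers."""
    return len(wanted & offered) / len(wanted) if wanted else 1.0


def match(request: BuyerRequest, catalog: list[DataProduct], top_k: int = 3):
    scored = []
    for product in catalog:
        # Consent gate: skip products whose data was not collected for this purpose.
        if request.purpose not in product.consented_purposes:
            continue
        score = sum(weight * coverage(getattr(request, attr), getattr(product, attr))
                    for attr, weight in request.weights.items())
        if score > 0:
            scored.append((product.name, score))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


catalog = [
    DataProduct("us-retail-shoppers", {"retail"}, {"US"}, {"18-34", "35-54"},
                {"marketing", "analytics"}),
    DataProduct("eu-retail-panel", {"retail"}, {"DE", "FR"}, {"35-54"}, {"analytics"}),
    DataProduct("us-auto-intenders", {"automotive"}, {"US"}, {"35-54"}, {"marketing"}),
]
req = BuyerRequest(purpose="marketing", industries={"retail"},
                   regions={"US"}, segments={"18-34"})
results = match(req, catalog)
print(results)
```

Here `eu-retail-panel` is excluded before scoring because its data was not consented for marketing, which reflects the point that compliance checks belong inside the matching process rather than after it.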

Challenges and Barriers to Adoption in Data Brokerage

Despite its undeniable value, the Data Brokerage industry faces several significant challenges and barriers to widespread adoption and continued growth:

- Data Quality and Verification: One of the persistent hurdles is ensuring the accuracy, completeness, and recency of aggregated data. Poor data quality can lead to flawed insights and erode trust, necessitating continuous investment in validation and cleansing technologies. Data drift, where the statistical distribution of the data shifts over time so that a dataset no longer reflects current conditions, also threatens the long-term utility of datasets.

- Regulatory Complexity and Compliance Overhead: The proliferation of data privacy regulations like GDPR, CCPA, LGPD, and similar frameworks worldwide creates an immensely complex compliance landscape. Brokers must meticulously manage consent, data subject rights (e.g., right to access, right to be forgotten), and cross-border data transfer rules, requiring substantial legal and technical resources. Non-compliance can lead to hefty fines and severe reputational damage.

- Ethical Concerns and Public Trust: The sheer volume and granularity of data collected by brokers often raise ethical questions about surveillance, profiling, and potential misuse of personal information. Negative public perception and skepticism about data handling practices can hinder market acceptance and lead to calls for stricter regulation. Building and maintaining trust through transparency and robust ethical guidelines is paramount.

- Security Risks: Aggregating vast quantities of sensitive data makes brokers attractive targets for cyberattacks. Protecting this data from breaches, unauthorized access, and insider threats requires state-of-the-art cybersecurity infrastructure, continuous monitoring, and incident response capabilities.

- MLOps Complexity: While brokers provide data for AI/ML, integrating that data into complex MLOps pipelines can be challenging. Ensuring data format compatibility, managing version control of datasets, and providing continuous data feeds for model retraining add layers of operational complexity for both brokers and buyers.
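One common way to detect the data drift mentioned above is the Population Stability Index (PSI), which compares how a feature is distributed across bins in a baseline sample and a newer sample. The sketch below is one such check, chosen for illustration rather than taken from any particular broker; the age-band edges and the sample values are hypothetical, and the 0.25 threshold is a widely quoted rule of thumb, not a standard.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Share of values falling into each bin defined by sorted edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float],
        eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a new sample."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


edges = [25, 35, 45, 55]  # hypothetical age-band boundaries
baseline = [22, 28, 31, 38, 42, 47, 51, 58, 63, 33]
latest = [58, 61, 63, 66, 52, 57, 70, 49, 68, 59]

score = psi(baseline, latest, edges)
# 0.25 is a commonly quoted rule of thumb for a significant shift, not a standard.
print(f"PSI = {score:.2f}", "-> investigate drift" if score > 0.25 else "-> stable")
```

Running a check like this on every dataset refresh gives both broker and buyer an early signal that a versioned dataset may need re-validation before models are retrained on it.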

Business Value and ROI Derived from Data Brokerage

For both data buyers and sellers, the returns on investment in Data Brokerage are substantial and multifaceted:

- For Data Buyers:

- Enhanced Market Intelligence: Access to external, diverse datasets provides unparalleled insights into market trends, competitor strategies, and emerging opportunities, enabling more informed strategic decision-making.

- Superior Customer Segmentation & Targeted Marketing: Brokers provide rich demographic, psychographic, and behavioral data that allows businesses to create highly precise customer segments, leading to more effective and personalized marketing campaigns with higher conversion rates.

- Improved Predictive Analytics and AI Model Training: High-quality, diverse datasets are the lifeblood of robust AI and ML models. Brokers supply the necessary training data for predictive analytics in areas like fraud detection, churn prediction, and demand forecasting, leading to more accurate and reliable models.

- Competitive Advantage: Timely access to unique or hard-to-acquire datasets can provide businesses with a significant edge, allowing them to innovate faster, understand customer needs better, and react swiftly to market shifts.

- Reduced Costs and Time-to-Insight: Outsourcing data acquisition and processing to brokers reduces the need for businesses to build and maintain their own costly data-gathering infrastructure, shortening time-to-insight and allowing them to focus on core competencies.

- For Data Sellers:

- Data Monetization: Organizations holding valuable proprietary data can generate new revenue streams by selling access to their anonymized or aggregated datasets through brokers, transforming an otherwise dormant asset into a profitable one.

- Expanded Market Reach: Brokers provide a global platform, connecting sellers with a broad spectrum of potential buyers they might not otherwise reach, without the need for extensive direct sales and marketing efforts.

- Compliance and Security Expertise: Leveraging a broker’s established infrastructure often means benefiting from their expertise in data privacy compliance, consent management, and cybersecurity, reducing the burden and risk for data owners.
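Before a seller releases "anonymized or aggregated" data, one well-known sanity check is k-anonymity: every combination of quasi-identifiers (attributes that could be linked to other data to re-identify someone) should appear at least k times. The sketch below swaps in this technique for illustration only; the column names and rows are hypothetical, and k-anonymity alone does not guarantee anonymity (for example, it does not protect against groups whose sensitive values are all identical).

```python
from collections import Counter


def group_sizes(rows: list[dict], quasi_identifiers: tuple[str, ...]) -> Counter:
    """Count rows per combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def k_of(rows: list[dict], quasi_identifiers: tuple[str, ...]) -> int:
    """Smallest group size: the dataset is k-anonymous for this k."""
    sizes = group_sizes(rows, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def suppress_small_groups(rows: list[dict], quasi_identifiers: tuple[str, ...],
                          k: int) -> list[dict]:
    """Drop rows whose quasi-identifier combination appears fewer than k times."""
    sizes = group_sizes(rows, quasi_identifiers)
    return [row for row in rows
            if sizes[tuple(row[q] for q in quasi_identifiers)] >= k]


qi = ("zip3", "age_band")  # hypothetical quasi-identifier columns
rows = [
    {"zip3": "941", "age_band": "25-34", "spend": 120},
    {"zip3": "941", "age_band": "25-34", "spend": 80},
    {"zip3": "941", "age_band": "25-34", "spend": 95},
    {"zip3": "100", "age_band": "55+", "spend": 300},  # unique combination
]
print(k_of(rows, qi))
released = suppress_small_groups(rows, qi, k=3)
print(len(released), k_of(released, qi))
```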

Comparative Insight: Data Brokerage vs. Traditional Data Models

To fully appreciate the unique value proposition of Data Brokerage, it’s essential to compare it against more traditional data acquisition and management paradigms, such as internal data lakes/warehouses and direct B2B data exchanges.

- Data Brokerage vs. Traditional Data Lakes/Warehouses:

- Scope of Data: Traditional data lakes and warehouses primarily house an organization’s internal, first-party data (operational data, customer interactions, ERP systems). While rich for internal analysis, they often lack external market context, competitor insights, or broad demographic patterns. Data brokers, conversely, specialize in aggregating vast quantities of *external*, third-party data, offering a panoramic view beyond an organization’s four walls.

- Acquisition & Processing: With internal systems, data acquisition is typically confined to organizational boundaries, and processing pipelines are designed for specific internal use cases. Brokers, however, are experts in large-scale, multi-source data ingestion, cleansing, standardization, and enrichment from countless external origins, a monumental task for individual companies to replicate.

- Use Cases: Data lakes/warehouses are excellent for operational reporting, internal analytics, and building models based on proprietary customer behavior. Data Brokerage data is invaluable for market segmentation, competitive intelligence, trend analysis, external risk assessment, and enriching existing internal datasets for a more holistic view.

- Data Brokerage vs. Direct B2B Data Exchanges/APIs:

- Efficiency and Reach: Direct data exchanges require establishing individual agreements, technical integrations, and ongoing relationship management with each data provider. This can be time-consuming and resource-intensive, limiting the breadth of data sources. Data brokers act as a single point of contact, aggregating data from hundreds or thousands of sources, offering unparalleled efficiency and reach for buyers seeking diverse datasets.

- Standardization & Quality: Data from direct APIs often comes in varying formats and quality levels, requiring significant in-house effort for integration and standardization. Brokers perform this critical work upfront, providing curated, often standardized and enriched datasets, significantly reducing the integration burden on the buyer.

- Compliance Burden: Managing privacy compliance, consent, and data governance across numerous direct relationships can be overwhelming. Reputable data brokers invest heavily in robust compliance frameworks, abstracting much of this complexity for both buyers and sellers.

- Data Brokerage vs. Cloud Data Marketplaces:

- Scope and Specialization: Cloud data marketplaces (e.g., AWS Data Exchange, Snowflake Marketplace) offer convenient access to various datasets, often from specific vendors or within a particular cloud ecosystem. Although these marketplaces are growing, they may not offer the same depth of specialized, aggregated, and highly refined datasets that dedicated data brokers, with their extensive networks and processing capabilities, can provide.

- Intermediation Level: Cloud marketplaces often act as a platform facilitating direct transactions. Data brokers often offer a more ‘managed service’ approach, actively curating, enhancing, and even custom-tailoring datasets for specific client needs beyond just listing available data.
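The standardization work that brokers perform upfront often amounts to writing one adapter per source that maps each provider's fields, units, and timestamp formats onto a common schema. The following sketch assumes two hypothetical vendors (`vendor_a` reports revenue in thousands of USD with ISO dates; `vendor_b` reports revenue in cents with Unix timestamps); the field names are invented for illustration.

```python
from datetime import datetime, timezone


def from_vendor_a(rec: dict) -> dict:
    # Hypothetical vendor A: revenue in thousands of USD, ISO-8601 date string.
    return {
        "company": rec["companyName"].strip(),
        "revenue_usd": float(rec["revenueK"]) * 1000,
        "updated": datetime.fromisoformat(rec["lastUpdated"]).date().isoformat(),
    }


def from_vendor_b(rec: dict) -> dict:
    # Hypothetical vendor B: revenue in USD cents, Unix epoch seconds.
    return {
        "company": rec["name"].strip(),
        "revenue_usd": rec["revenue_cents"] / 100,
        "updated": datetime.fromtimestamp(rec["updated_at"], tz=timezone.utc)
                           .date().isoformat(),
    }


ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(source: str, records: list[dict]) -> list[dict]:
    """Map raw records from one source onto the common schema, tagging their origin."""
    adapter = ADAPTERS[source]
    return [dict(adapter(r), source=source) for r in records]


a = normalize("vendor_a", [{"companyName": " Acme Corp ", "revenueK": "1250",
                            "lastUpdated": "2024-03-01"}])
b = normalize("vendor_b", [{"name": "Globex", "revenue_cents": 98765400,
                            "updated_at": 1709251200}])
print(a + b)
```

With a direct-exchange model each buyer would maintain adapters like these for every provider; a broker maintains them once and delivers only the common schema.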

In essence, while traditional models are crucial for internal data leverage, and direct exchanges offer bespoke solutions, Data Brokerage excels in providing broad, curated, and easily accessible external intelligence at scale. It fills a critical void by democratizing access to diverse, high-value data, enabling organizations of all sizes to make data-driven decisions that would otherwise be out of reach.

World2Data Verdict: The Future Imperative of Trust and Transparency

The growth of Data Brokerage from a nascent industry into a critical component of the global information economy is undeniable. Looking ahead, the sector's evolution will be inextricably linked to two factors: trust and transparency. In World2Data.com's view, the future success of data brokers hinges not just on their ability to aggregate and process vast quantities of data, but more critically on their commitment to ethical data practices, robust privacy protections, and clear communication with both data providers and consumers. As regulatory frameworks tighten and public awareness of data privacy grows, brokers who prioritize consent management, provide clear provenance for their data, and implement auditable security measures will differentiate themselves and thrive. Furthermore, documented data lineage, complemented by explainable AI (XAI) techniques wherever attributes are inferred by models, will become crucial, allowing buyers to understand not just ‘what’ data they are getting, but ‘how’ it was derived and curated. We predict that the most successful data brokers of tomorrow will be those who actively champion data stewardship, turning potential liabilities into competitive advantages built on integrity and fostering a more sustainable and responsible data ecosystem for all.
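What "clear provenance" and "auditable" could mean in practice is sketched below as a hash-chained provenance log, where each processing step records the digest of the step before it so that later edits are detectable. This is an illustrative assumption, not a description of how any broker works; the entry fields (including `consent_basis`), dataset names, and step labels are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace

GENESIS = "0" * 64


@dataclass(frozen=True)
class ProvenanceEntry:
    dataset: str
    step: str           # e.g. "ingest", "clean", "enrich"
    source: str         # upstream origin or tool that produced this step
    consent_basis: str  # hypothetical label for the recorded legal basis
    prev_hash: str      # digest of the previous entry, chaining the log

    def digest(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append(log: list[ProvenanceEntry], dataset: str, step: str,
           source: str, consent_basis: str) -> None:
    prev = log[-1].digest() if log else GENESIS
    log.append(ProvenanceEntry(dataset, step, source, consent_basis, prev))


def verify(log: list[ProvenanceEntry]) -> bool:
    """Check that every entry points at the digest of the entry before it."""
    expected = GENESIS
    for entry in log:
        if entry.prev_hash != expected:
            return False
        expected = entry.digest()
    return True


log: list[ProvenanceEntry] = []
append(log, "us-retail-shoppers", "ingest", "partner-feed-17", "opt-in")
append(log, "us-retail-shoppers", "clean", "dedupe-v2", "opt-in")
append(log, "us-retail-shoppers", "enrich", "interest-model-v5", "opt-in")
print(verify(log))  # True

tampered = log.copy()
tampered[0] = replace(tampered[0], source="unknown-scrape")
print(verify(tampered))  # False: entry 1 no longer matches entry 0's digest
```

One design caveat: a chain like this only detects changes to earlier entries. Editing the newest entry goes unnoticed unless its digest is also published or stored somewhere the broker cannot rewrite, which is where an external audit trail would come in.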