IoT Data Streams: The Real-time Engine Powering Smart Devices and Automation

At the heart of modern technological progress lies the continuous, real-time flow of information known as IoT Data Streams. These dynamic data pipelines are the unseen force driving intelligent systems, forming the very essence of how smart devices gather, process, and act on information across diverse environments. Indispensable for translating raw sensor readings into actionable insights, IoT Data Streams enable everything from seamless smart home experiences to advanced, highly automated industrial operations, transforming our interaction with the physical world.

Introduction: The Unseen Flow That Drives Intelligence

The proliferation of the Internet of Things (IoT) has dramatically reshaped industries and daily life, introducing a new paradigm where physical objects are imbued with digital intelligence. Central to this transformation are IoT Data Streams, the continuous torrents of information generated by countless connected devices. These streams are not merely static datasets; they are living arteries that transmit vital telemetry, status updates, and environmental readings in real time, serving as the critical infrastructure that bridges the physical world with digital intelligence. For World2Data.com, understanding and leveraging these streams is paramount to unlocking the full potential of data-driven automation and smart ecosystems.

From wearable fitness trackers to industrial sensors monitoring intricate machinery, every IoT device contributes to an ever-expanding universe of data. The objective of this deep dive is to explore the architecture, challenges, and immense value proposition of IoT Data Streams. We will analyze how these streams facilitate real-time decision-making, power advanced automation, and underpin sophisticated AI/ML integrations, fundamentally altering how businesses operate and how individuals interact with their environments. This analysis will also highlight the critical role of robust stream processing platforms and the essential governance features required to manage this deluge of information effectively.

Core Breakdown: Architecture, Challenges, and Business Value of IoT Data Streams

IoT Data Streams are far more than just data conduits; they represent a complex, event-driven ecosystem designed for high-volume, low-latency data processing. The architecture supporting these streams is multifaceted, involving a blend of edge computing, cloud services, and sophisticated stream processing engines. This section delves into the technical underpinnings, key challenges, and tangible business benefits derived from effectively managing these continuous data flows.

The Foundation of Smart Ecosystems: Architectural Components and Technologies

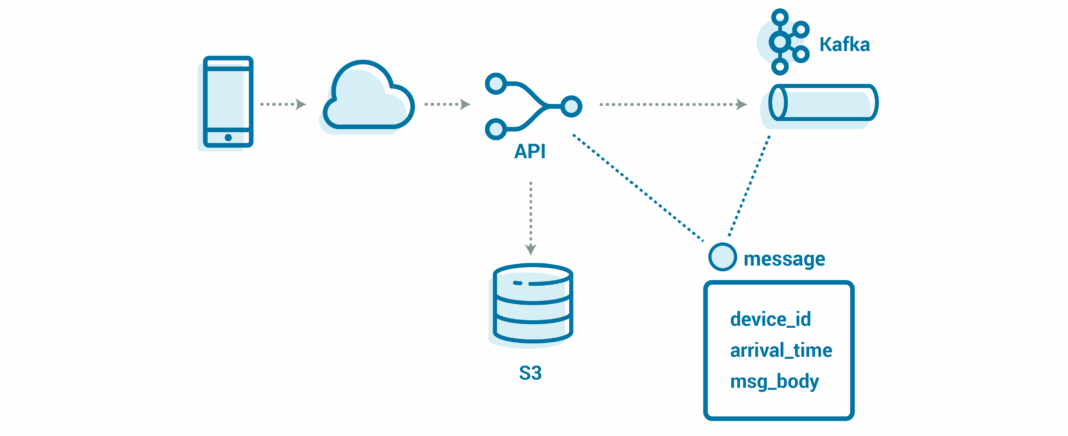

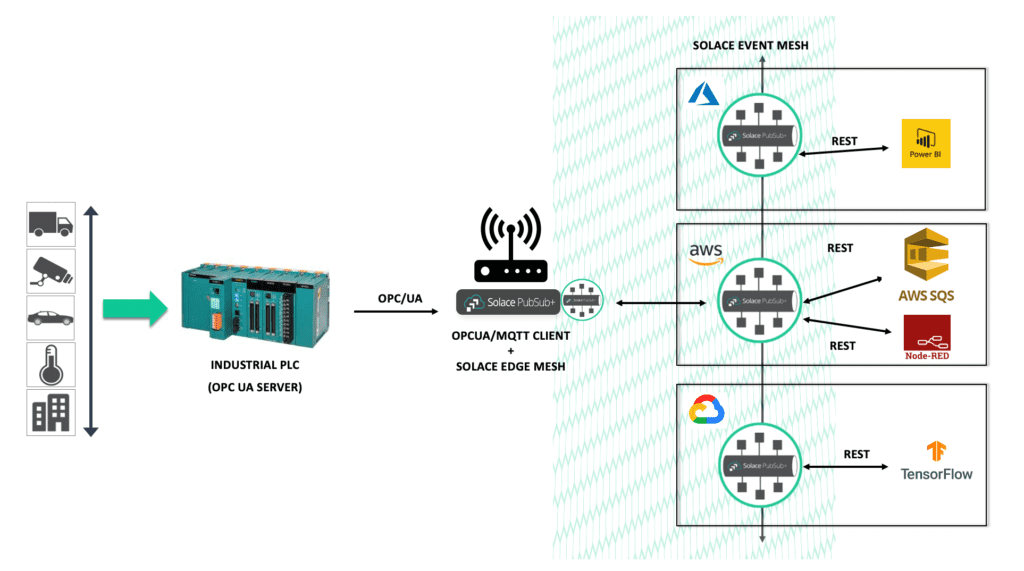

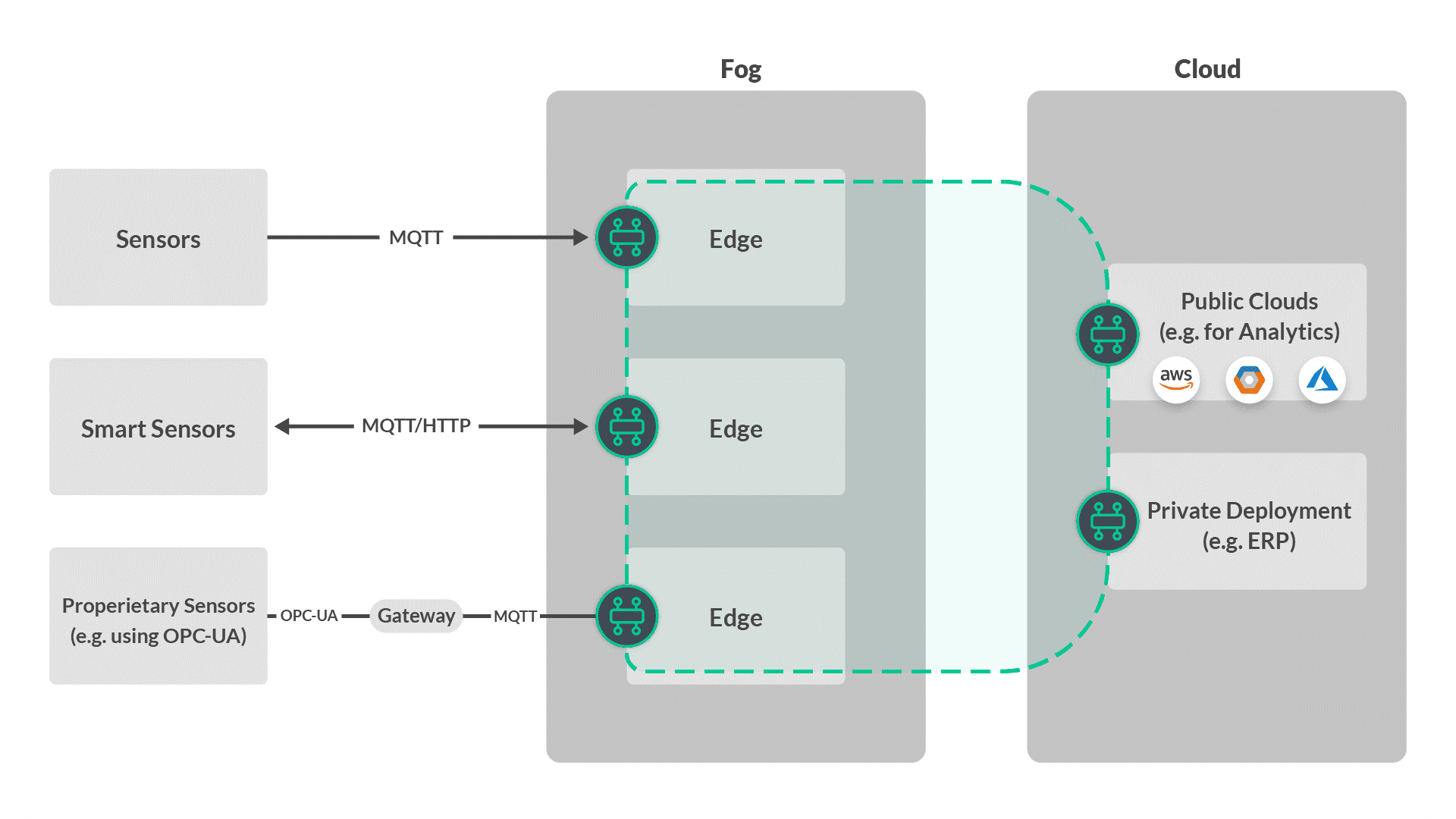

The journey of an IoT data stream begins at the edge, where devices – ranging from simple sensors to complex industrial machines – collect raw data. This data is then transmitted through various protocols, predominantly

Upon collection, the data streams often encounter

Challenges and Barriers to Adoption in IoT Data Management

Despite their transformative potential, managing IoT Data Streams presents significant challenges:

- Data Volume, Velocity, and Variety (the 3 Vs): The sheer scale of data generated by millions of devices, often arriving at high frequency and in diverse formats, overwhelms traditional data infrastructure. Ensuring systems can ingest, process, and store this data without bottlenecks is a constant battle.

- Data Quality and Integrity: IoT sensors can suffer from calibration issues, environmental interference, or network glitches, leading to noisy, incomplete, or inaccurate data. Maintaining data quality, filtering out erroneous readings, and ensuring data integrity are crucial for reliable insights.

- Security and Privacy: IoT devices are often deployed in exposed environments, making them vulnerable targets. Securing data in transit (e.g., via data encryption) and at rest, implementing robust device authentication and authorization, and enforcing role-based access control are paramount. Privacy concerns also necessitate data anonymization and strict data retention policies.

- Latency and Real-time Processing: Many IoT use cases, such as predictive maintenance or autonomous vehicles, demand low and predictable response times, in the most time-critical cases on the order of milliseconds. Achieving this low latency across a distributed architecture, from edge to cloud, is a complex engineering feat.

- Interoperability and Standardization: A lack of universal standards for device communication, data formats, and platform integration often leads to siloed systems and vendor lock-in, hindering seamless integration and scalability.

- MLOps Complexity: Integrating machine learning models with continuous data streams introduces MLOps challenges such as model drift detection, retraining pipelines, and ensuring real-time model serving at scale. Machine learning at the edge further complicates deployment and management.
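To make the data quality challenge above more concrete, the following is a minimal Python sketch of a cleaning step that drops missing, implausible, and duplicated readings before they reach downstream analytics. The `Reading` type, the `clean` function, and the plausibility bounds are hypothetical names and values chosen for illustration; they are not taken from any specific IoT platform.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class Reading:
    """Hypothetical sensor reading used only for this illustration."""
    device_id: str
    timestamp: float          # seconds since epoch
    value: Optional[float]    # None models a dropped or missing measurement


def clean(readings: Iterable[Reading], low: float, high: float) -> Iterator[Reading]:
    """Yield readings that are present, within [low, high], and not duplicated."""
    seen = set()
    for r in readings:
        if r.value is None:
            continue  # incomplete reading
        if not (low <= r.value <= high):
            continue  # outside the plausible range (this also rejects NaN)
        key = (r.device_id, r.timestamp)
        if key in seen:
            continue  # duplicate, e.g. caused by a network retry
        seen.add(key)
        yield r


raw = [
    Reading("sensor-a", 1.0, 20.0),
    Reading("sensor-a", 1.0, 20.0),   # duplicate
    Reading("sensor-a", 2.0, None),   # missing value
    Reading("sensor-a", 3.0, 999.0),  # implausible spike
    Reading("sensor-b", 1.0, 21.5),
]
cleaned = list(clean(raw, low=-40.0, high=85.0))
```

In this sketch the `seen` set grows without bound, so a long-running pipeline would likely need to expire old keys, for example by keeping only those from a recent time window.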

Business Value and Return on Investment (ROI) from IoT Data Streams

The effective harnessing of IoT Data Streams delivers substantial business value and significant ROI:

- Enhanced Operational Efficiency: Real-time monitoring of assets and processes allows for immediate identification of inefficiencies, leading to optimized resource utilization, reduced waste, and streamlined operations. This includes predictive maintenance, where anomalies are detected before failures occur, saving costly downtime.

- New Business Models and Services: The ability to collect and analyze granular data enables businesses to offer innovative, data-driven services, such as usage-based insurance, pay-per-use equipment, or subscription models based on device performance.

- Improved Decision Making: Real-time operational intelligence derived from streams empowers stakeholders with up-to-the-minute insights, facilitating agile and informed decision-making across all levels of an organization.

- Superior Customer Experience: Smart devices can personalize experiences, automate routine tasks, and respond proactively to user needs, leading to increased customer satisfaction and loyalty.

- Cost Reduction: By preventing equipment failures, optimizing energy consumption, and automating tasks, businesses can significantly reduce operational costs and extend the lifespan of assets.

- Advanced AI/ML Integrations: IoT streams provide the continuous input for anomaly detection, predictive analytics, and automated decision making. This high-quality, real-time data is critical for training and deploying robust AI models, essentially acting as a living “feature store” for AI applications operating on continuous data.
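As one illustration of the anomaly detection mentioned in the list above, here is a small Python sketch that flags a reading when it deviates strongly from a rolling window of recent values (a rolling z-score). This is only one simple technique among many; the class name `RollingZScoreDetector`, the window size, the warm-up count, and the threshold are assumptions made for this example rather than recommended settings.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flags values far from the mean of recently accepted readings."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self.window = deque(maxlen=window)  # most recent accepted readings
        self.threshold = threshold          # z-score above which we flag
        self.warmup = warmup                # samples needed before flagging

    def update(self, value: float) -> bool:
        """Return True if value looks anomalous relative to the window."""
        is_anomaly = False
        if len(self.window) >= self.warmup:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Flagged values are kept out of the baseline so that a single
            # spike does not inflate the spread used for later readings.
            self.window.append(value)
        return is_anomaly


vibration = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 50.0, 10.0]
detector = RollingZScoreDetector()
flags = [detector.update(v) for v in vibration]
```

Excluding flagged values from the window is a design choice with a trade-off: it keeps the baseline stable against isolated spikes, but a genuine, lasting shift in the signal would keep being flagged until the detector is reset or the policy is changed.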

Comparative Insight: IoT Data Streams vs. Traditional Data Architectures

The advent of IoT Data Streams has necessitated a fundamental shift in data architecture, moving beyond the capabilities of traditional data lakes and data warehouses. While these legacy systems remain crucial for historical analysis and batch processing, they are ill-equipped to handle the unique demands of IoT data.

Traditional data warehouses, built primarily for structured data and complex SQL queries, excel at reporting on past events. Their batch-oriented extract, transform, load (ETL) processes are inherently slow and cannot cope with the velocity of IoT data. Similarly, data lakes, while capable of storing vast amounts of raw, unstructured data, typically process this data in batches for later analysis, making them unsuitable for real-time applications where immediate action is required.

IoT Data Streams, in contrast, demand a real-time,

Key differentiators include:

- Latency: Traditional systems operate with minutes to hours of latency; stream processing aims for sub-second latency.

- Data Freshness: IoT streams provide “fresh” data for current decision-making, whereas data lakes/warehouses typically offer “stale” data for retrospective analysis.

- Processing Model: Batch processing for traditional systems vs. continuous, event-by-event processing for streams.

- Use Cases: Business intelligence and historical reporting for traditional systems vs. real-time operational intelligence, anomaly detection, and automated decision making for IoT streams.
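The difference in processing model can be sketched in a few lines of Python. The example below, which assumes a hypothetical `RunningStats` class and made-up temperature readings, updates a mean and variance one event at a time using Welford's online algorithm, so a current result is available after every event. A batch job would compute the same figures, but only after the whole dataset has been collected.

```python
import math
from statistics import mean, pvariance


class RunningStats:
    """Event-by-event mean and population variance (Welford's algorithm)."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        return self._m2 / self.n if self.n else 0.0


readings = [20.0, 21.0, 19.5, 22.0, 20.5]

# Streaming style: state is updated as each event arrives.
stats = RunningStats()
for x in readings:
    stats.update(x)

# Batch style: the same figures, computed once over the full dataset.
batch_mean = mean(readings)
batch_variance = pvariance(readings)

agrees = math.isclose(stats.mean, batch_mean) and math.isclose(stats.variance, batch_variance)
```

The streaming version needs only constant memory per metric, which is part of why this style suits high-volume device data.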

While distinct, these architectures are not mutually exclusive. Often, filtered and aggregated IoT data streams are eventually archived into data lakes or warehouses for long-term storage, compliance, and deeper, historical machine learning model training. The modern data landscape is a hybrid, where streaming and batch processing coexist, each serving its unique purpose to create a holistic data strategy.
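The aggregation step described above, in which raw events are condensed before being archived, is often done with fixed-size (tumbling) time windows. The Python sketch below is a simplified illustration under stated assumptions: the function name `tumbling_window_avg`, the `(timestamp, value)` event shape, and the 60-second window are all hypothetical, and the function buffers its input in memory, whereas a real stream processor would typically emit each window as it closes.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def tumbling_window_avg(
    events: Iterable[Tuple[float, float]], window_s: float
) -> List[Tuple[float, float, int]]:
    """Group (timestamp, value) events into fixed, non-overlapping windows.

    Returns one (window_start, mean_value, event_count) tuple per window,
    ordered by window start.
    """
    buckets = defaultdict(list)
    for ts, value in events:
        window_start = (ts // window_s) * window_s
        buckets[window_start].append(value)
    return [
        (start, sum(values) / len(values), len(values))
        for start, values in sorted(buckets.items())
    ]


events = [(0.0, 10.0), (30.0, 20.0), (61.0, 30.0), (119.0, 50.0), (120.0, 5.0)]
summary = tumbling_window_avg(events, window_s=60.0)
```

Here five raw events collapse into three summary rows, which is the kind of reduction that makes long-term storage in a data lake or warehouse more manageable.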

World2Data Verdict: Embracing the Real-time Imperative

The inexorable growth of the IoT landscape underscores an undeniable truth: IoT Data Streams are not merely a feature but the foundational imperative for any organization seeking competitive advantage in a connected world. World2Data.com asserts that businesses must pivot from reactive, batch-oriented data strategies to proactive, real-time stream processing capabilities. The future demands robust, secure, and scalable architectures that can ingest, process, and analyze data at the speed of an event, driving immediate insights and automated actions. Investing in platform categories like