ETL Platform Best Practices for Rapid Data Processing

- Platform Category: ETL/ELT Platforms, Data Integration Platforms

- Core Technology/Architecture: Cloud-native, ELT (Extract, Load, Transform) approach, Distributed Processing, Real-time Data Streaming, Serverless architecture

- Key Data Governance Feature: Robust Data Quality Checks, Automated Metadata Management, Data Lineage Tracking, Auditing and Logging of transformations

- Primary AI/ML Integration: AI-driven Data Quality Automation, Anomaly Detection in data pipelines, Predictive scaling of resources, Intelligent data mapping and schema recommendations

- Main Competitors/Alternatives: Fivetran, Stitch Data, Matillion, Talend, Informatica PowerCenter, AWS Glue, Azure Data Factory, Google Cloud Dataflow

In today’s hyper-competitive and data-intensive business landscape, the ability to process vast quantities of information at speed is no longer a luxury but a fundamental necessity. An efficient ETL Platform is not merely a technical tool; it is the cornerstone of timely insights and operational excellence, ensuring data pipelines are robust and agile to meet ever-evolving business demands. Mastering ETL Platform best practices for faster data processing is crucial for any organization aiming to leverage its data for strategic advantage and maintain a competitive edge.

Introduction: The Imperative for Accelerated Data Processing

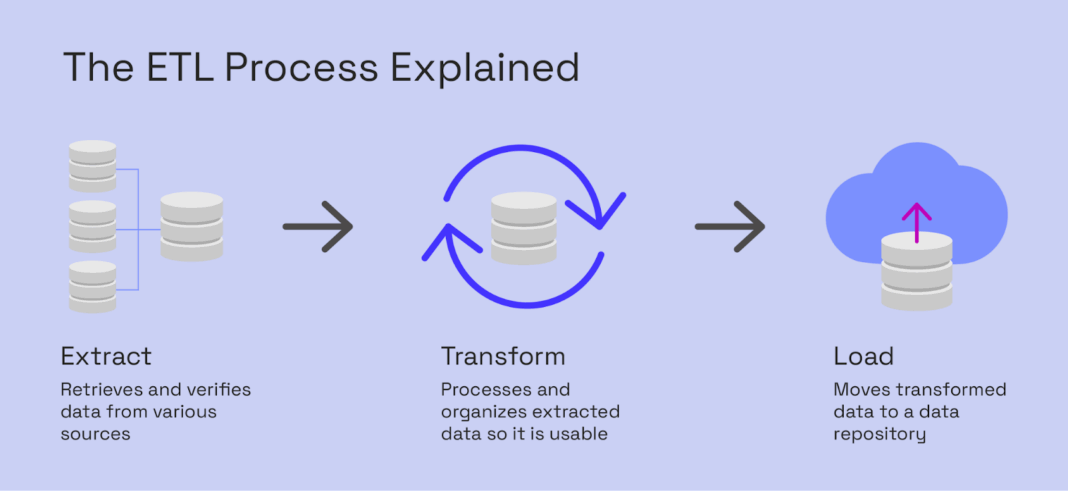

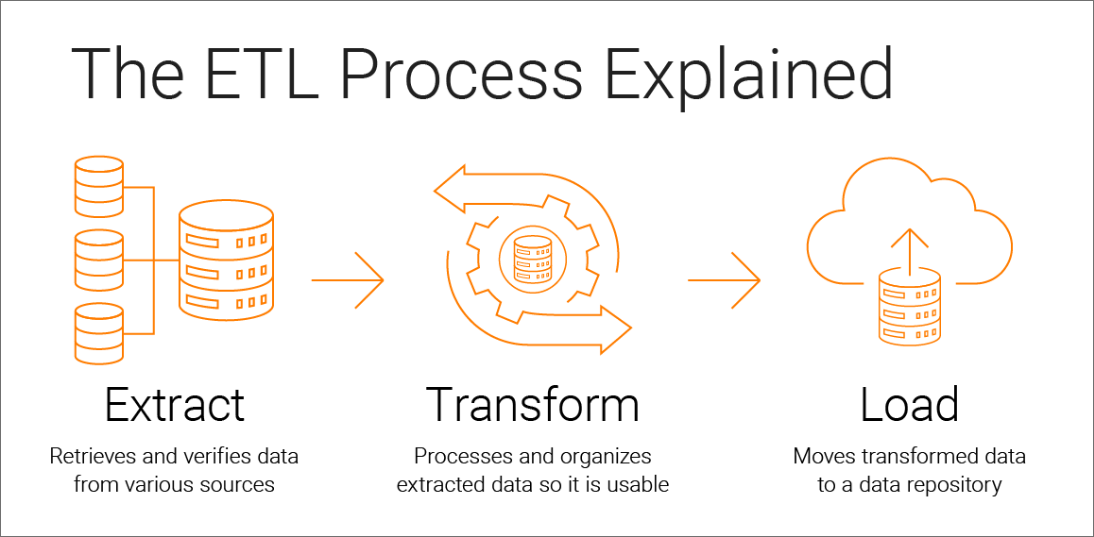

The proliferation of data sources, coupled with the increasing demand for real-time analytics and artificial intelligence (AI) initiatives, has placed unprecedented pressure on traditional data processing methodologies. Organizations are collecting data at an exponential rate from diverse systems, including transactional databases, IoT devices, social media, and third-party APIs. Extract, Transform, Load (ETL) processes, which historically have been batch-oriented and resource-intensive, must now evolve to handle this new paradigm. An optimized ETL Platform is critical for bridging the gap between raw data and actionable intelligence, ensuring that data is not only available but also clean, consistent, and ready for consumption by business intelligence tools, machine learning models, and critical decision-makers. This article delves into the essential best practices that empower organizations to achieve faster data processing and maximize the efficiency of their ETL infrastructure.

Core Breakdown: Architecting for Speed and Efficiency

1. Optimize Data Extraction Techniques

The initial stage of the ETL process, data extraction, can often be a significant bottleneck if not managed effectively. The goal is to retrieve necessary data from source systems with minimal impact and maximum speed.

- Implement Incremental Loading: Instead of performing full data loads every time, which is resource-intensive and time-consuming, focus on extracting only new or modified data. This approach significantly reduces the volume of data processed during each cycle, leading to quicker run times and less strain on source systems. An intelligent ETL Platform facilitates this by tracking changes effectively, often through timestamp columns, sequence numbers, or log-based mechanisms.

- Utilize Change Data Capture (CDC): CDC is a sophisticated form of incremental loading that captures and delivers only the changes made to source databases since the last extraction. This can be implemented at the database level (e.g., using transaction logs or triggers) or through specific CDC tools. By focusing solely on deltas, CDC minimizes the data volume transferred and processed, making it ideal for near real-time data integration and ensuring data freshness without overwhelming source systems.

- Parallelize Extractions: When dealing with multiple independent data sources or large tables that can be logically partitioned, concurrent extraction streams can dramatically reduce overall extraction time. Modern ETL Platforms support parallel processing, allowing different data sets or segments of a single large dataset to be extracted simultaneously.

- Source System Optimization: Ensure source databases are properly indexed and queries are optimized for extraction. Collaborating with source system administrators can identify performance bottlenecks and implement necessary adjustments, such as creating dedicated replication instances or read replicas to offload ETL queries.
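
The timestamp-based variant of incremental loading described above can be sketched as follows. This is a minimal illustration, not a production extractor: the `orders` table, its `updated_at` column, and the `extract_incremental` helper are hypothetical names, and an in-memory SQLite database stands in for the source system.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only when new rows were actually seen.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table, used only for this illustration.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T00:00:00"),
        (2, 25.5, "2024-01-02T00:00:00"),
        (3, 7.25, "2024-01-03T00:00:00"),
    ],
)

# First run: only rows changed after the stored watermark are extracted.
first_batch, watermark = extract_incremental(source, "2024-01-01T12:00:00")

# A source row changes; the next run picks up just that row.
source.execute(
    "UPDATE orders SET amount = 12.0, updated_at = '2024-01-04T00:00:00' WHERE id = 1"
)
second_batch, watermark = extract_incremental(source, watermark)
```

The sketch relies on ISO 8601 timestamps sorting correctly as text. A strict `>` comparison can miss rows that share the watermark timestamp but commit later, which is one reason log-based CDC is often preferred when such gaps matter.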

2. Streamline Data Transformation Processes

Transformation is often the most complex and resource-intensive stage of ETL, involving data cleansing, standardization, enrichment, and aggregation. Efficient transformation is key to avoiding bottlenecks and ensuring data quality.

- Standardize Data Cleansing Rules: Establish clear, consistent, and automated data cleansing rules to handle missing values, duplicates, inconsistencies, and erroneous entries. Automating these rules within the ETL Platform reduces manual effort, improves data quality, and ensures transformations are applied uniformly, making data ready for analysis promptly.

- Leverage Parallel Processing: Just like extraction, transformation operations can greatly benefit from parallelization. By breaking down large datasets into smaller chunks and processing them concurrently across multiple compute nodes, complex transformations can be completed much faster. Distributed processing frameworks such as Apache Spark, which is often integrated into cloud ETL Platforms, are designed specifically for this purpose.

- Push-Down Transformations: Whenever possible, perform transformations closer to the data source or target database. This “push-down” approach leverages the processing power of the database engines, which are often highly optimized for data manipulation, reducing the amount of data that needs to be moved and processed by the ETL engine itself. This is a hallmark of the ELT (Extract, Load, Transform) paradigm.

- Optimize Transformation Logic: Review and refine SQL queries and transformation scripts for efficiency. Avoid complex nested subqueries where simpler joins or temporary tables might suffice. Profile transformation steps to identify performance bottlenecks and refactor inefficient logic.

- Utilize In-Memory Processing: For certain transformation steps, especially aggregation or complex calculations, leveraging in-memory processing capabilities offered by modern ETL Platforms can significantly speed up execution by reducing disk I/O.
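
To make the push-down idea concrete, the sketch below computes the same aggregate two ways: once by pulling every row into the ETL process, and once by letting the database do the work. The `sales` table and its columns are hypothetical, and SQLite is used only as a convenient stand-in for a source or target database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 100.0), ("west", 40.0), ("east", 60.0), ("west", 10.0)],
)

# Engine-side approach: every row crosses the wire, then the ETL process aggregates.
totals_in_engine = {}
for region, amount in conn.execute("SELECT region, amount FROM sales"):
    totals_in_engine[region] = totals_in_engine.get(region, 0.0) + amount

# Push-down approach: the database aggregates and returns one row per region.
totals_pushed_down = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region")
)
```

Both approaches produce the same totals, but the push-down query transfers one row per region rather than one row per sale. That difference grows with table size.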

3. Enhance Data Loading Efficiency

The final step of the ETL process, data loading, demands careful attention to ensure data is written to the target system as quickly and efficiently as possible, maximizing throughput and availability.

- Choose Appropriate Loading Strategies:

  - Bulk Loading: For initial loads or large dataset updates, use the target database’s bulk loading mechanism. Bulk loaders typically avoid much of the per-row overhead of standard inserts (such as statement parsing and per-row logging), which makes them significantly faster than row-by-row inserts.

  - Micro-batching: For near real-time scenarios, small batches of data can be loaded frequently instead of streaming continuously, balancing latency with efficiency.

- Partitioning and Indexing: Ensure target tables are properly partitioned and indexed to support efficient data inserts and subsequent query performance. For large loads, temporarily disabling non-critical indexes and rebuilding them once the load is complete can speed up the loading process.

- Optimize Target Database Performance:

  - Resource Allocation: Ensure the target database has sufficient CPU, memory, and I/O resources to handle the incoming data volume.

  - Database Locking: Minimize database lock contention during loading. Strategies like loading into staging tables and then using a single atomic swap or merge operation can reduce the impact on concurrent users.

  - Parallel Loads: When loading into partitioned tables or multiple tables, parallel loading streams can drastically reduce the overall load time.

- Error Handling and Recovery: Implement robust error logging and graceful recovery mechanisms. Failed loads should not corrupt existing data and should be easily restartable from the point of failure.
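
The staging-table and recovery guidance above can be sketched in a few lines. This is an illustration under stated assumptions rather than a definitive loader: the `customers` and `customers_staging` tables and the `load_with_staging` helper are hypothetical, `executemany` is a batched insert standing in for a true bulk loader, and a delete-and-insert inside one transaction stands in for an atomic swap or merge.

```python
import sqlite3

def load_with_staging(conn, rows):
    """Load rows into a staging table, then replace the target in one transaction."""
    try:
        with conn:  # commits on success, rolls back on any exception
            conn.execute("DELETE FROM customers_staging")
            conn.executemany(
                "INSERT INTO customers_staging (id, email) VALUES (?, ?)", rows
            )
            conn.execute("DELETE FROM customers")
            conn.execute(
                "INSERT INTO customers SELECT id, email FROM customers_staging"
            )
        return True
    except sqlite3.Error as exc:
        # The rollback leaves the target exactly as it was before the load.
        print(f"load failed, target left unchanged: {exc}")
        return False

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE customers_staging (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"
)

ok_first = load_with_staging(conn, [(1, "a@example.com"), (2, "b@example.com")])
# The second batch contains a NULL email, so the whole load is rolled back.
ok_second = load_with_staging(conn, [(3, "c@example.com"), (4, None)])
final_rows = conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()
```

Because the failed batch never touches the committed target, the job can simply be rerun once the bad record is fixed. Real warehouses usually offer cheaper swap primitives, such as table renames or partition exchange, than the delete-and-insert used here.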

4. Embrace Cloud-Native ETL Solutions

Leveraging cloud-native ETL Platform solutions provides unparalleled flexibility, scalability, and cost-efficiency, which are critical for faster data processing in today’s dynamic environments.

- Scale Resources Dynamically: Cloud platforms offer elasticity, allowing an ETL Platform to dynamically adjust compute, memory, and storage resources based on demand. This eliminates the need for expensive over-provisioning and keeps processing fast during peak times by scaling up quickly when load increases. Conversely, resources can be scaled down during off-peak hours to save costs.

- Integrate Managed Services: Cloud providers and specialist vendors offer fully managed services for data integration (e.g., AWS Glue, Azure Data Factory, Google Cloud Dataflow), data warehousing (e.g., Snowflake, BigQuery, Redshift), and streaming (e.g., Amazon Kinesis or a managed Apache Kafka offering). Utilizing these services reduces operational overhead, as the provider handles infrastructure management, patching, and scaling, allowing teams to focus on data logic rather than infrastructure.

- Serverless Architecture: Many modern ETL Platforms leverage serverless computing (e.g., AWS Lambda, Azure Functions) for specific transformation tasks. This paradigm means you only pay for the compute resources consumed during execution, making it highly cost-effective for intermittent or event-driven ETL workloads and enabling rapid, on-demand processing.

- Global Reach and Resilience: Cloud-native solutions offer global distribution capabilities, enabling data processing closer to the source and target systems, reducing latency. Built-in redundancy and disaster recovery features enhance the resilience and availability of ETL pipelines.

5. Implement Robust Monitoring and Alerting

Continuous monitoring is essential for maintaining a high-performing ETL Platform. Proactive identification and resolution of issues are key to ensuring data availability and processing speed.

- Track Pipeline Performance Metrics: Monitor key metrics such as processing times for each stage (extraction, transformation, loading), data volumes processed, resource utilization (CPU, memory, I/O), and error rates. Visual dashboards can provide real-time insights into pipeline health and performance trends.

- Set Up Proactive Anomaly Detection: Implement automated anomaly detection systems that can flag unusual patterns in data volume, processing duration, or data quality metrics. For instance, a sudden drop in extracted rows or an unexplained spike in processing time could indicate a problem. Many modern ETL Platforms integrate with AI/ML tools for predictive monitoring.

- Configure Intelligent Alerting: Establish thresholds for critical metrics and configure alerts (e.g., email, SMS, Slack notifications) for when these thresholds are breached or when pipeline failures occur. Prioritize alerts based on severity to ensure immediate attention to critical issues.

- Establish Data Lineage and Audit Trails: Maintain comprehensive logs and metadata for every ETL run, detailing source and target data, transformations applied, and any errors encountered. This provides crucial audit trails for compliance and simplifies debugging and root cause analysis. Data lineage tools integrated with the ETL Platform can visually map data flow, enhancing transparency.
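
As a simple illustration of the anomaly detection described above, the sketch below flags a run whose metric deviates sharply from recent history. It uses a basic z-score check rather than any AI/ML service; the `is_anomalous` helper, the threshold of 3.0, and the sample row counts are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, z_threshold=3.0):
    """Flag latest if it lies more than z_threshold standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly constant history
    return abs(latest - mu) / sigma > z_threshold

# Hypothetical extracted-row counts from the last five pipeline runs.
row_counts = [10_120, 9_980, 10_050, 10_200, 9_900]

sudden_drop = is_anomalous(row_counts, 4_200)    # far below the usual volume
normal_run = is_anomalous(row_counts, 10_100)    # within the usual range
```

The same check applies to processing duration or error rates. In practice the result would feed the alerting thresholds described above, and a production system would likely also account for seasonality, such as weekday versus weekend volumes.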

Challenges and Barriers to Adoption

While the benefits of optimized ETL platforms are clear, organizations often encounter significant hurdles in their implementation and continuous operation:

- Data Quality and Governance: Ensuring consistent data quality across diverse sources remains a major challenge. Poor source data can propagate errors throughout the ETL pipeline, leading to unreliable insights. Establishing robust data governance policies and automated data quality checks within the ETL Platform is critical but often complex.

- Complexity of Diverse Data Sources: Integrating data from a myriad of disparate systems, each with its own schema, data types, and access protocols, adds significant complexity. Developing and maintaining connectors for every source can be resource-intensive.

- Real-time Processing Demands: The shift from batch processing to real-time or near real-time data streaming requires a fundamental re-architecture of ETL pipelines, often necessitating new technologies and skill sets. Achieving low-latency data processing while maintaining data integrity is technically demanding.

- MLOps Complexity: For AI/ML-driven initiatives, ETL pipelines become part of a larger MLOps framework. Ensuring feature consistency, versioning, and continuous data delivery to machine learning models introduces additional layers of complexity related to data drift and model retraining.

- Cost Management: While cloud-native solutions offer scalability, managing cloud costs effectively requires careful monitoring and optimization. Inefficient resource provisioning or poorly optimized pipelines can lead to unexpectedly high cloud bills.

- Legacy System Integration: Many enterprises still rely on legacy on-premise systems that are difficult to integrate with modern cloud-based ETL platforms, often requiring custom development or specialized integration tools.

Business Value and ROI

Investing in an optimized ETL Platform and adhering to best practices yields substantial business value and a significant return on investment (ROI):

- Faster Time-to-Insight: By accelerating data processing, organizations can reduce the lag between data generation and insight generation, enabling quicker, more informed decision-making. This directly impacts agility and responsiveness to market changes.

- Improved Data Quality for AI: High-quality, timely data is the lifeblood of effective AI and Machine Learning models. A well-tuned ETL Platform ensures that models are trained and operate on clean, relevant data, leading to more accurate predictions and better business outcomes.

- Reduced Operational Costs: Automated, efficient ETL pipelines reduce manual effort, minimize errors, and optimize resource utilization, particularly in cloud environments. This translates to lower operational expenses and allows data engineers to focus on higher-value tasks.

- Enhanced Data Governance and Compliance: Robust monitoring, data lineage, and auditing features within an ETL Platform provide the necessary tools to meet regulatory compliance requirements (e.g., GDPR, CCPA) and maintain strong data governance practices.

- Scalability and Flexibility: Cloud-native ETL solutions offer unparalleled scalability, allowing businesses to seamlessly handle fluctuating data volumes and new data sources without significant capital expenditure. This flexibility supports rapid business growth and innovation.

- Competitive Advantage: Organizations that can quickly and reliably transform raw data into actionable intelligence gain a significant competitive edge, enabling them to identify market trends, personalize customer experiences, and optimize operations more effectively than their slower counterparts.

Comparative Insight: Modern ETL Platforms vs. Traditional Approaches

The landscape of data integration has evolved dramatically. Traditionally, ETL processes were often built using custom scripts, legacy on-premise tools, or manual processes. While these approaches served their purpose in simpler times, they present significant limitations in today’s data environment when compared to modern ETL Platforms following best practices.

- Scalability and Performance: Traditional, script-based ETL or older on-premise tools struggle with the sheer volume, velocity, and variety of modern data. They often rely on single-server architectures, leading to performance bottlenecks and lengthy batch windows. Modern ETL Platforms, especially cloud-native solutions, leverage distributed computing, parallel processing, and serverless architectures to handle petabytes of data with elastic scalability and significantly faster processing times. The ability to dynamically scale resources up or down on demand is a game-changer for variable workloads.

- Flexibility and Agility: Custom scripts are notoriously difficult to maintain, modify, and scale. Changes to source systems often require extensive re-coding. Legacy tools can be rigid and lack support for new data formats or cloud services. Modern ETL Platforms offer intuitive graphical interfaces, a wide array of pre-built connectors for various data sources and destinations (including SaaS applications and APIs), and often support both code-based and low-code/no-code development, making them far more agile and adaptable to evolving business needs.

- Data Governance and Quality: Manual ETL processes make it challenging to enforce data quality rules consistently or track data lineage. Errors can go undetected, leading to “garbage in, garbage out.” Modern ETL Platforms integrate robust data quality checks, metadata management, and automated data lineage tracking capabilities. They provide audit trails and version control, ensuring data integrity and simplifying compliance with regulatory requirements.

- Cost and Maintenance: On-premise ETL solutions require significant upfront investment in hardware, software licenses, and ongoing maintenance. Dedicated IT teams are needed to manage infrastructure. Cloud-native ETL Platforms shift this to an operational expenditure model, reducing initial costs and leveraging managed services to offload infrastructure management, freeing up internal teams to focus on data strategy. The pay-as-you-go model, combined with dynamic scaling, often leads to a lower total cost of ownership.

- Real-time Capabilities: Traditional ETL is predominantly batch-oriented, leading to delayed insights. Modern ETL Platforms are increasingly designed to support real-time data streaming and continuous data integration, enabling immediate data availability for operational analytics, fraud detection, and personalized customer experiences. A related differentiator is the shift from ETL to ELT (Extract, Load, Transform), in which data is loaded into a scalable data warehouse or data lake first and transformed there, maximizing raw data availability and leveraging the target system’s compute.

World2Data Verdict: The Future of Data Processing is Intelligent and Proactive

The trajectory for ETL Platforms is unequivocally towards greater intelligence, automation, and proactive self-optimization. Organizations must move beyond merely implementing best practices and embrace platforms that embed AI and Machine Learning directly into their core functions. The actionable recommendation from World2Data.com is to prioritize ETL Platforms that offer AI-driven capabilities for data quality automation, intelligent schema recommendations, predictive resource scaling, and anomaly detection. The future of data processing will be not just fast but also smart: predicting potential issues, automatically optimizing pipelines, and continuously adapting to changes in data patterns and business demands, thereby transforming the ETL Platform into a truly autonomous and strategic asset.