Hybrid Data Platform: Combining On-Prem and Cloud for Optimal Performance and Agility

Platform Category: Hybrid Data Management Platform

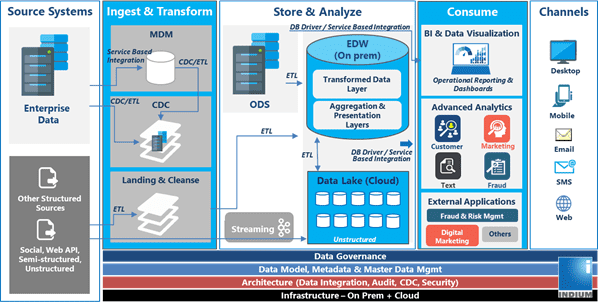

Core Technology/Architecture: Data virtualization, unified control plane, containerization (e.g., Kubernetes), API-driven integration, distributed data processing, multi-cloud integration

Key Data Governance Feature: Federated data governance, unified data catalog across environments, consistent security policies, centralized access management, compliance reporting

Primary AI/ML Integration: Distributed ML training and inference across on-prem and cloud, MLOps orchestration for hybrid deployments, leveraging cloud AI services with on-prem data, data science workbench

Main Competitors/Alternatives: Microsoft Azure (Azure Arc, Azure Stack HCI), Google Cloud (Anthos), Amazon Web Services (AWS Outposts), IBM Cloud Pak for Data, Oracle Exadata Cloud@Customer, HPE GreenLake

In today’s dynamic business landscape, organizations must manage large volumes of data residing both on-premises and across multiple cloud environments. A Hybrid Data Platform addresses this challenge by integrating these infrastructures and managing data consistently across them. The question is no longer which one to choose, but how to orchestrate a unified data strategy that combines the strengths of both, keeping data agile, secure, and scalable in an increasingly complex digital ecosystem.

Unlocking Agility and Strategic Integration with a Hybrid Data Platform

Modern enterprises need to respond quickly to market shifts and customer needs. A well-implemented Hybrid Data Platform supports this by bridging legacy on-premises systems and scalable cloud resources. Businesses can keep getting value from existing on-premises investments while adopting the flexibility of cloud computing, which improves resource utilization and shortens development cycles across the organization. This integration is also what keeps data consistent and workloads performant as they span both environments.

A robust Hybrid Data Platform keeps data consistent and accessible across all environments, preventing the silos and discrepancies that often plague distributed systems. It routes each workload to the most appropriate infrastructure: an on-premises data center for sensitive or low-latency tasks, or a public cloud for elastic compute. Placing workloads this way can reduce costs by avoiding over-provisioning for peak demand and can shorten processing times for critical applications and analytics. This dynamic routing and integration capability is also foundational for modern data architectures that need real-time insights from disparate data sources.
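To make the routing idea concrete, here is a minimal sketch of rule-based workload placement in Python. The `Workload` fields, the 20 ms latency threshold, and the 64 free on-premises cores are hypothetical values chosen for illustration; a real platform would draw them from its scheduler and policy catalog rather than from constants.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREM = "on-prem"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    sensitive: bool          # subject to data-residency or regulatory rules
    max_latency_ms: float    # latency budget the workload must meet
    cpu_cores: int           # compute the workload requests


# Hypothetical thresholds for illustration only.
LOW_LATENCY_MS = 20.0
ON_PREM_FREE_CORES = 64


def route(w: Workload, free_on_prem_cores: int = ON_PREM_FREE_CORES) -> Target:
    """Pick an execution target using simple, ordered placement rules."""
    # Rule 1: sensitive data never leaves the on-prem environment.
    if w.sensitive:
        return Target.ON_PREM
    # Rule 2: tight latency budgets stay close to the data, if capacity allows.
    if w.max_latency_ms <= LOW_LATENCY_MS and w.cpu_cores <= free_on_prem_cores:
        return Target.ON_PREM
    # Rule 3: everything else, including bursts beyond on-prem capacity, goes to the cloud.
    return Target.CLOUD


jobs = [
    Workload("patient-records-etl", sensitive=True, max_latency_ms=500, cpu_cores=16),
    Workload("fraud-scoring", sensitive=False, max_latency_ms=10, cpu_cores=8),
    Workload("model-training", sensitive=False, max_latency_ms=60_000, cpu_cores=512),
]
for job in jobs:
    print(job.name, "->", route(job).value)
```

The rules are evaluated in order, so a compliance constraint (rule 1) always outranks performance or capacity considerations. With the sample jobs, the sensitive ETL and the latency-bound scoring job stay on-premises, while the 512-core training job bursts to the cloud.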

Core Breakdown: Architecture, Challenges, and Business Value of a Hybrid Data Platform

The value a Hybrid Data Platform delivers rests on its architecture. At its core, a hybrid data architecture combines the stability and control of on-premises infrastructure with the elasticity and innovation of public cloud services, creating a cohesive environment for data management and analytics.

Architectural Components and Core Technologies

A robust Hybrid Data Platform relies on several key architectural components and technologies to bridge the gap between disparate environments:

- Data Virtualization: This technology creates a unified, logical view of data across multiple physical sources (on-prem, private cloud, public cloud) without first copying the data into a central store. It abstracts the underlying complexity, allowing users and applications to query data as if it came from a single source. Because queries run against current source data rather than a periodically refreshed copy, virtualization supports near-real-time analytics, and avoiding bulk replication can reduce data egress costs.

- Unified Control Plane: A central management layer provides consistent operations, monitoring, and policy enforcement across all hybrid environments. This control plane often leverages container orchestration platforms like Kubernetes, enabling applications and data services to be deployed, managed, and scaled uniformly, regardless of where they reside.

- Containerization (e.g., Kubernetes): Containers encapsulate applications and their dependencies, ensuring they run consistently across any environment. Kubernetes acts as the orchestrator, automating the deployment, scaling, and management of containerized workloads, making it a cornerstone for portability in a hybrid setup.

- API-driven Integration: Extensive use of APIs allows for seamless and programmatic interaction between services, applications, and data sources across the hybrid landscape. This facilitates automated data pipelines, service mesh architectures, and robust communication channels.

- Distributed Data Processing: Technologies like Apache Spark, Hadoop, or cloud-native distributed processing services enable large-scale data ingestion, transformation, and analysis across geographically dispersed or hybrid data stores, leveraging the strengths of both on-prem compute and cloud scalability.

- Multi-cloud Integration: The platform is designed not just for on-prem-to-cloud but also for interoperability across multiple public cloud providers, preventing vendor lock-in and maximizing flexibility. This includes consistent networking, identity management, and data transfer mechanisms.
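The data virtualization idea can be illustrated on a small scale with Python's built-in `sqlite3` module. This is only an analogy, not how a virtualization product is built: two attached in-memory databases stand in for separate physical sources, and a `TEMP VIEW` provides one logical view across them without copying rows into a new table. The schema names `onprem` and `cloud` and the sample tables are hypothetical.

```python
import sqlite3

# One connection acts as the "virtualization layer". Two attached in-memory
# databases stand in for separate physical sources (hypothetical names).
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS onprem")
conn.execute("ATTACH DATABASE ':memory:' AS cloud")

conn.execute("CREATE TABLE onprem.customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT)")
conn.execute("CREATE TABLE cloud.orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO onprem.customers VALUES (?, ?, ?)",
                 [(1, "Acme", "EU"), (2, "Globex", "US")])
conn.executemany("INSERT INTO cloud.orders VALUES (?, ?, ?)",
                 [(10, 1, 250.0), (11, 1, 100.0), (12, 2, 75.5)])

# A logical view spanning both sources; no rows are copied into a new table.
conn.execute("""
    CREATE TEMP VIEW customer_revenue AS
    SELECT c.name, c.region, SUM(o.amount) AS revenue
    FROM onprem.customers AS c
    JOIN cloud.orders AS o ON o.customer_id = c.id
    GROUP BY c.id
""")

rows = conn.execute(
    "SELECT name, region, revenue FROM customer_revenue ORDER BY name"
).fetchall()
print(rows)  # [('Acme', 'EU', 350.0), ('Globex', 'US', 75.5)]
```

SQLite permits a temporary view to reference tables in any attached database, which is why the view is declared `TEMP`. In a real hybrid platform the sources would be remote systems reached through connectors, and the virtualization layer would typically push filters down to each source to limit how much data crosses the network.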

Challenges and Barriers to Adoption

Despite its compelling advantages, implementing a Hybrid Data Platform presents several challenges:

- Data Gravity and Latency: Moving large datasets between on-premises and cloud environments can be slow and expensive, especially for latency-sensitive applications. Data gravity dictates that it’s often easier to move compute to data than data to compute.

- MLOps Complexity: Managing the entire Machine Learning Operations (MLOps) lifecycle—from data preparation and model training to deployment and monitoring—across a hybrid environment adds significant complexity. Ensuring consistent tooling, pipelines, and governance for ML models across distributed infrastructure is a non-trivial task.

- Security and Compliance: Maintaining consistent security policies, access controls, and compliance standards (like GDPR, HIPAA, or local data sovereignty laws) across diverse environments is incredibly challenging. Data encryption, identity management, and audit trails must be harmonized.

- Operational Overhead and Skills Gap: Operating a hybrid environment requires specialized skills in both on-premises infrastructure management and cloud computing. The operational complexity can increase if the tools and processes are not unified.

- Data Governance and Lineage: Establishing a unified data catalog and ensuring consistent data governance, quality, and lineage tracking across hybrid data sources is critical yet difficult to achieve without specialized tools.

- Cost Management: While intended to optimize costs, a poorly managed hybrid environment can lead to unexpected expenses due to inefficient resource allocation, data egress fees, or shadow IT.
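The data-gravity challenge lends itself to back-of-the-envelope arithmetic. The sketch below assumes a 10 TB dataset held in a cloud region, an on-premises job that needs it, a 1 Gbps link, and a placeholder egress rate of $0.05/GB; real egress pricing varies by provider, region, and volume, so substitute your own figures. It compares pulling the data down with pushing a 2 GB container image up to run next to the data.

```python
# Example figures (assumptions, not measurements).
DATASET_GB = 10_000      # 10 TB dataset stored in a cloud region
IMAGE_GB = 2             # container image that packages the processing job
LINK_GBPS = 1.0          # hypothetical on-prem <-> cloud link, gigabits per second
EGRESS_PER_GB = 0.05     # placeholder egress price in USD per GB


def transfer_seconds(size_gb: float, bandwidth_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move size_gb (decimal gigabytes) over a link at the given utilization."""
    bits = size_gb * 8e9
    return bits / (bandwidth_gbps * 1e9 * efficiency)


def egress_cost(size_gb: float, price_per_gb: float) -> float:
    """Provider charge for moving size_gb out of the cloud at a flat per-GB rate."""
    return size_gb * price_per_gb


data_s = transfer_seconds(DATASET_GB, LINK_GBPS)
image_s = transfer_seconds(IMAGE_GB, LINK_GBPS)
print(f"Move data to compute: {data_s / 3600:.1f} h, "
      f"${egress_cost(DATASET_GB, EGRESS_PER_GB):,.2f} egress")
print(f"Move compute to data: {image_s:.0f} s")
```

Even at full line rate the dataset takes about 22 hours to move, while the job image moves in about 16 seconds. Real links rarely sustain 100% utilization (try `efficiency=0.8`), which only widens the gap and is why scheduling compute where the data already lives is usually the better default.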

Business Value and ROI

When effectively implemented, a Hybrid Data Platform delivers significant business value and a strong return on investment:

- Faster Model Deployment and Innovation: By providing a unified data environment and MLOps capabilities, organizations can accelerate the development, training, and deployment of AI/ML models. This leads to quicker time-to-market for new data products and services, fostering continuous innovation.

- Optimized Resource Utilization and Cost Efficiency: Bursting workloads to the cloud during peak demand means on-premises capacity does not have to be sized for the peak, which reduces capital expenditure, while keeping sensitive or steady workloads on-premises helps contain operating expenses. Together, these optimize IT resource allocation.

- Enhanced Data Quality for AI: By consolidating and governing data across environments, the platform improves data quality and consistency, which are fundamental for accurate, reliable AI/ML model training and decision-making.

- Improved Security and Compliance Posture: Granular control over data placement allows sensitive information to remain in secure on-premises environments, simplifying compliance with stringent regulatory requirements while still leveraging cloud agility for less sensitive data.

- Business Continuity and Disaster Recovery: A hybrid approach offers robust disaster recovery capabilities by replicating data and workloads across diverse environments, ensuring higher availability and resilience against outages.

- Data Sovereignty and Locality: Organizations can meet data residency requirements by keeping specific data within certain geographical boundaries or on-premises, while still benefiting from cloud elasticity for processing or less sensitive data.

- Legacy Modernization: It allows organizations to gradually migrate legacy applications and data to the cloud without a disruptive “big bang” approach, preserving existing investments while incrementally adopting modern cloud practices.
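The bursting argument behind the cost-efficiency point can be quantified with a toy model. Assuming a hypothetical eight-hour demand profile (in CPU cores) and 100 cores of on-premises capacity, the sketch splits demand between on-premises and cloud and compares on-premises utilization against sizing the data center for the peak. Prices are deliberately left out; only capacity and core-hours are modeled.

```python
def split_demand(hourly_demand: list[int], on_prem_capacity: int) -> tuple[int, int]:
    """Return (on_prem_core_hours, cloud_core_hours) when excess demand bursts to the cloud."""
    on_prem = sum(min(d, on_prem_capacity) for d in hourly_demand)
    cloud = sum(max(d - on_prem_capacity, 0) for d in hourly_demand)
    return on_prem, cloud


def utilization(used_core_hours: int, capacity: int, hours: int) -> float:
    """Fraction of provisioned on-prem core-hours that actually did work."""
    return used_core_hours / (capacity * hours)


# Hypothetical hourly demand in cores, with a short afternoon spike.
demand = [40, 45, 50, 120, 180, 90, 50, 40]
hours = len(demand)

on_prem, cloud = split_demand(demand, on_prem_capacity=100)
print(f"Hybrid (100 cores + burst): {on_prem} on-prem core-h, {cloud} cloud core-h, "
      f"utilization {utilization(on_prem, 100, hours):.1%}")

peak = max(demand)
print(f"On-prem sized for peak ({peak} cores): "
      f"utilization {utilization(sum(demand), peak, hours):.1%}")
```

With burst capacity, the on-premises cores do useful work about 64% of the time, versus about 43% if the data center is sized for the 180-core peak. The 100 overflow core-hours are the portion that would be billed by a cloud provider instead of bought as hardware.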

Comparative Insight: Hybrid Data Platform vs. Traditional Data Lakes/Warehouses

To understand what a Hybrid Data Platform adds, it helps to compare it with traditional data management paradigms like data lakes and data warehouses. While these older models served their purpose, they often struggle to meet the demands of modern, distributed data environments.

Traditional Data Warehouses

Data warehouses are highly structured, centralized repositories designed for reporting and analytics. They excel at processing structured data, ensuring high data quality and consistency, and supporting well-defined business intelligence queries. However, traditional warehouses are typically expensive to scale, handle unstructured or semi-structured data poorly, and were primarily designed for on-premises deployment. This makes them rigid and slow to adapt to new data sources or analytical requirements, especially the real-time or massive-scale data streams common in today’s cloud-native applications.

Traditional Data Lakes

Data lakes, on the other hand, are vast repositories that store raw, unstructured, semi-structured, and structured data at scale, often in their native format. They offer immense flexibility for exploratory analytics, machine learning, and big data processing. However, without strong governance, data lakes can quickly devolve into “data swamps,” making it difficult to find, trust, and utilize data effectively. Furthermore, traditional data lakes often reside either entirely on-premises or entirely in a single cloud, lacking the inherent flexibility to bridge these environments seamlessly or manage data across multiple public clouds with unified governance and security.

The Hybrid Data Platform Advantage

A Hybrid Data Platform addresses the limitations of both. It is not a replacement but an evolution that builds on their strengths while adding flexibility and integration:

- Unified Access, Diverse Storage: Unlike a single-location data warehouse or lake, a hybrid platform provides a unified access layer to data that can physically reside anywhere—on-prem, private cloud, or multiple public clouds. This allows organizations to choose the optimal storage and compute location based on cost, performance, latency, security, and compliance needs.

- Intelligent Workload Placement: It allows for dynamic workload placement, routing data processing and analytical tasks to the most appropriate environment. For instance, sensitive customer data might be processed on-premises for regulatory compliance, while large-scale, burstable AI model training might occur in the cloud.

- Consistent Governance and Security: A major challenge for traditional models stretched across environments is maintaining consistent security and governance. A hybrid platform, through its unified control plane and federated governance features, ensures uniform policy enforcement, data cataloging, and access management across all data locations, a capability largely absent in fragmented data lake/warehouse deployments.

- Operational Efficiency: By integrating diverse environments under a single operational umbrella, a hybrid platform reduces the complexity and operational overhead associated with managing separate data silos. This contrasts sharply with the often disjointed management required for standalone data lakes and warehouses.

- Scalability and Flexibility: While data lakes offer scalability, a hybrid platform extends it by letting businesses draw on elastic cloud capacity on demand (within provider quotas and budgets), without massive on-premises infrastructure investments. This “bursting” capability is not naturally present in traditional, fixed-capacity data environments.

- Support for Modern AI/ML Workloads: Traditional systems often require significant re-engineering to support distributed AI/ML training and inference. A Hybrid Data Platform is designed with MLOps orchestration and distributed compute capabilities built-in, seamlessly integrating cloud AI services with on-prem data.
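The consistent-governance point can be sketched as a single attribute-based access rule evaluated against a catalog whose entries live in different environments. Everything here is hypothetical: the classification levels, the residency rule that restricted data is readable only by EU-based users, the location labels, and the catalog entries. Production platforms typically delegate this to a dedicated policy engine, but the principle is the same: one policy, applied identically wherever the data is stored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    location: str          # illustrative labels, e.g. "on-prem", "aws-eu", "gcp-us"
    classification: str    # "public", "internal", or "restricted"


@dataclass(frozen=True)
class User:
    name: str
    clearance: str         # highest classification the user may read
    region: str            # hypothetical attribute used for residency checks


LEVELS = {"public": 0, "internal": 1, "restricted": 2}

# Hypothetical residency rule: restricted data is readable only from these regions.
RESTRICTED_ALLOWED_REGIONS = {"EU"}


def can_read(user: User, ds: Dataset) -> bool:
    """One policy, applied identically regardless of where the dataset is stored."""
    if LEVELS[user.clearance] < LEVELS[ds.classification]:
        return False
    if ds.classification == "restricted" and user.region not in RESTRICTED_ALLOWED_REGIONS:
        return False
    return True


# A federated catalog: entries point at data in different environments.
CATALOG = [
    Dataset("customer_pii", "on-prem", "restricted"),
    Dataset("web_clickstream", "aws-eu", "internal"),
    Dataset("product_catalog", "gcp-us", "public"),
]


def readable(user: User) -> list[str]:
    return [ds.name for ds in CATALOG if can_read(user, ds)]


analyst = User("ana", clearance="internal", region="US")
steward = User("sam", clearance="restricted", region="EU")
print(readable(analyst))  # ['web_clickstream', 'product_catalog']
print(readable(steward))  # ['customer_pii', 'web_clickstream', 'product_catalog']
```

Note that `can_read` never inspects `Dataset.location`; that independence is what makes the policy uniform across on-premises and cloud stores.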

In essence, while data lakes and warehouses are foundational data repositories, a Hybrid Data Platform acts as the intelligent orchestration layer that makes these repositories interoperable, governable, and performant across a fluid, multi-environment landscape. It’s the architecture that truly enables an enterprise to operate as a data-driven organization in the cloud era, without abandoning existing investments.

World2Data Verdict

The journey towards an optimized data strategy in the modern enterprise unequivocally points to the Hybrid Data Platform as the indispensable architecture. World2Data.com believes that organizations that embrace this integrated approach will not only survive but thrive in the face of escalating data volumes and increasingly stringent regulatory demands. The future of data management is not about choosing cloud over on-prem, or vice-versa, but about intelligently orchestrating these environments to create a seamless, resilient, and highly performant data fabric.

Our recommendation is clear: enterprises must prioritize investing in a Hybrid Data Platform that offers a truly unified control plane, robust data virtualization, and comprehensive MLOps integration capabilities. Focus on solutions that empower federated data governance and a unified data catalog, as these are critical for maintaining trust and compliance across your distributed data estate. Moving forward, the competitive edge will belong to those who master the art of blending the stability of on-premises control with the agility and innovation of the cloud, thereby unlocking the full potential of their data assets.