Unleashing Potential: AI for Real-Time Big Data Analytics

In a world saturated with information, the capacity to process, interpret, and act on big data in real time using artificial intelligence gives organizations a significant competitive advantage. This deep dive explores how AI Real-Time Big Data Analytics lets businesses move beyond retrospective analysis and respond immediately to rapidly evolving situations. The approach is changing how businesses use information, making near-instant insight the expected standard.

Introduction: The Imperative of Instant Insights with AI

The digital age has ushered in an era where data is generated at an unprecedented velocity, volume, and variety. Traditional batch processing methods, once sufficient for historical reporting, fall short in environments demanding immediate decision-making. This is where AI Real-Time Big Data Analytics emerges as a transformative force. It’s not merely about processing data quickly; it’s about applying advanced artificial intelligence and machine learning algorithms to live data streams to extract actionable intelligence the moment it becomes relevant. This article will delve into the technical architecture, business implications, challenges, and the undeniable value proposition of integrating AI with real-time big data.

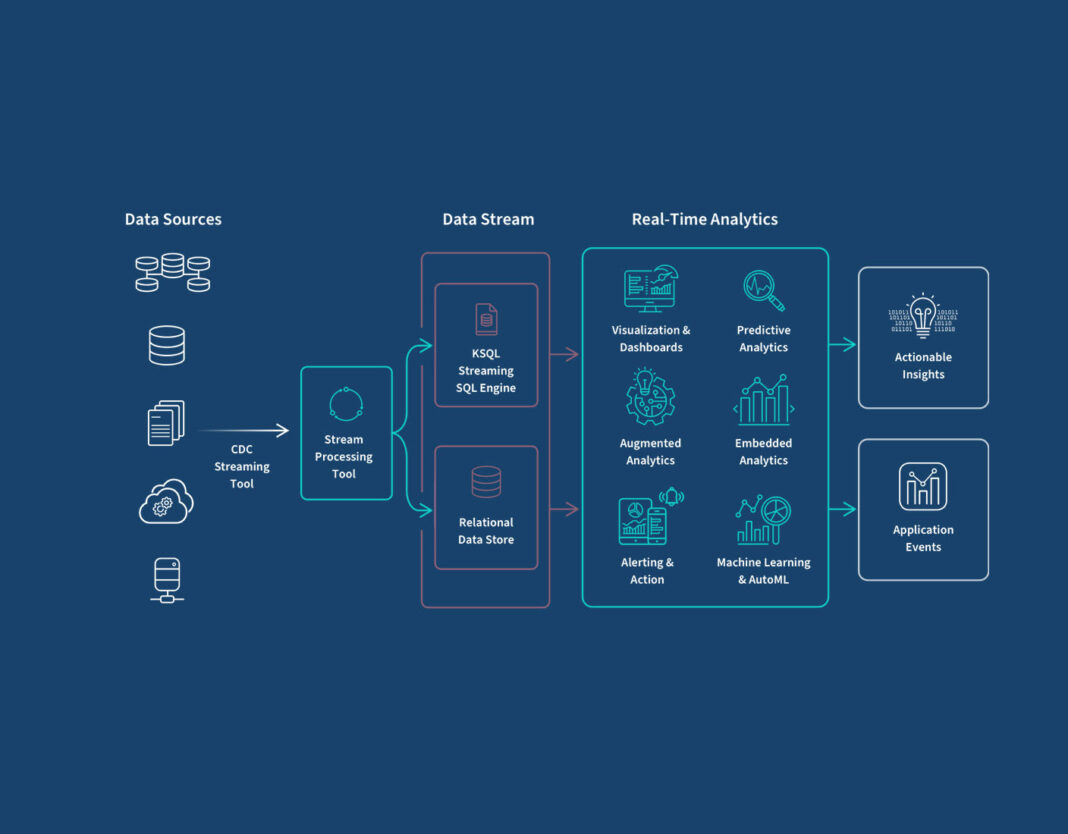

Core Breakdown: Architecting Intelligence into Data Streams

At its heart, AI Real-Time Big Data Analytics leverages sophisticated technical architectures to handle the unique demands of high-velocity data. The foundation often rests on a Stream Processing Platform, designed to ingest, process, and analyze continuous streams of data events as they occur, rather than storing them first for batch analysis. Key to this is an Event-Driven Architecture, where systems react to discrete events in real-time, enabling immediate data flow and processing. This paradigm ensures that insights are generated and acted upon without significant delay.
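As a minimal sketch of the event-driven idea, the Python below dispatches each event to its handlers the moment it is published instead of storing it for a later batch. The `EventBus` class, its `subscribe` and `publish` methods, the event fields, and the 1000.0 threshold are all illustrative assumptions, standing in for a real stream processing platform rather than reproducing any product's API.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process stand-in for a stream processing platform."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: Event) -> None:
        # Dispatch immediately; nothing is stored for a later batch job.
        for handler in self._handlers[event["type"]]:
            handler(event)


alerts: List[str] = []
running_total = {"amount": 0.0}


def update_total(event: Event) -> None:
    running_total["amount"] += event["amount"]


def flag_large_payment(event: Event) -> None:
    if event["amount"] > 1000.0:  # illustrative threshold, not from the article
        alerts.append(event["id"])


bus = EventBus()
bus.subscribe("payment", update_total)
bus.subscribe("payment", flag_large_payment)

for evt in [
    {"type": "payment", "id": "p1", "amount": 40.0},
    {"type": "payment", "id": "p2", "amount": 2500.0},
    {"type": "login", "id": "l1"},  # no handler registered, so it is ignored
]:
    bus.publish(evt)
```

In a production system the bus would be a durable, distributed log and the handlers would run in a stream processor, but the control flow (react per event, keep only the state you need) is the same.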

A prevalent architectural pattern in this domain is the Kappa Architecture, which simplifies the traditional Lambda architecture by replacing its separate batch and speed layers with a single stream processing engine. All data passes through the same stream processing layer, and historical reprocessing is done by replaying the retained event log through that layer, which makes data consistency and system management more straightforward. Complementing this is In-memory Computing, which keeps working data in RAM rather than on disk during processing. This drastically reduces latency, allowing computations to run at the speeds real-time applications require.
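The Kappa idea can be sketched in a few lines, assuming a plain Python list as a stand-in for a durable, append-only event log. The `PageViewCounter` class and the page-view events are hypothetical. The point is that live processing and historical reprocessing share one code path, and that the working state is held in memory.

```python
from typing import Dict, List, Tuple

LogRecord = Tuple[str, int]  # (page, views): an illustrative event shape


class PageViewCounter:
    """One processing path, used for both live events and replays."""

    def __init__(self) -> None:
        self.counts: Dict[str, int] = {}  # working state kept in memory

    def apply(self, record: LogRecord) -> None:
        page, views = record
        self.counts[page] = self.counts.get(page, 0) + views


log: List[LogRecord] = []  # stand-in for a durable, append-only event log
live = PageViewCounter()

for record in [("home", 1), ("pricing", 1), ("home", 1)]:
    log.append(record)   # the event is retained in the log
    live.apply(record)   # and processed as soon as it arrives

# Reprocessing (for example after a logic change) replays the log from the
# start through the same code instead of running a separate batch job.
rebuilt = PageViewCounter()
for record in log:
    rebuilt.apply(record)
```

Because both results come from the same `apply` method, there is no second batch implementation that could drift out of sync with the streaming one.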

Data governance and quality are paramount, even at high speeds. A robust Schema Registry plays a vital role by centrally managing and enforcing schemas for data streams, ensuring compatibility and consistency across various data producers and consumers. Coupled with this, Real-time Data Lineage provides an auditable trail of data as it moves through the system, crucial for compliance, debugging, and understanding the origin and transformations of data in real-time. This transparency is essential for building trust in AI-driven insights.
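To make the governance ideas concrete, here is a small sketch assuming a hypothetical in-memory `SchemaRegistry` and a `with_lineage` helper. Neither mirrors a real registry's API. The sketch only shows the two behaviours described above: rejecting records that do not match the registered schema, and carrying an auditable trail of processing steps with each record.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


class SchemaRegistry:
    """Hypothetical in-memory registry mapping a stream name to its schema."""

    def __init__(self) -> None:
        self._schemas: Dict[str, Dict[str, type]] = {}

    def register(self, subject: str, schema: Dict[str, type]) -> None:
        self._schemas[subject] = dict(schema)

    def validate(self, subject: str, record: Record) -> List[str]:
        """Return a list of violations; an empty list means the record conforms."""
        errors: List[str] = []
        for field, expected_type in self._schemas[subject].items():
            if field not in record:
                errors.append(f"missing field: {field}")
            elif not isinstance(record[field], expected_type):
                errors.append(f"wrong type for field: {field}")
        return errors


def with_lineage(record: Record, step: str) -> Record:
    """Return a copy of the record with the processing step appended to its trail."""
    trail = list(record.get("_lineage", [])) + [step]
    return {**record, "_lineage": trail}


registry = SchemaRegistry()
registry.register("orders", {"order_id": str, "amount": float})

good = {"order_id": "o-1", "amount": 19.99}
bad = {"order_id": "o-2", "amount": "19.99"}  # amount sent as a string

good_errors = registry.validate("orders", good)
bad_errors = registry.validate("orders", bad)
tracked = with_lineage(with_lineage(good, "ingest"), "validate")
```

Here `tracked["_lineage"]` records the path the event took through the pipeline, while the original `good` record is left unmodified.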

The AI component is integrated through two primary mechanisms: Real-time Model Inference and Online Machine Learning. Real-time model inference involves deploying pre-trained machine learning models to score or classify incoming data streams instantly, delivering predictions or recommendations in milliseconds. For example, a fraud detection model can flag a suspicious transaction the moment it occurs. Online Machine Learning, on the other hand, allows models to continuously learn and adapt from new, incoming data streams. This is crucial for environments where data patterns evolve rapidly (e.g., changing customer preferences, emerging cyber threats), enabling models to maintain accuracy without requiring periodic retraining cycles that could introduce stale insights.
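The two mechanisms can be illustrated together with a small online logistic regression, written here from scratch as an assumption-laden sketch rather than any particular framework's API. `predict_proba` plays the role of real-time inference, and `learn_one` is the online update applied once the true label is known. The single feature, the synthetic stream, and the learning rate are all made up for illustration.

```python
import math
from typing import List, Sequence, Tuple


class OnlineLogisticRegression:
    """Logistic regression updated one event at a time with gradient descent."""

    def __init__(self, n_features: int, learning_rate: float = 0.5) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: Sequence[float]) -> float:
        # Real-time inference: score a single incoming event.
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x: Sequence[float], y: int) -> None:
        # Online learning: one gradient step on the log loss for this event.
        error = self.predict_proba(x) - y
        self.weights = [
            w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


# Synthetic stream: one scaled feature, label 1 marks a suspicious event.
stream: List[Tuple[List[float], int]] = [([0.1], 0), ([0.9], 1)] * 200

model = OnlineLogisticRegression(n_features=1)
for features, label in stream:
    score = model.predict_proba(features)  # score the event on arrival
    model.learn_one(features, label)       # adapt once the label is known

low_risk = model.predict_proba([0.1])
high_risk = model.predict_proba([0.9])
```

In practice labels usually arrive later than the event itself (a chargeback, for instance), so the scoring and learning steps would be decoupled. The sketch feeds them back immediately only to keep the loop short.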

Challenges and Barriers to Adoption

Despite its immense promise, implementing AI Real-Time Big Data Analytics comes with significant hurdles. One of the primary challenges is managing the sheer **data velocity and volume** without compromising processing speed or data quality. Ensuring data integrity and accuracy as it flows through complex pipelines requires robust validation and error handling mechanisms. **Data quality** itself, particularly for unstructured or semi-structured real-time data, presents a continuous battle, as garbage in leads to garbage out, even at incredible speeds.

Another major barrier is **system complexity and integration**. Building and maintaining an event-driven, stream-processing architecture with integrated AI components demands specialized skills and a solid understanding of distributed systems. Integrating technologies such as Kafka, Flink, Spark Streaming, and AI frameworks into one coherent pipeline can be daunting. Furthermore, **latency requirements** are strict: end-to-end budgets are commonly measured in milliseconds, and the most demanding workloads target sub-millisecond responses. These budgets constrain hardware, network, and software choices, adding to architectural complexity and cost.
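One practical way to reason about a latency requirement is to check tail percentiles against a budget, since an average can hide slow outliers. The sketch below assumes synthetic per-event latencies and a made-up 50 ms budget. `nearest_rank_percentile` and `latency_report` are illustrative helper names, and the nearest-rank method is just one common percentile definition.

```python
from typing import Dict, List, Union


def nearest_rank_percentile(sorted_values: List[float], percentile: int) -> float:
    """Return the nearest-rank percentile (1-100) of an ascending list."""
    if not sorted_values:
        raise ValueError("no latency samples")
    # Integer ceiling of percentile * n / 100 avoids floating-point rounding.
    rank = max(1, (percentile * len(sorted_values) + 99) // 100)
    return sorted_values[rank - 1]


def latency_report(
    latencies_ms: List[float], budget_ms: float
) -> Dict[str, Union[float, bool]]:
    """Summarise median and tail latency and compare the tail to a budget."""
    ordered = sorted(latencies_ms)
    p50 = nearest_rank_percentile(ordered, 50)
    p99 = nearest_rank_percentile(ordered, 99)
    return {"p50_ms": p50, "p99_ms": p99, "within_budget": p99 <= budget_ms}


# Synthetic per-event latencies in milliseconds: 1.0, 2.0, ..., 100.0.
samples = [float(ms) for ms in range(1, 101)]
report = latency_report(samples, budget_ms=50.0)  # assumed budget
```

With these samples the median sits at the budget while the 99th percentile is far above it, which is the kind of gap a mean-only dashboard would miss.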

**Model drift** is a particularly acute challenge in real-time AI. As underlying data distributions change over time (e.g., consumer behavior shifts, new fraud patterns emerge), deployed models can become less accurate. Continuous monitoring of model performance, together with online learning or rapid retraining and redeployment (a key aspect of MLOps), is essential but difficult to achieve in production environments. Finally, the **cost of real-time infrastructure** – from high-performance computing to specialized databases and networking – can be substantial, requiring a clear ROI justification.
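A simple form of drift monitoring compares accuracy over a rolling window of recent predictions with a baseline measured at deployment. The sketch below assumes that true labels eventually become available. `DriftMonitor`, the window size, the baseline, and the tolerance are all hypothetical choices, and production systems often track input distributions as well as accuracy.

```python
from collections import deque
from typing import Deque, List


class DriftMonitor:
    """Flags drift when rolling accuracy falls below baseline minus tolerance."""

    def __init__(self, window: int, baseline_accuracy: float, tolerance: float) -> None:
        self._window = window
        self._outcomes: Deque[bool] = deque(maxlen=window)
        self._threshold = baseline_accuracy - tolerance

    def record(self, prediction: int, actual: int) -> bool:
        """Record one labelled prediction; return True if drift is suspected."""
        self._outcomes.append(prediction == actual)
        if len(self._outcomes) < self._window:
            return False  # not enough evidence yet
        accuracy = sum(self._outcomes) / len(self._outcomes)
        return accuracy < self._threshold


monitor = DriftMonitor(window=10, baseline_accuracy=0.9, tolerance=0.15)

flags: List[bool] = []
flags += [monitor.record(1, 1) for _ in range(10)]  # model is correct
flags += [monitor.record(1, 0) for _ in range(5)]   # behaviour shifts, model is wrong

first_alert = flags.index(True)
```

A `True` result would typically trigger an alert or a retraining job. The tolerance trades off sensitivity against false alarms.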

Business Value and ROI of AI Real-Time Big Data Analytics

The integration of AI into real-time big data analytics pipelines significantly boosts operational efficiency across various sectors. From optimizing supply chain logistics to managing energy grids, AI-driven insights facilitate automatic adjustments and resource allocation. This leads to reduced downtime, optimized performance, and substantial cost savings through intelligent, immediate actions. Here are some key business values:

- Faster Model Deployment and Adaptation: The architecture supports agile deployment of AI models, enabling businesses to quickly put new predictive capabilities into action and adapt to market changes. Online ML ensures models remain relevant and accurate.

- Superior Predictive Power and Anomaly Detection: Applying AI to real-time data streams gives organizations stronger predictive capabilities. Machine learning models analyze live data flows to anticipate market shifts, equipment failures, or changes in customer behavior before they fully materialize. The same systems can spot subtle anomalies indicative of fraud or cyber threats as they emerge.

- Enhanced Operational Efficiency: Streamlined business processes and automated decision-making are direct outcomes. In manufacturing, predictive maintenance can prevent costly breakdowns. In finance, real-time fraud detection significantly reduces losses.

- Driving Personalized Customer Experiences: In competitive markets, understanding and responding to individual customer needs in real-time is paramount. AI Real-Time Big Data Analytics enables businesses to deliver highly personalized experiences. By analyzing live browsing patterns, purchase histories, and interactions, AI can dynamically adjust recommendations, present relevant offers, and personalize user interfaces on the fly, leading to higher engagement and conversion rates.

- Future-Proofing Business Strategies: Data sources and business challenges keep evolving, which calls for an agile analytics framework. A real-time AI analytics capability helps organizations stay proactive and resilient as conditions change, supporting a durable edge in fast-moving industries.

- Compliance and Risk Management: Real-time data lineage and strong governance features aid in meeting regulatory requirements and provide immediate visibility into potential risks.
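The anomaly detection mentioned in the list above can be sketched with a streaming z-score: the detector keeps a running mean and variance (Welford's algorithm) and flags a value that lies far from what it has seen so far. The class name, the threshold of 3 standard deviations, the warm-up length, and the sensor readings are assumptions for illustration. Real fraud or threat detection would use richer features and models.

```python
import math
from typing import List


class StreamingZScoreDetector:
    """Flags values far from the running mean, using constant memory."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold
        self.warmup = warmup
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self._m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford's update. For simplicity, flagged values also update the
        # statistics; a stricter detector might exclude them.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)
        return is_anomaly


readings = [20.0, 21.0, 19.0, 20.5, 19.5, 20.0, 55.0, 20.2]  # synthetic sensor data
detector = StreamingZScoreDetector()
anomalies: List[float] = [r for r in readings if detector.observe(r)]
```

Because the detector stores only three numbers, it can run inline in a stream processor without buffering history.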

Comparative Insight: AI Real-Time Analytics vs. Traditional Data Architectures

To truly appreciate the power of AI Real-Time Big Data Analytics, it’s essential to compare it with traditional data processing paradigms, namely the Data Lake and Data Warehouse. While these established architectures remain foundational for many enterprises, their core design principles often prioritize data storage and retrospective batch analysis over immediate action and intelligent prediction.

A **Traditional Data Warehouse** is optimized for structured, historical data, primarily for reporting and business intelligence. Data is typically loaded in batches (ETL processes), cleaned, and transformed into a predefined schema. While excellent for complex queries on historical trends, it inherently suffers from latency. Decisions made using a data warehouse are based on data that is hours or even days old, making it unsuitable for scenarios demanding instantaneous responses. Integrating AI models for real-time inference is often an add-on, requiring external systems and sacrificing the integrated, low-latency nature of a purpose-built real-time AI system.

The **Data Lake** offers greater flexibility, storing vast amounts of raw, multi-structured data without a predefined schema. It’s excellent for exploratory analytics, machine learning model training, and storing historical data at scale. However, raw data in a data lake often requires significant processing before it’s ready for analysis, which introduces latency. While data lakes can be augmented with real-time ingestion capabilities, their native analytics processing is often still batch-oriented (e.g., Spark batch jobs). Converting vast, raw data into real-time actionable intelligence with integrated AI is not the data lake’s primary design strength: on its own, it lacks the built-in stream processing, event-driven architecture, and low-latency inference capabilities that define modern AI Real-Time Big Data Analytics platforms.

In contrast, an **AI Real-Time Big Data Analytics** platform (often built upon technologies like Apache Kafka for streaming, Apache Flink or Spark Streaming for processing, and specialized databases for low-latency lookups) is designed from the ground up for speed and intelligence. Its **Event-Driven Architecture** ensures data is processed as it arrives, rather than waiting for batches. **In-memory Computing** capabilities dramatically reduce processing delays. Crucially, the integration of **Real-time Model Inference** and **Online Machine Learning** directly into the stream processing pipeline means that AI-driven insights are generated and acted upon in milliseconds. This fundamental shift from “analysis after the fact” to “intelligence in the moment” enables proactive decision-making, immediate anomaly detection, and dynamic personalization that traditional architectures simply cannot match.

While Data Lakes and Data Warehouses serve their purposes for historical analysis and large-scale data storage, they are not optimized for the instantaneity and adaptive intelligence that AI Real-Time Big Data Analytics provides. Modern enterprises increasingly require a hybrid approach, where a real-time AI analytics layer complements existing data infrastructure, extracting immediate value from live data streams while feeding processed insights back to the data lake or warehouse for long-term storage and deeper historical analysis. The former provides the agility and foresight; the latter provides the comprehensive historical context.

World2Data Verdict: The Unstoppable Momentum of Real-Time AI

The trajectory for AI Real-Time Big Data Analytics is clear and compelling: it is rapidly transitioning from a competitive advantage to an operational necessity. As data velocity continues to accelerate across industries, the ability to derive instant, intelligent insights will differentiate market leaders from followers. World2Data believes that organizations must invest strategically in building robust Stream Processing Platforms leveraging Event-Driven Architecture, integrating advanced **Real-time Model Inference** and **Online Machine Learning** capabilities. The focus should be on scalable, resilient systems with strong **Schema Registry** and **Real-time Data Lineage** for governance. Enterprises that proactively embrace this paradigm will not only unlock unprecedented operational efficiencies and personalized customer experiences but also establish a foundation for continuous innovation and sustained market leadership in a data-driven world. The future of data is not just big; it’s instant, intelligent, and relentlessly adaptive.