AI Support for Data Quality Measurement and Control: A New Era of Data Integrity

The quest for impeccable data is universal, and AI Support for Data Quality Measurement and Control represents a pivotal shift in how organizations manage their most critical asset. Organizations grapple daily with incomplete, inaccurate, or inconsistent data, which directly affects analytical insights, operational efficiency, and strategic decision-making. Integrating artificial intelligence into data quality measurement is no longer a luxury but a strategic imperative: it enables enterprises to build trust in their vast datasets and derive meaningful value from them.

Revolutionizing Data Quality Measurement with AI

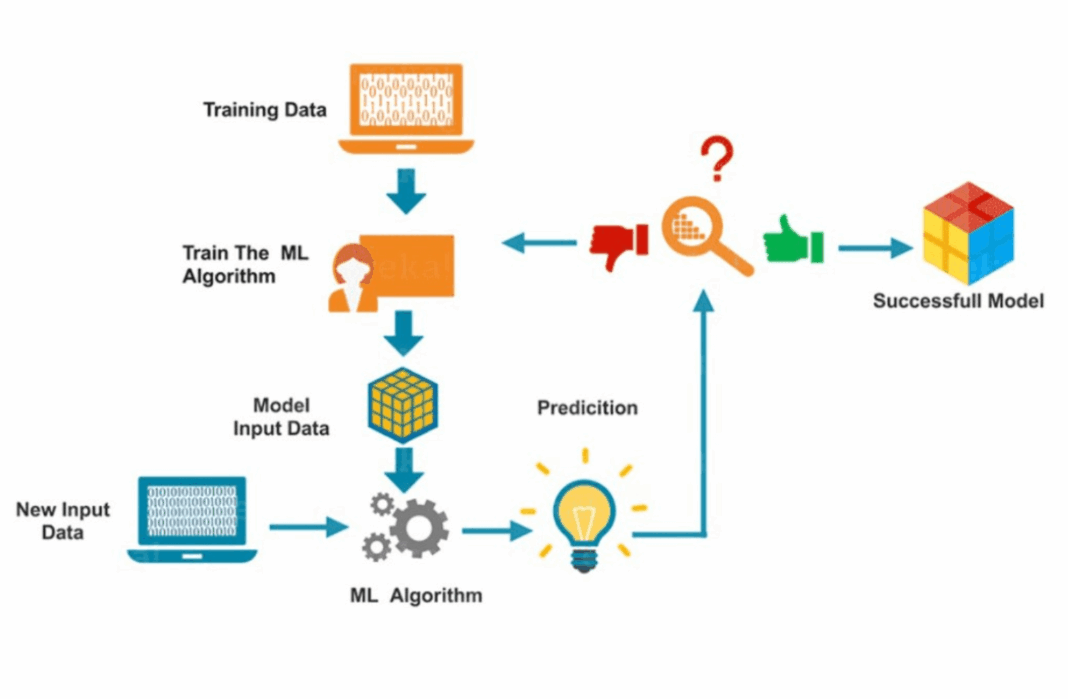

In today’s data-driven landscape, the sheer volume and velocity of information outpace traditional, manual data quality processes. This is where AI Support for Data Quality Measurement and Control emerges as a game-changer. AI algorithms are transforming how we approach data quality, moving beyond static rules and reactive checks to proactive, intelligent monitoring. At the core is machine learning-based anomaly detection, the primary way AI/ML is applied to identify deviations in key data characteristics such as freshness, volume, and distribution.

With automated detection of anomalies and inconsistencies, machine learning can identify subtle errors, drifts, and deviations that human review would often miss or discover too late. Evaluation becomes more accurate and precise because the models learn complex, evolving data patterns over time. Unlike predefined rules, AI systems can adapt to changes in data behavior, offering more granular and reliable assessments of data health across vast and dynamic datasets. This capability is especially important in modern data environments, where such tooling is often grouped under the category of Data Quality & Observability Platforms.
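
As a minimal sketch of how a volume anomaly might be flagged, the Python example below scores daily row counts with a robust z-score built from the median and the median absolute deviation (MAD). This is a simple statistical stand-in for the learned detectors described above, not any particular platform's method. The function names, the sample counts, and the 3.5 threshold are illustrative assumptions.

```python
from statistics import median


def robust_z_scores(values):
    """Return modified z-scores based on the median and the MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # All values (or most of them) are identical; nothing stands out.
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores
    # when the data is roughly normal.
    return [0.6745 * (v - med) / mad for v in values]


def flag_volume_anomalies(daily_row_counts, threshold=3.5):
    """Return the indices of days whose row count deviates strongly."""
    scores = robust_z_scores(daily_row_counts)
    return [i for i, score in enumerate(scores) if abs(score) > threshold]


# Hypothetical row counts for seven daily loads; the last load is truncated.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_075, 3_150]
anomalies = flag_volume_anomalies(daily_row_counts)
print(anomalies)  # [6]
```

The median and MAD are used instead of the mean and standard deviation because a single extreme load would otherwise inflate the baseline it is being compared against.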

Core components contributing to this revolution include advanced data profiling tools powered by AI, which automatically discover data patterns, relationships, and potential inconsistencies. Semantic consistency checks, often leveraging Natural Language Processing (NLP) for unstructured or semi-structured data, ensure that data values make sense within their context. Furthermore, AI facilitates the automated discovery of data quality rules, reducing the reliance on manual rule definition and maintenance. This is a significant leap from traditional methods, providing a foundation for truly intelligent data governance.
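
To make automated rule discovery concrete, here is a small sketch that profiles a sample of column values and proposes validation rules from what it observes. It is a deliberately simple heuristic rather than a machine learning model, and the names `infer_rules` and `violates`, the `max_categories` cut-off, and the sample data are all assumptions made for illustration.

```python
def infer_rules(values, max_categories=5):
    """Propose simple quality rules from a sample of column values."""
    non_null = [v for v in values if v is not None]
    rules = {"nullable": len(non_null) < len(values)}
    is_numeric = bool(non_null) and all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_null
    )
    if is_numeric:
        rules["type"] = "numeric"
        rules["min"] = min(non_null)
        rules["max"] = max(non_null)
    else:
        distinct = set(non_null)
        if len(distinct) <= max_categories:
            rules["type"] = "categorical"
            rules["allowed"] = sorted(distinct, key=str)
        else:
            rules["type"] = "text"
    return rules


def violates(value, rules):
    """Return True if a new value breaks the inferred rules."""
    if value is None:
        return not rules["nullable"]
    if rules["type"] == "numeric":
        if not isinstance(value, (int, float)) or isinstance(value, bool):
            return True
        return not (rules["min"] <= value <= rules["max"])
    if rules["type"] == "categorical":
        return value not in rules["allowed"]
    return False  # free text: no rule inferred in this sketch


# Hypothetical samples taken from two columns.
country_rules = infer_rules(["US", "DE", "US", "FR"])
amount_rules = infer_rules([1, 5, None, 3])

print(country_rules)
print(violates("XX", country_rules))  # True: value never seen in the sample
print(violates(7, amount_rules))      # True: outside the observed range
```

Rules inferred this way are only as good as the sample they came from, so in practice they would be proposed to a data steward for review rather than enforced blindly.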

AI-Driven Control for Superior Data Integrity

Beyond measurement, AI provides control mechanisms that strengthen data integrity. One of the most critical is the proactive identification of data issues. By continuously analyzing data streams and historical patterns, AI can predict potential problems before they develop into critical errors or costly downstream impacts. This foresight allows data teams to intervene early, preventing wider data corruption and protecting the reliability of insights derived from the data.

Intelligent data cleansing and transformation capabilities are another cornerstone of AI-driven control. Instead of relying on rigid scripts, AI can suggest or even automatically implement corrections based on learned patterns and contextual understanding. For instance, AI might identify common misspellings or inconsistent formatting across disparate data sources and propose standardized corrections, streamlining the remediation process. This not only speeds up the cleansing cycle but also reduces the human effort required, freeing up valuable resources for more strategic tasks.
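
The misspelling and formatting example can be sketched with fuzzy string matching from Python's standard library. This uses `difflib.get_close_matches` as a lightweight substitute for a learned model; the canonical value list, the 0.8 similarity cut-off, and the function name `suggest_corrections` are assumptions for illustration.

```python
from difflib import get_close_matches


def suggest_corrections(values, canonical, cutoff=0.8):
    """Map raw values to a proposed canonical spelling, where one is found."""
    lookup = {name.lower(): name for name in canonical}
    suggestions = {}
    for raw in values:
        key = raw.strip().lower()
        if key in lookup:
            # Only casing or whitespace differs from the canonical form.
            if raw != lookup[key]:
                suggestions[raw] = lookup[key]
            continue
        # Otherwise look for the closest canonical value above the cut-off.
        match = get_close_matches(key, list(lookup), n=1, cutoff=cutoff)
        if match:
            suggestions[raw] = lookup[match[0]]
    return suggestions


# Hypothetical reference list and raw values from disparate sources.
canonical = ["Germany", "France", "United States"]
raw_values = ["germany ", "Grmany", "France", "Untied States", "Atlantis"]

suggestions = suggest_corrections(raw_values, canonical)
for raw, fixed in suggestions.items():
    print(f"{raw!r} -> {fixed!r}")
```

The function returns suggestions instead of overwriting values, which matches the "suggest or automatically implement" distinction: values with no close match, such as `"Atlantis"` here, are left for human review.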

Furthermore, continuous monitoring is inherent to AI-powered data quality solutions. These systems provide real-time oversight and alerts for any degradation in data quality, ensuring sustained quality across the data lifecycle. Whether it’s a sudden drop in data volume, an unexpected change in distribution, or a deviation from expected freshness levels, the system can flag it immediately, allowing for prompt investigation and resolution. This constant vigilance is paramount for maintaining high data integrity in fast-paced data ecosystems.
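
A freshness check of this kind can be sketched by learning the typical gap between loads from past arrival times and alerting when the current gap is much longer. The function name `freshness_alert`, the tolerance factor of 3, and the timestamps are hypothetical; a production system would also need scheduling and alert routing, which are out of scope here.

```python
from datetime import datetime, timedelta
from statistics import median


def freshness_alert(arrival_times, now, tolerance=3.0):
    """Return an alert message if data is overdue, otherwise None."""
    if len(arrival_times) < 2:
        raise ValueError("need at least two arrivals to learn a typical gap")
    # Learn the usual cadence from the gaps between consecutive loads.
    gaps = [
        (later - earlier).total_seconds()
        for earlier, later in zip(arrival_times, arrival_times[1:])
    ]
    typical_gap = median(gaps)
    current_gap = (now - arrival_times[-1]).total_seconds()
    if current_gap > tolerance * typical_gap:
        return (
            f"stale: last load {current_gap / 3600:.1f}h ago, "
            f"typical gap {typical_gap / 3600:.1f}h"
        )
    return None


# Hypothetical table that has been loaded once per hour.
start = datetime(2024, 1, 1, 0, 0)
arrivals = [start + timedelta(hours=h) for h in range(6)]

on_time = freshness_alert(arrivals, now=arrivals[-1] + timedelta(hours=1))
overdue = freshness_alert(arrivals, now=arrivals[-1] + timedelta(hours=5))
print(on_time)  # None
print(overdue)  # stale: last load 5.0h ago, typical gap 1.0h
```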

Challenges and Barriers to Adoption

While the benefits of AI Support for Data Quality Measurement and Control are clear, several challenges hinder its widespread adoption. One significant hurdle is the complexity of integrating AI solutions with existing legacy systems and diverse data architectures. Many organizations operate with a patchwork of technologies, and ensuring seamless interoperability requires significant effort and expertise.

Another challenge lies in the interpretability and explainability of AI models used for data quality. Understanding why an AI model flagged a particular data point as an anomaly, or how it arrived at a suggested correction, can be crucial for trust and compliance. Lack of transparency can create skepticism among data stewards and make it difficult to debug or fine-tune the AI system. Data drift, where the characteristics of incoming data change over time, can also degrade the performance of trained AI models, requiring continuous retraining and monitoring.
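
One common way to quantify data drift is the Population Stability Index (PSI), which compares how a baseline sample and a current sample are spread across the same bins. The sketch below is one possible implementation under stated assumptions: equal-width bins taken from the baseline range, a small smoothing constant to avoid empty bins, and a 0.2 alert threshold, which is a frequently quoted rule of thumb rather than a fixed standard.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compute PSI between two numeric samples using baseline-derived bins."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bin_shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Add 0.5 to every bin so no share is exactly zero.
        total = len(sample) + 0.5 * bins
        return [(c + 0.5) / total for c in counts]

    base_shares = bin_shares(baseline)
    curr_shares = bin_shares(current)
    return sum(
        (c - b) * math.log(c / b) for b, c in zip(base_shares, curr_shares)
    )


# Hypothetical feature values: the current batch is shifted upward by 40.
baseline = list(range(100))
shifted = [x + 40 for x in baseline]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
print(psi_same)           # 0.0
print(psi_shifted > 0.2)  # True: drift large enough to warrant review
```

A score like this gives data stewards a single, explainable number to inspect when deciding whether a model needs retraining.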

The skill gap is another critical barrier. Implementing and managing advanced AI-driven data quality platforms requires specialized knowledge in machine learning, data engineering, and data governance. Attracting and retaining such talent can be costly and difficult for many organizations. Finally, the initial investment in technology, infrastructure, and training can be substantial, making the return on investment (ROI) a key consideration for potential adopters, especially when compared to simpler, albeit less effective, traditional methods.

Business Value and ROI of AI Data Quality Measurement

The strategic adoption of AI Data Quality Measurement delivers substantial business value and a compelling return on investment. Firstly, improved decision-making and business insights are a direct result of reliable, high-quality data. When leaders can trust the underlying information, they are empowered to make more accurate and confident strategic choices, leading to better business outcomes and competitive advantage.

Secondly, significant operational efficiency gains are realized by automating repetitive and time-consuming data quality tasks. This automation frees up valuable data professionals from manual error detection and remediation, allowing them to focus on higher-value activities such as advanced analytics, model development, and innovation. The reduction in manual effort translates directly into lower operational costs and faster data processing cycles.

Moreover, enhanced data quality significantly reduces the costs associated with data errors, such as rework, lost revenue due to incorrect reporting, customer dissatisfaction, and potential regulatory fines. For instance, in sectors like finance or healthcare, poor data quality can lead to non-compliance with strict regulations, resulting in severe penalties. By ensuring data quality for AI and MLOps processes, organizations can deploy machine learning models faster and with greater confidence, accelerating time-to-market for data products and AI-powered services.

Ultimately, this AI-driven approach helps in building pervasive trust in data assets across the entire organization. When stakeholders have confidence in the accuracy and integrity of their data, it fosters a data-driven culture, encourages wider data usage, and maximizes the value extracted from every data point. This trust is fundamental for any organization aspiring to leverage data as a strategic asset.

Comparative Insight: AI-Driven DQ vs. Traditional Data Management

Understanding the distinction between AI-driven data quality measurement and traditional data management practices is crucial for appreciating the potential of the former. Traditional data quality approaches have historically relied on a combination of manual processes, rigid rule-based systems, and batch processing. These methods often involve defining a static set of rules to identify common data issues, followed by human review and correction.

A traditional data lake or data warehouse model, while excellent for storing vast amounts of data, typically separates data quality checks into distinct, often retrospective, phases. Data is ingested, stored, and then potentially subjected to cleansing routines before it can be used for analysis. This approach is inherently reactive and labor-intensive, and it struggles with the scale and complexity of modern data ecosystems. Its limitations become apparent when dealing with diverse data types, high data velocity, and evolving business requirements. Manual rule definition is arduous, often incomplete, and difficult to maintain as data schemas or business logic change. Moreover, human-driven validation does not scale well, making it impractical for petabyte-scale data lakes.

In stark contrast, AI-driven data quality solutions, often central to a Data Quality & Observability Platform, offer a proactive, adaptive, and scalable alternative. Platforms that use machine learning-based anomaly detection continually monitor data streams in real time or near real time, identifying issues as they emerge rather than after they have caused problems. With unsupervised ML applied to data freshness, volume, and distribution, the system learns what “normal” data behavior looks like and flags deviations automatically, even for patterns that were not explicitly defined by rules.
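
The difference from a static rule can be illustrated with a baseline that is learned per weekday. In the sketch below, a count of around 200 rows is normal on a weekend but anomalous midweek, something a single fixed threshold cannot express. This is a simple per-group mean and standard deviation, not a full unsupervised model; the function names, the synthetic history, and the factor `k=3.0` are assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev


def learn_baseline(history):
    """Learn (mean, standard deviation) of row counts for each weekday.

    `history` is a list of (weekday, row_count) pairs with at least two
    observations per weekday, since stdev needs two or more points.
    """
    by_day = defaultdict(list)
    for weekday, count in history:
        by_day[weekday].append(count)
    return {day: (mean(counts), stdev(counts)) for day, counts in by_day.items()}


def is_anomalous(baseline, weekday, count, k=3.0):
    """Flag a count more than k standard deviations from that day's norm."""
    mu, sigma = baseline[weekday]
    return abs(count - mu) > k * max(sigma, 1e-9)


# Synthetic four-week history: about 1000 rows on weekdays (0-4),
# about 200 rows on weekends (5-6), with slow growth week over week.
history = []
for week in range(4):
    for day in range(7):
        typical = 1000 if day < 5 else 200
        history.append((day, typical + 10 * week))

baseline = learn_baseline(history)
saturday_flag = is_anomalous(baseline, weekday=5, count=210)
wednesday_flag = is_anomalous(baseline, weekday=2, count=210)
print(saturday_flag)   # False: 210 rows is normal for a Saturday
print(wednesday_flag)  # True: 210 rows is far below a normal Wednesday
```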

Key data governance features like automated rule discovery and data lineage are significantly enhanced by AI. Instead of manually mapping data flows and defining validation rules, AI can infer these relationships and generate quality rules based on observed data behavior and metadata. This dynamic capability has a clear advantage over static rule sets, as AI systems can adapt to new data sources, changing business logic, and unforeseen data patterns. The predictive nature of AI in identifying potential data issues before they escalate also represents a major shift from the reactive paradigm of traditional methods. While vendors like Monte Carlo, Bigeye, Soda, Anomalo, and Collibra DQ offer varying degrees of AI integration, the core philosophy remains consistent: leverage intelligence to make data quality autonomous and omnipresent.

The speed and accuracy of AI for data quality measurement far surpass human capabilities for large datasets, drastically reducing the time required to detect and resolve issues. This translates to faster time-to-insight, more reliable analytical models, and a significant competitive advantage. The ability of AI to continuously learn and improve over time also means that the data quality system becomes more robust and intelligent with every piece of data it processes, a feature entirely absent in traditional, static approaches.

World2Data Verdict

The imperative for organizations to adopt AI Support for Data Quality Measurement and Control is undeniable. As data volumes explode and the reliance on accurate insights intensifies, traditional, manual data quality processes are simply insufficient. World2Data believes that integrating AI into every facet of data quality is not just an enhancement but a fundamental requirement for modern data strategies. Organizations must prioritize investments in machine learning-based anomaly detection and automated rule discovery to build resilient, trustworthy data pipelines. The future of data integrity lies in intelligent automation, transforming reactive clean-up into proactive prevention. Enterprises that embrace this shift will secure a significant competitive edge, enabling faster, more reliable decision-making and unlocking the full potential of their data assets.