Unlocking Instant Insights: Essential Tools for Accelerating Real-Time Big Data Processing

Platform Category: Stream Processing Framework

Core Technology/Architecture: Distributed In-Memory Processing

Key Data Governance Feature: Schema Registry

Primary AI/ML Integration: Streaming Machine Learning Libraries and Real-time Feature Engineering

Main Competitors/Alternatives: Apache Flink, Apache Spark Streaming, Apache Kafka Streams, Google Cloud Dataflow, Amazon Kinesis

In the relentless pace of the modern digital economy, the ability to derive immediate value from ever-growing data streams is no longer an aspiration but a critical imperative. Real-Time Big Data Acceleration Tools stand as the bedrock of competitive advantage, empowering enterprises to process, analyze, and act upon information as it is generated. This deep dive explores the critical components, architectural considerations, and business impact of leveraging these powerful tools to transform raw data into instant, actionable intelligence.

Introduction: The Imperative for Real-Time Big Data Acceleration

The volume, velocity, and variety of data generated by modern applications, IoT devices, and user interactions have surged to unprecedented levels. Businesses across sectors, from finance and e-commerce to healthcare and manufacturing, face the challenge of extracting timely insights from this continuous deluge. Traditional batch processing, with its inherent latency, often renders insights stale, preventing organizations from reacting swiftly to market shifts, identifying fraud in real time, or personalizing customer experiences instantaneously. This urgent need for immediate data processing has spurred the evolution and adoption of sophisticated Real-Time Big Data Acceleration Tools.

These tools are designed to minimize latency across the entire data pipeline, enabling organizations to process events as they occur, make split-second decisions, and maintain a competitive edge. The objective of this article is to provide a comprehensive analysis of the technologies and strategies that underpin effective real-time big data acceleration, highlighting their architectural foundations, key features, challenges, and profound business value.

Core Breakdown: Architecture and Capabilities of Real-Time Big Data Platforms

An effective real-time big data platform relies on an architecture of several integrated components, each playing a distinct role in accelerating data processing. At its core is typically a Stream Processing Framework built on Distributed In-Memory Processing, which achieves low latency by keeping working data and state in RAM across a cluster instead of reading from disk for every operation.

Architectural Pillars of Real-Time Acceleration

- Data Ingestion Layer: This is the entry point for all streaming data. Technologies like Apache Kafka are paramount here, providing a horizontally scalable, fault-tolerant, and durable distributed log; with enough brokers and partitions, a Kafka cluster can handle millions of events per second. Kafka acts as a central nervous system, buffering data streams and retaining them durably so that downstream processing engines can consume them reliably and replay them when needed. Alternatives like Amazon Kinesis and Google Cloud Pub/Sub offer similar capabilities in cloud environments.

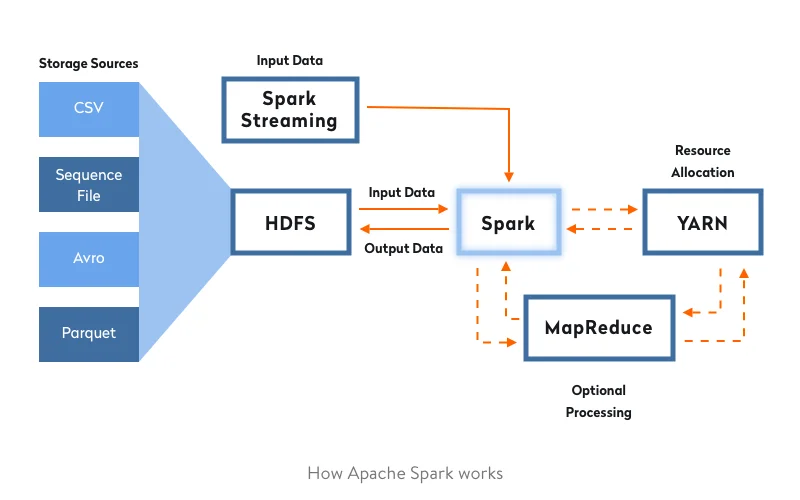

- Stream Processing Engines: Once ingested, data needs to be processed, transformed, and analyzed continuously. Tools like Apache Flink and Apache Spark Streaming (with its micro-batch processing model) are the workhorses of this layer. Apache Flink, in particular, excels at true event-at-a-time processing, stateful computations, and complex event processing (CEP), making it well suited to fraud detection, real-time analytics, and monitoring systems. These engines partition work across a cluster and process it in parallel, which lets throughput scale with data volume while keeping per-event latency low.

- In-Memory Data Grids & Databases: For applications requiring immediate data access and rapid lookups, in-memory data grids (IMDGs) and specialized databases are crucial. These technologies keep data in RAM, avoiding disk I/O on the read path and often achieving sub-millisecond lookups. Examples include Apache Ignite, Redis, and time-series databases optimized for high-volume data points.

- Real-time Feature Stores: A critical component for AI/ML integration, a Feature Store provides a centralized repository of curated, consistent features that can be served to machine learning models with low latency. Because the same feature definitions are used both to build training datasets and to serve features at inference time, it helps prevent training-serving skew. This capability underpins streaming machine learning libraries and real-time feature engineering, letting models score against the freshest available data.
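To make the Data Ingestion Layer point concrete: Kafka preserves ordering within a partition, and its default partitioner chooses a partition by hashing the record key (murmur2 in the Java client), so all events for one key share a partition and keep their relative order. The sketch below imitates that routing in plain Python with no Kafka client involved; the partition count, the event data, and the use of CRC32 in place of murmur2 are illustrative assumptions.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 4  # hypothetical partition count for a single topic


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition index.

    CRC32 is a stand-in here; Kafka's default partitioner uses a murmur2 hash.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events):
    """Append each (key, value) event to its partition's log in arrival order."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key)].append((key, value))
    return partitions


events = [("user-1", "login"), ("user-2", "view"),
          ("user-1", "purchase"), ("user-1", "logout")]
logs = route(events)
user1_log = [v for k, v in logs[partition_for("user-1")] if k == "user-1"]
print(user1_log)  # ['login', 'purchase', 'logout']
```

Because ordering is only guaranteed within a partition, choosing the key (here, a user ID) effectively chooses the unit of both ordering and parallelism.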
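The stateful, event-time processing that the Stream Processing Engines point attributes to Flink can be illustrated with a keyed tumbling-window count driven by a watermark. This is a single-process Python sketch of the concept, not Flink's DataStream API; the 10-second window, the 2-second lateness bound, and the policy of dropping events that arrive behind the watermark are assumptions chosen for the example.

```python
from collections import defaultdict

WINDOW_MS = 10_000           # hypothetical 10-second tumbling window
ALLOWED_LATENESS_MS = 2_000  # hypothetical bound on out-of-order arrival


class TumblingWindowCounter:
    """Keyed event-time window counts, emitted once the watermark passes a window's end."""

    def __init__(self):
        self.state = defaultdict(int)   # (key, window_start_ms) -> count
        self.watermark = float("-inf")  # events older than this are no longer expected

    def process(self, key, ts_ms):
        window_start = ts_ms - ts_ms % WINDOW_MS
        if window_start + WINDOW_MS <= self.watermark:
            return []  # too late: this window already fired, so the event is dropped
        self.state[(key, window_start)] += 1
        self.watermark = max(self.watermark, ts_ms - ALLOWED_LATENESS_MS)
        return self._fire()

    def _fire(self):
        fired = []
        for key, start in sorted(self.state):  # sorted() copies the keys, so popping is safe
            if start + WINDOW_MS <= self.watermark:
                fired.append((key, start, self.state.pop((key, start))))
        return fired


counter = TumblingWindowCounter()
stream = [("card-1", 1_000), ("card-1", 4_000), ("card-2", 9_500),
          ("card-1", 8_000),   # out of order, but within the lateness bound
          ("card-1", 13_000),  # advances the watermark to 11_000, closing window [0, 10_000)
          ("card-2", 9_000)]   # behind the watermark: dropped
fired = [result for key, ts in stream for result in counter.process(key, ts)]
print(fired)  # [('card-1', 0, 3), ('card-2', 0, 1)]
```

Production engines expose the same ideas (windows, watermarks, handling of late data) through their own APIs and also persist per-key state so it survives failures, which this sketch does not attempt.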
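The training-serving consistency described under Real-time Feature Stores comes from defining each feature once and reusing that definition offline and online. A minimal sketch, assuming a plain dict as the online store; `Txn`, `txn_features`, `materialize`, and `get_online_features` are hypothetical names, not the API of any particular feature store product.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Txn:
    user_id: str
    amount: float


def txn_features(history: List[Txn]) -> Dict[str, float]:
    """One feature definition, reused by both the training and the serving path."""
    amounts = [t.amount for t in history]
    return {
        "txn_count": float(len(amounts)),
        "txn_avg_amount": sum(amounts) / len(amounts) if amounts else 0.0,
    }


# Hypothetical online store: entity key -> latest feature vector.
online_store: Dict[str, Dict[str, float]] = {}


def materialize(user_id: str, history: List[Txn]) -> None:
    """Write freshly computed features to the low-latency store."""
    online_store[user_id] = txn_features(history)


def get_online_features(user_id: str) -> Dict[str, float]:
    """Serving-time lookup; unknown entities fall back to empty-history features."""
    return online_store.get(user_id, txn_features([]))


history = [Txn("u1", 20.0), Txn("u1", 40.0)]
training_row = txn_features(history)  # offline: built from historical data
materialize("u1", history)            # online: refreshed as new events arrive
assert get_online_features("u1") == training_row  # same logic on both paths, so no skew
```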

Key Components & Technologies in Focus

Beyond the core engines, several other technologies contribute to the robustness and efficiency of Real-Time Big Data Acceleration Tools:

- Data Serialization Formats: Compact, schema-based formats reduce data size and improve throughput. Apache Avro (like Protocol Buffers) is a row-oriented binary format well suited to messages on the wire, while Apache Parquet is a columnar format for data at rest, where it speeds up analytical scans. Their schema definitions are crucial for managing data evolution.

- Schema Registry: A Key Data Governance Feature, a Schema Registry (e.g., Confluent Schema Registry) stores versioned schemas for data streams and checks each new version against a configured compatibility rule (such as backward or forward compatibility) before producers can use it. This keeps producers from publishing changes that would break existing consumers and allows data formats to evolve safely.

- Distributed Caching: A distributed cache keeps frequently accessed data in memory on nodes close to the compute resources, so pipelines avoid recomputing results or re-fetching them from slower storage, improving overall system performance.
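To show why schema-based binary formats shrink messages, the sketch below hand-encodes a two-field record following Avro's binary encoding rules: integers are zig-zag encoded and written as variable-length base-128 values, strings are a length followed by UTF-8 bytes, and record fields are written in schema order with no field names. It is a teaching sketch assuming a hypothetical record schema (`user_id: long`, `page: string`); production code should use an Avro library, and Parquet's columnar layout is not covered.

```python
import json


def zigzag(n: int) -> int:
    """Zig-zag map a signed 64-bit int so that small magnitudes become small unsigned values."""
    return (n << 1) ^ (n >> 63)


def encode_long(n: int) -> bytes:
    """Avro int/long encoding: zig-zag, then little-endian base-128 varint."""
    z = zigzag(n)
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # low 7 bits, with the continuation bit set
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_click(user_id: int, page: str) -> bytes:
    """Encode a record {user_id: long, page: string}: fields in schema order, no field names."""
    page_bytes = page.encode("utf-8")
    return encode_long(user_id) + encode_long(len(page_bytes)) + page_bytes


binary = encode_click(42, "/home")
text = json.dumps({"user_id": 42, "page": "/home"}).encode("utf-8")
print(len(binary), len(text))  # 7 32
```

The binary form is smaller mainly because field names and punctuation are not repeated in every message; the reader needs the writer's schema to decode it, which is exactly what a Schema Registry supplies.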
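A Schema Registry's compatibility check can be approximated for flat Avro records. Under backward compatibility (the default mode in Confluent Schema Registry), consumers using the new schema must be able to read data written with the previous one, so every field the new schema adds needs a default, while fields it removes are simply skipped by the reader. The function below is a simplified, hypothetical checker that ignores type promotion, unions, aliases, and nested records.

```python
from typing import Dict, List


def backward_compat_errors(old: Dict, new: Dict) -> List[str]:
    """Reasons why readers on `new` could not decode data written with `old` (empty = OK).

    Simplified Avro-style rules for flat records only: no type promotion,
    unions, aliases, or nested types.
    """
    errors = []
    old_fields = {f["name"]: f for f in old["fields"]}
    for field in new["fields"]:
        name = field["name"]
        if name in old_fields:
            if old_fields[name]["type"] != field["type"]:
                errors.append(f"type of '{name}' changed")
        elif "default" not in field:
            errors.append(f"new field '{name}' has no default")
    # Fields dropped from `new` are fine: the reader simply skips them.
    return errors


v1 = {"fields": [{"name": "id", "type": "long"}, {"name": "amount", "type": "double"}]}
v2_ok = {"fields": v1["fields"] + [{"name": "currency", "type": "string", "default": "USD"}]}
v2_bad = {"fields": v1["fields"] + [{"name": "currency", "type": "string"}]}
print(backward_compat_errors(v1, v2_ok))   # []
print(backward_compat_errors(v1, v2_bad))  # ["new field 'currency' has no default"]
```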
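One common way for a distributed cache to decide which node owns a key is consistent hashing: nodes and keys are hashed onto the same ring, and each key belongs to the next node clockwise, so adding a node moves only the keys that the new node takes over. This is a minimal sketch with assumed node names and 100 virtual nodes per server; not every product works this way (Redis Cluster, for example, assigns keys to 16,384 fixed hash slots instead).

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring assigning keys to cache nodes; virtual nodes even out the load."""

    def __init__(self, nodes, vnodes=100):
        ring = sorted((self._hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes))
        self._hashes = [h for h, _ in ring]
        self._nodes = [n for _, n in ring]

    @staticmethod
    def _hash(s: str) -> int:
        # A cryptographic hash is used only for its uniform spread, not for security.
        return int.from_bytes(hashlib.sha256(s.encode("utf-8")).digest()[:8], "big")

    def node_for(self, key: str) -> str:
        # First virtual node clockwise from the key's hash, wrapping around the ring.
        idx = bisect.bisect(self._hashes, self._hash(key)) % len(self._hashes)
        return self._nodes[idx]


keys = [f"user:{i}" for i in range(1000)]
before = HashRing(["cache-a", "cache-b", "cache-c"])
after = HashRing(["cache-a", "cache-b", "cache-c", "cache-d"])
moved = [k for k in keys if before.node_for(k) != after.node_for(k)]
# Only keys claimed by the new node move; everything else stays cached where it was.
print(all(after.node_for(k) == "cache-d" for k in moved))  # True
```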

Real-time Feature Engineering & Streaming Machine Learning

The integration of AI and Machine Learning into real-time pipelines is a game-changer. Real-time feature engineering allows for the computation of features from streaming data (e.g., calculating a user’s average transaction value over the last 5 minutes) as events arrive. These features are then fed into pre-trained models or used by streaming machine learning libraries for immediate predictions or anomaly detection. This capability is a primary AI/ML integration point, enabling dynamic personalization, fraud prevention, and predictive maintenance.
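The running example above (a user's average transaction value over the last 5 minutes) can be maintained incrementally: keep each user's recent events in a queue alongside a running sum, evict whatever falls out of the window, and divide. A minimal in-process sketch, assuming per-user events arrive in timestamp order; a production pipeline would keep this state in the stream processor's fault-tolerant state store instead of Python dicts.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 300  # "the last 5 minutes"


class SlidingAverage:
    """Per-user average transaction value over a trailing time window, updated on every event."""

    def __init__(self, window_seconds: float = WINDOW_SECONDS):
        self.window = window_seconds
        self.events = defaultdict(deque)  # user_id -> deque of (ts, amount), oldest first
        self.sums = defaultdict(float)    # user_id -> sum of amounts currently in the window

    def update(self, user_id: str, ts: float, amount: float) -> float:
        q = self.events[user_id]
        q.append((ts, amount))
        self.sums[user_id] += amount
        # Evict events that left the window; assumes per-user timestamps arrive in order.
        while q and q[0][0] <= ts - self.window:
            _, old_amount = q.popleft()
            self.sums[user_id] -= old_amount
        return self.sums[user_id] / len(q)


avg = SlidingAverage()
print(avg.update("u1", 0, 100.0))   # 100.0
print(avg.update("u1", 120, 50.0))  # 75.0
print(avg.update("u1", 310, 30.0))  # 40.0 (the t=0 event has left the window)
```

Each update costs amortized constant time, since every event is appended and evicted at most once. Over very long runs a floating-point running sum can accumulate rounding error, so periodically recomputing it from the queue is a reasonable safeguard.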

Challenges and Barriers to Adoption

While the benefits are clear, implementing and managing Real-Time Big Data Acceleration Tools comes with its own set of challenges:

- Complexity and Skill Gap: Designing, deploying, and maintaining highly available and fault-tolerant real-time systems requires specialized skills in distributed systems, stream processing, and MLOps.

- Data Quality and Consistency: Ensuring data quality and consistency in a continuous stream is difficult. Data drift (a change in the distribution of input features) and concept drift (a change in the relationship between features and the target variable) can severely degrade model performance in real-time scenarios. Robust monitoring and retraining strategies are essential.

- State Management: Stateful stream processing, while powerful, introduces complexities in managing fault tolerance, consistency, and scalability of state across distributed systems.

- Cost of Infrastructure: In-memory processing and highly available distributed systems can be resource-intensive and thus costly to operate, especially at scale.

- Debugging and Monitoring: Diagnosing issues in complex, distributed, real-time pipelines can be challenging due to the asynchronous nature of events and vast amounts of log data.
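Drift monitoring, as called for in the Data Quality and Consistency point, is often done by comparing a feature's recent distribution against a reference sample. The Population Stability Index (PSI) is one widely used metric for this: bin both samples with the same edges, then sum (current share minus reference share) times the log of their ratio. The bin edges, sample data, and `eps` floor below are assumptions, and the frequently quoted alert level of about 0.2 is a rule of thumb, not a standard.

```python
import bisect
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float],
        edges: List[float], eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `reference`, using shared bin edges."""

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(edges) + 1)  # len(edges) cut points -> len(edges) + 1 bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [1, 2, 3, 4, 5, 6, 7, 8]  # e.g. a feature's values seen at training time
shifted = [v + 5 for v in reference]  # the same feature after a shift in production
edges = [2.5, 5.0, 7.5]
print(psi(reference, reference, edges))      # 0.0
print(psi(reference, shifted, edges) > 0.2)  # True
```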
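The State Management concern about fault tolerance is typically addressed by snapshotting operator state together with the input position, then, after a failure, restoring the snapshot and replaying the log from that position. Flink does this with distributed snapshots coordinated by checkpoint barriers; the single-operator sketch below shows only the core idea, keeps the snapshot in memory instead of durable storage, and uses hypothetical names throughout.

```python
import copy
from typing import Dict, List, Tuple


class CheckpointedCounter:
    """Keyed running counts whose state is snapshotted together with the input offset."""

    def __init__(self):
        self.counts: Dict[str, int] = {}
        self.offset = 0  # position of the next event to read from the replayable log
        self._snapshot: Tuple[int, Dict[str, int]] = (0, {})

    def process(self, log: List[str], upto: int) -> None:
        """Consume events from the current offset up to (not including) `upto`."""
        while self.offset < min(upto, len(log)):
            key = log[self.offset]
            self.counts[key] = self.counts.get(key, 0) + 1
            self.offset += 1

    def checkpoint(self) -> None:
        # Offset and state are captured as one unit so they can never disagree.
        self._snapshot = (self.offset, copy.deepcopy(self.counts))

    def recover(self) -> None:
        # Roll back to the last snapshot; later events will be re-read from the log.
        offset, saved = self._snapshot
        self.offset, self.counts = offset, copy.deepcopy(saved)


log = ["a", "b", "a", "c", "a"]  # stands in for a durable, replayable log such as a Kafka partition
op = CheckpointedCounter()
op.process(log, 3)
op.checkpoint()            # snapshot: offset 3, counts {'a': 2, 'b': 1}
op.process(log, 4)         # counts 'c'...
op.recover()               # ...then "crashes" before the next checkpoint
op.process(log, len(log))  # replay from offset 3
print(op.counts)           # {'a': 3, 'b': 1, 'c': 1}
```

Because the counts and the offset are restored as a pair, the replayed event `c` is counted once, not twice; snapshotting them separately would break that guarantee.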

Business Value and ROI of Real-Time Data Platforms

Despite the challenges, the return on investment (ROI) from adopting Real-Time Big Data Acceleration Tools is substantial:

- Faster Model Deployment & Improved Decision Making: By enabling real-time feature engineering and model inference, businesses can deploy machine learning models faster and make instantaneous, data-driven decisions that impact operations, customer experience, and revenue.

- Enhanced Data Quality for AI: A robust real-time pipeline, combining a Schema Registry with careful data governance and quality checks, helps keep the data feeding AI models consistent, timely, and of high quality, leading to more accurate predictions.

- Proactive Problem Solving: Real-time monitoring allows for immediate detection of anomalies, system failures, or fraudulent activities, enabling proactive interventions and minimizing potential damages.

- Personalized Customer Experiences: By analyzing customer behavior in real time, businesses can deliver hyper-personalized recommendations, offers, and services, which can significantly improve engagement and satisfaction.

- Operational Efficiency: Real-time insights into operational metrics can optimize resource allocation, identify bottlenecks, and streamline processes across various departments.
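The anomaly detection behind Proactive Problem Solving can start as simply as a streaming z-score: maintain a running mean and variance with Welford's online algorithm and flag values that sit too many standard deviations from the mean. The threshold of 3, the 10-event warm-up, and the latency figures are assumptions for illustration; real deployments may need baselines that account for seasonality.

```python
import math


class StreamingZScore:
    """Flags values far from the running mean, using Welford's online mean/variance update."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10):
        self.threshold = threshold
        self.warmup = max(warmup, 2)  # need at least 2 points for a sample std
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def observe(self, x: float) -> bool:
        """Score x against the statistics so far, then fold it into them."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            is_anomaly = std > 0 and abs(x - self.mean) / std > self.threshold
        # Welford's update (anomalies are folded in too, a deliberate simplification).
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = StreamingZScore()
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 100, 450]
flags = [detector.observe(v) for v in latencies_ms]
print(flags[-1], any(flags[:-1]))  # True False
```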

Comparative Insight: Real-Time Big Data Platform vs. Traditional Data Lake/Data Warehouse

Understanding the distinction between modern Real-Time Big Data Acceleration Tools and traditional data management paradigms like data lakes and data warehouses is crucial. While both serve to store and analyze data, their fundamental approaches to processing and their use cases diverge significantly.

Traditional Data Warehouses (DW) are highly structured repositories optimized for batch processing of historical data, primarily for reporting, business intelligence (BI), and strategic analysis. Data is typically ETL’d (Extract, Transform, Load) into a predefined schema, which can be time-consuming. While excellent for retrospective analysis and complex queries on aggregated data, DWs are not designed for low-latency ingest or immediate action on streaming data. They prioritize data consistency and structured querying.

Data Lakes, conversely, store raw, unstructured, semi-structured, and structured data at scale, without predefined schemas. They offer flexibility for various analytical workloads, including big data analytics, machine learning, and data exploration. However, like data warehouses, data lakes traditionally lean towards batch processing. While they can ingest real-time data, extracting immediate insights often requires subsequent batch processing steps, introducing latency.

Real-Time Big Data Platforms (utilizing Real-Time Big Data Acceleration Tools) fundamentally shift the paradigm from “data at rest” to “data in motion.” Their core philosophy is to process data continuously as it arrives, minimizing latency to milliseconds or seconds. They are built around stream processing engines, in-memory computing, and event-driven architectures. Key differences include:

- Latency: Real-time platforms focus on sub-second latency for immediate actions; DW/Data Lakes operate with minutes to hours (or even days) of latency for insights.

- Data State: Real-time platforms often deal with “data in flight” and transient states; DW/Data Lakes store “data at rest” for historical analysis.

- Workloads: Real-time platforms are for operational analytics, fraud detection, personalization, and anomaly detection; DW/Data Lakes are for strategic BI, reporting, and historical data mining.

- Schema Enforcement: While a Schema Registry is vital for real-time platforms to maintain consistency for data in motion, traditional DWs enforce strict schemas at ingest, and Data Lakes are schema-on-read.

- Complexity: Real-time platforms, especially those handling stateful computations and ensuring fault tolerance, tend to have higher operational complexity than managing a simple data lake.

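In many modern data architectures, these paradigms are complementary. A real-time stream processing layer can feed processed, aggregated data into a data warehouse or data lake for long-term storage and deeper historical analysis. Two well-known patterns formalize this combination: the lambda architecture runs a batch layer and a speed (streaming) layer side by side and merges their results at query time, while the kappa architecture uses a single streaming path and handles historical reprocessing by replaying the event log. Either approach lets organizations leverage the strengths of each system: the immediacy of real-time platforms for operational decisions and the historical depth of data lakes/warehouses for strategic insights.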
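In a lambda architecture, a batch layer periodically recomputes complete views from all historical data, a speed layer covers only the events that arrived since the last batch run, and a serving layer merges the two at query time. The sketch below shows that merge for page-view counts; the event data, the cutoff timestamp, and the function names are hypothetical. In a kappa architecture there would be only the streaming path, and historical recomputation would mean replaying the event log through it.

```python
from collections import Counter
from typing import Iterable, Tuple

Event = Tuple[int, str]  # (timestamp, page)


def batch_view(events: Iterable[Event], cutoff: int) -> Counter:
    """Batch layer: complete counts over all events up to the last batch run (stale by design)."""
    return Counter(page for ts, page in events if ts <= cutoff)


def speed_view(events: Iterable[Event], cutoff: int) -> Counter:
    """Speed layer: counts only events newer than the last batch run (kept per event in practice)."""
    return Counter(page for ts, page in events if ts > cutoff)


def query(page: str, batch: Counter, speed: Counter) -> int:
    """Serving layer: merge the batch view with the fresh speed view at query time."""
    return batch[page] + speed[page]


events = [(1, "/home"), (2, "/cart"), (3, "/home"), (7, "/home")]
cutoff = 5  # hypothetical timestamp of the last completed batch run
batch, speed = batch_view(events, cutoff), speed_view(events, cutoff)
print(query("/home", batch, speed))  # 3
```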

World2Data Verdict: Embracing the Future of Instant Data Value

The journey towards truly data-driven operations is intrinsically linked to mastering real-time capabilities. World2Data.com asserts that the strategic adoption of Real-Time Big Data Acceleration Tools is no longer merely an option but a foundational requirement for any enterprise aiming for agility, predictive power, and hyper-personalization. Organizations must prioritize investment in robust stream processing frameworks, intelligent data governance features like Schema Registries, and seamless integration with streaming machine learning libraries and real-time feature engineering. The future belongs to those who can not only collect data but also derive immediate, actionable intelligence from it, continuously adapting and innovating at the speed of business. We recommend focusing on a modular architecture that allows for incremental adoption and scalability, leveraging open-source powerhouses like Flink and Kafka, or cloud-native alternatives, while building in strong MLOps practices from the outset to manage the lifecycle of real-time models effectively.