Optimizing Big Data Analytics: Applying Spark in Large-Scale Data Processing

Applying Spark to large-scale data processing has emerged as a cornerstone strategy for organizations navigating the complexities of modern data landscapes. Spark, a powerful unified analytics engine, is designed to handle data of immense volume, velocity, and variety, helping businesses extract critical insights and drive innovation. From real-time analytics to sophisticated machine learning applications, Spark’s capabilities are well suited to unlocking value from even the most challenging datasets.

Introduction to Apache Spark: The Unifying Force in Big Data

In an era defined by data proliferation, the ability to efficiently process and analyze massive datasets is paramount. Apache Spark, a leading distributed computing framework, has transformed how enterprises approach large-scale data processing. Unlike Hadoop MapReduce, which is limited to batch jobs, Spark offers a unified engine for a wide range of workloads, including batch processing, interactive queries, real-time streaming, machine learning, and graph processing. Because its execution model keeps working data in memory whenever possible, Spark is much faster than disk-based engines for many workloads, making it a go-to platform for critical analytical tasks.

The objective of this article is to delve into the technical underpinnings of Spark, exploring its architectural advantages, key components, and practical applications in large-scale data environments. We will also examine the challenges inherent in adopting and optimizing Spark, its significant business value, and how it compares to traditional data processing paradigms. Ultimately, we aim to provide a comprehensive understanding of why applying Spark to large-scale data processing is not just a trend, but a fundamental requirement for data-driven success.

Key attributes that define Spark’s prowess include its open-source nature, robust fault tolerance, and a rich ecosystem of libraries. These elements combined make Spark an incredibly versatile and powerful tool, capable of transforming raw data into actionable intelligence at unprecedented speeds.

Core Breakdown: Apache Spark’s Architecture and Capabilities for Massive Datasets

Apache Spark’s architecture is meticulously designed for performance and scalability in distributed environments. At its heart lies the Spark Core, which provides the fundamental functionality for task scheduling, memory management, fault recovery, and interacting with storage systems. Built upon Spark Core are several libraries that extend its capabilities across various data processing paradigms.

The Power of Distributed Processing and In-Memory Computing

Spark’s ability to handle large datasets stems primarily from its distributed processing model. Data is partitioned across a cluster of machines, and computations are performed in parallel, drastically reducing processing times. This parallelism is further supercharged by in-memory computing. Unlike disk-based systems such as Hadoop MapReduce, Spark can cache data in RAM across the cluster, minimizing costly disk I/O. This allows iterative algorithms, common in machine learning and graph processing, to run much faster, in some cases by an order of magnitude or more.
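To make the partition-then-combine pattern and the benefit of caching concrete, here is a toy, pure-Python model (not Spark code). The `partition`, `DiskSource`, and `iterate` names are hypothetical; threads stand in for executors, and `DiskSource` counts reads to show what caching saves. In PySpark, the rough equivalent of keeping partitions in memory is calling `cache()` on an RDD or DataFrame before an iterative loop.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, num_partitions):
    """Split a list into contiguous, roughly equal partitions (a stand-in for Spark partitions)."""
    size, rem = divmod(len(data), num_partitions)
    parts, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < rem else 0)
        parts.append(data[start:end])
        start = end
    return parts


def parallel_sum_of_squares(parts):
    """Each worker computes a partial result for its own partition; the 'driver' combines them.
    Threads only illustrate the structure; they give no real CPU parallelism in CPython."""
    with ThreadPoolExecutor(max_workers=len(parts)) as pool:
        partials = list(pool.map(lambda p: sum(x * x for x in p), parts))
    return sum(partials)


class DiskSource:
    """Simulated disk-backed dataset that counts how many times it is read."""

    def __init__(self, n):
        self.n = n
        self.reads = 0

    def read(self):
        self.reads += 1
        return list(range(1, self.n + 1))


def iterate(source, iterations, use_cache, num_partitions=4):
    """Run an iterative job; with use_cache=True the partitions are read once and kept in memory."""
    cached = partition(source.read(), num_partitions) if use_cache else None
    result = None
    for _ in range(iterations):
        parts = cached if use_cache else partition(source.read(), num_partitions)
        result = parallel_sum_of_squares(parts)
    return result


uncached, cached = DiskSource(1000), DiskSource(1000)
iterate(uncached, iterations=5, use_cache=False)  # uncached.reads == 5
iterate(cached, iterations=5, use_cache=True)     # cached.reads == 1
```

With caching, the simulated disk is read once instead of once per iteration, which is the effect Spark’s caching has on iterative jobs.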

The Resilient Distributed Dataset (RDD) was Spark’s original core abstraction: an immutable, partitioned collection of items that can be operated on in parallel and rebuilt from its lineage if a partition is lost. RDDs still underpin Spark, but the higher-level DataFrame and Dataset APIs (Datasets are available in Scala and Java) deliver better performance through the Catalyst query optimizer and the Tungsten execution engine. Combined with schema-on-read support for formats such as JSON and Parquet, these APIs significantly improve query execution for structured and semi-structured data.
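The “resilient” part of RDD can be sketched with a toy class, assuming a simplified model in which each partition is described by the chain of transformations (its lineage) that produces it. `ToyRDD` is hypothetical; its method names echo PySpark’s `RDD.map`, `RDD.filter`, and `RDD.collect`, but it is not Spark’s implementation. It shows two properties: transformations stay lazy until an action runs, and a lost partition can be rebuilt by replaying its lineage.

```python
class ToyRDD:
    """Toy RDD: partitions are described by a recipe (lineage), not stored.
    Method names mirror PySpark's RDD API, but this class is hypothetical."""

    def __init__(self, compute, num_partitions, lineage):
        self._compute = compute            # function: partition index -> list of records
        self.num_partitions = num_partitions
        self.lineage = lineage             # names of the transformations applied so far

    @staticmethod
    def parallelize(data, num_partitions):
        size = -(-len(data) // num_partitions)  # ceiling division
        chunks = [data[i * size:(i + 1) * size] for i in range(num_partitions)]
        return ToyRDD(lambda i: list(chunks[i]), num_partitions, ["parallelize"])

    def map(self, f):
        parent = self._compute  # lazy: only records how to derive each partition
        return ToyRDD(lambda i: [f(x) for x in parent(i)],
                      self.num_partitions, self.lineage + ["map"])

    def filter(self, pred):
        parent = self._compute
        return ToyRDD(lambda i: [x for x in parent(i) if pred(x)],
                      self.num_partitions, self.lineage + ["filter"])

    def recompute_partition(self, i):
        """Recovering a lost partition replays the lineage for that partition only."""
        return self._compute(i)

    def collect(self):
        """Action: only now are the transformations actually executed."""
        return [x for i in range(self.num_partitions) for x in self._compute(i)]


calls = []


def double(x):
    calls.append(x)  # record when the transformation really runs
    return x * 2


rdd = ToyRDD.parallelize(list(range(10)), 3).map(double).filter(lambda x: x % 4 == 0)
# Nothing has run yet: calls is still empty until collect() is called
```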

Accelerating ETL and Data Ingestion with Spark

Spark excels at accelerating complex data ingestion and transformation tasks, making it a cornerstone of modern Extract, Transform, Load (ETL) pipelines. Its DataFrame and Dataset APIs make it straightforward to build efficient pipelines that combine diverse batch and real-time streaming data sources. Spark SQL, another key component, lets developers query structured data using SQL and, through JDBC/ODBC connectivity, integrates with existing data warehousing tools and business intelligence applications.
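A typical ETL step reads raw records, drops or repairs bad rows, and aggregates the result. The sketch below expresses that logic in plain Python so it runs anywhere; the sample data, column names, and function names are invented for illustration. In a Spark job the same steps would typically map onto `spark.read.csv(..., header=True)`, DataFrame `filter`/`withColumn` calls, and `groupBy(...).agg(...)`, executed in parallel across partitions.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw input with a bad amount, inconsistent casing, and a missing key
RAW = """order_id,region,amount
1,EU,100.50
2,us,20
3,EU,not_a_number
4,US,79.5
5,,10
"""


def extract(text):
    """Extract: parse CSV text into dict rows (all values are strings at this point)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize the key, cast types, and drop rows that cannot be repaired."""
    clean = []
    for row in rows:
        region = row["region"].strip().upper()
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        if not region:
            continue  # drop rows missing the grouping key
        clean.append({"order_id": int(row["order_id"]), "region": region, "amount": amount})
    return clean


def load(rows):
    """Load: aggregate into the target shape (here, total amount per region)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


result = load(transform(extract(RAW)))  # {'EU': 100.5, 'US': 99.5}
```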

Spark Structured Streaming is particularly valuable in dynamic, rapidly changing data environments. It extends Spark’s batch processing model to real-time data streams by treating incoming data as a continuously appended, unbounded table. This unified API simplifies the development of stream processing applications, enabling complex transformations, aggregations, and joins on streaming data with end-to-end exactly-once guarantees when paired with replayable sources and idempotent or transactional sinks, which is crucial for critical business operations.
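The “continuously appended table” model, and why exactly-once processing depends on committing progress and state together, can be illustrated with a toy micro-batch engine. `ToyStreamingQuery` is hypothetical, and the Python list stands in for a replayable source such as a Kafka topic. Real Structured Streaming persists offsets and state to the directory given by the `checkpointLocation` option; this sketch only keeps them in memory.

```python
from collections import Counter


class ToyStreamingQuery:
    """Toy micro-batch engine: treats a replayable log as an unbounded table and
    maintains a running count per key. Hypothetical; not Spark's API."""

    def __init__(self, log):
        self.log = log               # replayable source (here: a list that keeps growing)
        self.committed_offset = 0    # "checkpointed" position in the log
        self.state = Counter()       # "checkpointed" aggregation state

    def trigger(self, fail_before_commit=False):
        end = len(self.log)
        batch = self.log[self.committed_offset:end]   # rows appended since the last commit
        new_state = self.state + Counter(batch)       # compute on a copy, not in place
        if fail_before_commit:
            return None  # simulated crash: nothing committed, so the batch is replayed later
        # Commit state and offset together so every row is counted exactly once
        self.state, self.committed_offset = new_state, end
        return dict(self.state)


events = ["click", "view"]              # the "unbounded table" so far
query = ToyStreamingQuery(events)
query.trigger()                         # {'click': 1, 'view': 1}
events.extend(["click", "click"])       # new rows arrive
query.trigger(fail_before_commit=True)  # crash: no commit, offset stays at 2
query.trigger()                         # batch replayed once: {'click': 3, 'view': 1}
```

Because the failed trigger committed nothing, the replay counts the two new clicks once rather than twice.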

Advanced Analytics and Machine Learning with MLlib and GraphX

Powering advanced analytics and machine learning applications is where Spark truly shines. Its primary machine learning integration is MLlib, Spark’s built-in machine learning library. MLlib offers a comprehensive suite of scalable machine learning algorithms that can be applied to very large datasets, encompassing classification, regression, clustering, collaborative filtering, dimensionality reduction, and optimization primitives. This allows data scientists to build predictive models, discover patterns, and generate insights from massive data volumes without needing to sample data or move it to specialized environments.
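Many of MLlib’s algorithms scale by having each partition compute a partial statistic (such as a gradient) that the driver then combines. The sketch below applies that pattern to batch gradient descent for simple linear regression in plain Python; the function names, data, learning rate, and iteration count are illustrative assumptions. MLlib’s own `pyspark.ml.regression.LinearRegression` uses more sophisticated solvers, so treat this as a model of the data-parallel idea, not of MLlib’s code.

```python
def partition_gradient(part, w, b):
    """Runs on each partition: partial gradient sums of the squared error, plus the row count."""
    gw = gb = 0.0
    for x, y in part:
        err = (w * x + b) - y
        gw += err * x
        gb += err
    return gw, gb, len(part)


def train_linear_regression(partitions, lr=0.1, iterations=1000):
    """Full-batch gradient descent on mean squared error for y = w * x + b."""
    w = b = 0.0
    for _ in range(iterations):
        # "Map" step: every partition computes its contribution independently
        partials = [partition_gradient(p, w, b) for p in partitions]
        # "Reduce" step on the driver: combine partial sums into the full gradient
        gw = sum(p[0] for p in partials)
        gb = sum(p[1] for p in partials)
        n = sum(p[2] for p in partials)
        w -= lr * 2 * gw / n
        b -= lr * 2 * gb / n
    return w, b


# Synthetic data on the line y = 3x + 1, split into three "partitions"
data = [(i / 10, 3 * (i / 10) + 1) for i in range(20)]
partitions = [data[0:7], data[7:14], data[14:20]]
w, b = train_linear_regression(partitions)  # converges close to w = 3, b = 1
```

Because the partial sums add up to the full-data gradient, the result does not depend on how the rows are partitioned.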

Beyond traditional machine learning, Spark’s GraphX library supports graph-parallel computation, which is vital for network analysis, social graph applications, recommendation systems, and fraud detection. Analysts also appreciate Spark’s interactive capabilities (often via Databricks or Jupyter notebooks) for rapid data exploration, iterative model development, and feature engineering, which significantly speed up the data science workflow.
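GraphX expresses graph algorithms as vertices exchanging messages along edges in rounds (a Pregel-style model), and PageRank is the classic example. Below is a minimal pure-Python sketch of that message-passing loop; the `pagerank` function and the example graphs are illustrative, and for simplicity it does not redistribute rank from vertices that have no outgoing edges. GraphX itself has no PySpark API; Python users commonly turn to the separate GraphFrames package instead.

```python
def pagerank(edges, num_iters=50, damping=0.85):
    """Pregel-style PageRank over a list of (src, dst) edges."""
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)
    ranks = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(num_iters):
        # Message phase: each vertex sends rank / out_degree along each outgoing edge
        incoming = {n: 0.0 for n in nodes}
        for src, dsts in out_links.items():
            for dst in dsts:
                incoming[dst] += ranks[src] / len(dsts)
        # Update phase: each vertex combines its messages into a new rank
        ranks = {n: (1 - damping) / len(nodes) + damping * incoming[n] for n in nodes}
    return ranks


# B and C both link to A, and A links back to B, so A should rank highest
ranks = pagerank([("B", "A"), ("C", "A"), ("A", "B")])
```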

Challenges and Barriers to Adoption

Despite its undeniable power, applying Spark to large-scale data processing comes with its own set of challenges. One of the primary barriers is the **Operational Complexity** involved in managing Spark clusters. Configuring Spark for optimal performance requires deep expertise in memory management, parallelism settings, and choosing the right cluster manager (Spark standalone, YARN, or Kubernetes; Mesos support has been deprecated since Spark 3.2) and deploy mode (client or cluster). Incorrect configurations can lead to inefficient resource utilization, slow job execution, or even crashed executors and failed jobs.
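Much of this configuration expertise comes down to executor sizing arithmetic. The helper below encodes one widely used rule of thumb (about five cores per executor, one core and 1 GiB per node reserved for the OS and node daemons, one executor slot left for the driver or YARN ApplicationMaster); these numbers are assumptions to tune, not official Spark defaults. The one default it relies on is that, on YARN and Kubernetes, Spark adds off-heap overhead of `max(384 MiB, 0.10 * executor memory)` to each executor container (the factor is higher for non-JVM jobs on Kubernetes).

```python
import math


def size_executors(num_nodes, cores_per_node, mem_gib_per_node,
                   cores_per_executor=5, overhead_factor=0.10, min_overhead_mib=384):
    """Rule-of-thumb executor sizing (an assumption, not an official Spark formula).
    Reserves 1 core and 1 GiB per node for the OS and node daemons."""
    executors_per_node = (cores_per_node - 1) // cores_per_executor
    if executors_per_node < 1:
        raise ValueError("not enough cores per node for the requested executor size")
    container_mib = (mem_gib_per_node - 1) * 1024 / executors_per_node
    # Each container holds the JVM heap plus overhead = max(min_overhead, factor * heap).
    # Choose the largest heap that still fits inside the container.
    heap_mib = container_mib / (1 + overhead_factor)
    if heap_mib * overhead_factor < min_overhead_mib:
        heap_mib = container_mib - min_overhead_mib
    heap_gib = math.floor(heap_mib / 1024)
    return {
        # Leave one executor slot for the driver / YARN ApplicationMaster
        "spark.executor.instances": executors_per_node * num_nodes - 1,
        "spark.executor.cores": cores_per_executor,
        "spark.executor.memory": f"{heap_gib}g",
    }


conf = size_executors(num_nodes=10, cores_per_node=16, mem_gib_per_node=64)
```

For ten 16-core, 64 GiB nodes this yields 29 executors with 5 cores and 19 GiB of heap each. The returned keys are real Spark configuration properties that could be passed via `--conf` or `SparkSession.builder.config`.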

Another significant hurdle is **Debugging Distributed Systems**. Tracing errors across numerous nodes and understanding the root cause of failures in a complex Spark job can be daunting. Performance tuning, especially identifying bottlenecks related to data shuffling, garbage collection, or skewed data partitions, demands specialized skills and tools.
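Skewed partitions are often addressed with key salting: a hot key is split into several sub-keys so its records spread across tasks, partial results are computed per sub-key, and a second step merges them. The toy below shows the idea for a count in plain Python; the data, the function names, and the choice of salting by record index are illustrative assumptions. For skewed joins, Spark 3’s Adaptive Query Execution can also split oversized partitions automatically when `spark.sql.adaptive.skewJoin.enabled` is on.

```python
from collections import Counter


def largest_group(keys):
    """Size of the biggest key group: a proxy for the slowest task after a shuffle."""
    return max(Counter(keys).values())


def salt_keys(records, hot_keys, num_salts):
    """Stage 1: attach a salt to hot keys so one huge group becomes num_salts smaller ones."""
    return [(k, i % num_salts) if k in hot_keys else (k, 0) for i, k in enumerate(records)]


def salted_count(records, hot_keys, num_salts):
    """Count per key via per-(key, salt) partial counts, then merge them."""
    partial = Counter(salt_keys(records, hot_keys, num_salts))
    final = Counter()
    for (key, _salt), count in partial.items():  # Stage 2: drop the salt and merge
        final[key] += count
    return dict(final)


# One "hot" key dominates the dataset
records = ["hot"] * 1000 + ["a", "b", "c"] * 10
before = largest_group(records)                            # 1000
after = largest_group(salt_keys(records, {"hot"}, 8))      # 125
counts = salted_count(records, {"hot"}, 8)                 # same totals as an unsalted count
```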

**Data Governance and Security** are critical in large-scale environments. While Spark itself provides some security features, integrating it seamlessly into an enterprise’s broader governance framework can be complex. Organizations often need to rely on external governance tools such as Unity Catalog or Apache Ranger to enforce access controls, manage metadata, and ensure data lineage across Spark applications. The skill gap in the market for individuals proficient in Spark development, operations, and optimization further contributes to adoption challenges, leading to higher recruitment costs and slower project timelines.

Business Value and ROI of Spark

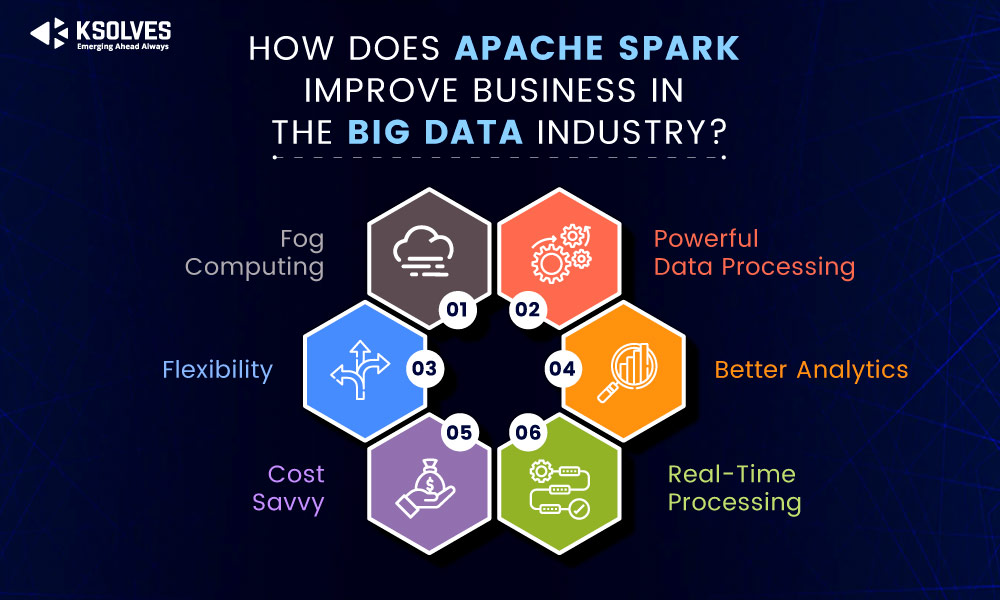

The return on investment (ROI) from applying Spark to large-scale data processing is substantial and multifaceted. Organizations leveraging Spark experience significantly **Faster Time-to-Insight**. Its rapid data processing capabilities allow businesses to analyze vast quantities of data in near real-time, enabling quicker responses to market changes, customer behavior, and operational anomalies. This speed translates directly into more agile business operations and improved decision-making processes.

Spark’s ability to unify various data workloads on a single platform reduces infrastructure complexity and operational overhead. Instead of maintaining separate systems for batch, stream, and machine learning, organizations can consolidate their data processing, leading to **Reduced Operational Costs** in the long run. By enabling advanced analytics and machine learning at scale, Spark fuels **Innovation and New Product Development**. Companies can build sophisticated recommendation engines, predictive maintenance systems, fraud detection models, and personalized customer experiences that would be impractical or impossible with traditional tools.

Ultimately, large-scale data processing with Spark can provide a distinct **Competitive Advantage**. Businesses that can extract more value from their data, faster and more comprehensively, are better positioned to outmaneuver competitors, identify new revenue streams, and enhance customer satisfaction.

Comparative Insight: Spark vs. Traditional Data Processing Paradigms

To fully appreciate Spark’s impact, it’s essential to understand its position relative to established data processing technologies such as traditional data lakes and data warehouses, and older distributed computing frameworks.

Spark vs. Traditional Data Warehouses

Traditional data warehouses are highly optimized for structured data: they run complex SQL queries against predefined schemas and are built for business intelligence reporting. They excel at ACID (Atomicity, Consistency, Isolation, Durability) transactions and at ensuring data integrity. However, they struggle with semi-structured and unstructured data, real-time processing, and the iterative nature of machine learning workloads. Spark, with its schema-on-read capabilities and support for diverse data formats (JSON, Parquet, ORC, CSV, Avro), offers greater flexibility. For some large batch workloads, Spark can deliver in minutes results that a traditional warehouse might take hours to produce, and, crucially, it extends this capability to real-time data and ML models.
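Schema-on-read means the structure is discovered from the data when it is read instead of being declared up front. The sketch below loosely mimics how Spark infers a schema from JSON records: it scans every record, unions the field names, and widens conflicting types (integer with floating point becomes double; other conflicts fall back to string). It is a simplified, hypothetical model that handles only flat records, not Spark’s actual inference code.

```python
import json


def value_type(v):
    """Map a parsed JSON value to a Spark-like type name."""
    if v is None:
        return "null"
    if isinstance(v, bool):  # check bool before int: bool is a subclass of int
        return "boolean"
    if isinstance(v, int):
        return "long"
    if isinstance(v, float):
        return "double"
    return "string"  # toy simplification: strings, nested objects, and arrays


def merge_types(a, b):
    """Widen two observed types into one compatible type."""
    if a == b:
        return a
    if a == "null":
        return b
    if b == "null":
        return a
    if {a, b} == {"long", "double"}:
        return "double"
    return "string"  # any other conflict falls back to string


def infer_schema(lines):
    """Union of all fields seen across records, with widened types (all fields nullable)."""
    schema = {}
    for line in lines:
        for field, value in json.loads(line).items():
            schema[field] = merge_types(schema.get(field, "null"), value_type(value))
    return schema


lines = [
    '{"id": 1, "price": 10, "tag": "a"}',
    '{"id": 2, "price": 12.5}',
    '{"id": 3, "price": null, "tag": "b", "vip": true}',
]
schema = infer_schema(lines)  # {'id': 'long', 'price': 'double', 'tag': 'string', 'vip': 'boolean'}
```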

Spark vs. Traditional Data Lakes

Data lakes store vast amounts of raw data in its native format, often on distributed file systems like HDFS or object storage like Amazon S3. They offer flexible storage but have no processing capabilities of their own, so analysis requires a separate compute engine. Spark integrates seamlessly with data lakes, turning them from mere storage repositories into active analytical platforms: it provides the processing power to cleanse, transform, and analyze the raw data in place, pairing the lake’s storage with a general-purpose compute engine.
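One way Spark makes raw lake data practical to analyze is partition discovery: directories named like `year=2024/month=01` become partition columns, and filters on those columns let Spark skip whole directories (partition pruning). The toy functions below illustrate the pruning logic in plain Python; the S3 paths are made up, and unlike Spark, which can infer partition column types, this sketch compares values as strings.

```python
def parse_partition_values(path):
    """Extract key=value directory segments, ignoring the file name at the end."""
    values = {}
    for segment in path.rstrip("/").split("/")[:-1]:
        if "=" in segment:
            key, _, value = segment.partition("=")
            values[key] = value
    return values


def prune(paths, **filters):
    """Keep only files whose partition directories match every filter; the rest are never read."""
    return [p for p in paths
            if all(parse_partition_values(p).get(k) == v for k, v in filters.items())]


# Hypothetical lake layout
paths = [
    "s3://lake/events/year=2024/month=01/part-0000.parquet",
    "s3://lake/events/year=2024/month=02/part-0000.parquet",
    "s3://lake/events/year=2023/month=12/part-0000.parquet",
]
only_2024 = prune(paths, year="2024")  # the first two files
```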

Spark vs. Hadoop MapReduce

Hadoop MapReduce was a groundbreaking innovation for distributed batch processing, but Spark represents a significant evolution. MapReduce’s disk-intensive, two-stage (map and reduce) model spills map output to local disk and writes each job’s output to HDFS, so multi-step pipelines incur substantial I/O overhead between jobs. Spark instead plans execution as a Directed Acyclic Graph (DAG) of operations, chains consecutive transformations within a stage, and can keep intermediate data in memory, avoiding redundant disk writes. As a result, Spark is often cited as 10 to 100 times faster than MapReduce, especially for iterative workloads, although actual speedups vary with the workload. Furthermore, Spark’s unified API for batch, streaming, SQL, ML, and graph processing is far more versatile than MapReduce’s batch-only paradigm, greatly simplifying development and reducing context switching.
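The difference between materializing every step and pipelining a DAG can be modeled in a few lines of plain Python. In the sketch below, `run_mapreduce_style` writes out the full result of each stage before the next begins, while `run_pipelined` chains generators so each record flows through all stages in one pass, as Spark does for narrow transformations within a single stage. Both function names are hypothetical, and the model ignores shuffles, which in Spark still write data to local disk at stage boundaries.

```python
def _apply(stage, records):
    """Lazily apply one flatMap-style stage to a stream of records."""
    for record in records:
        yield from stage(record)


def run_mapreduce_style(data, stages):
    """Each stage runs as a separate job whose full output is materialized before the next starts."""
    materialized = 0
    current = list(data)
    for stage in stages:
        current = list(_apply(stage, current))  # whole intermediate dataset written out
        materialized += 1
    return current, materialized


def run_pipelined(data, stages):
    """Narrow stages are chained into one pass; only the final result is materialized."""
    stream = iter(data)
    for stage in stages:
        stream = _apply(stage, stream)  # binds `stage` now, avoiding late-binding bugs
    return list(stream), 1


stages = [
    lambda line: line.split(),                     # flatMap: lines -> words
    lambda word: [word] if len(word) > 3 else [],  # filter: drop short words
    lambda word: [word.lower()],                   # map: normalize case
]
lines = ["Spark chains narrow transformations", "into a single stage"]
mr_words, mr_writes = run_mapreduce_style(lines, stages)
pl_words, pl_writes = run_pipelined(lines, stages)
```

Both approaches return the same words, but the MapReduce-style run materializes the output of all three stages, while the pipelined run materializes only the final result.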

Alternatives such as Apache Flink and Dask offer powerful capabilities in specific areas (Flink for true low-latency stream processing, Dask for Pythonic parallel computing), but Spark’s broad ecosystem and established enterprise adoption make it a highly competitive and often preferred choice for diverse, large-scale data processing needs.

World2Data Verdict: The Enduring Relevance of Spark

Apache Spark has cemented its position as an indispensable technology for organizations embarking on large-scale data processing initiatives. Its unique combination of distributed computing, in-memory processing, and a unified API for diverse workloads makes it an unparalleled choice for tackling the challenges of modern data. From accelerating ETL pipelines and powering real-time analytics to enabling advanced machine learning and AI applications, Spark provides the foundational infrastructure necessary for data-driven innovation.

For businesses seeking to maximize their data assets, World2Data.com recommends a strategic adoption of Spark, emphasizing robust architectural planning, continuous performance tuning, and investment in developer expertise. The ongoing evolution of the Spark ecosystem, including Adaptive Query Execution (enabled by default since Spark 3.2) and deeper integration with cloud-native services, ensures its continued relevance and capability to meet future demands. Organizations committed to leveraging the full potential of their data will find applying Spark to large-scale data processing not just beneficial, but critical for maintaining a competitive edge in today’s data-intensive economy. Its role in shaping the future of data analytics and artificial intelligence remains central.