Anomaly Detection: Identifying Unusual Patterns with AI

AI-powered Anomaly Detection is changing how organizations protect their assets, optimize operations, and draw insight from complex data streams. In a data-rich, interconnected world, accurately distinguishing normal operational behavior from critical deviations is a strategic necessity rather than a mere advantage. At its core, Anomaly Detection means pinpointing data points, events, or observations that diverge significantly from the expected norm. When powered by artificial intelligence, the process goes beyond static rules and can uncover subtle, sophisticated irregularities that traditional methods or human observation might overlook, offering actionable insights across many industry sectors.

The integration of artificial intelligence dramatically elevates the capabilities of conventional Anomaly Detection. AI-driven systems possess the unique ability to learn intricate patterns and relationships from vast historical datasets, thereby constructing a comprehensive, dynamic understanding of what constitutes ‘normal’ behavior. These systems leverage a diverse array of machine learning algorithms—ranging from robust statistical methods and sophisticated clustering techniques to advanced deep learning architectures specifically designed for time series analysis. This algorithmic versatility enables the detection of minute deviations that can signal everything from burgeoning fraud schemes and impending system failures to critical security breaches or emerging operational inefficiencies. Crucially, the continuous learning paradigm intrinsic to AI allows these systems to adapt and evolve alongside changing ‘normal’ patterns, ensuring sustained effectiveness and resilience in dynamic environments.

The transformative practical applications of AI-powered Anomaly Detection are expansive and pervasive across numerous sectors. In the financial industry, AI systems can process millions of transactions in real-time, swiftly flagging fraudulent activities and thereby protecting both consumers and financial institutions from significant losses. For critical IT operations, AI proactively identifies unusual network traffic, server performance anomalies, or unexpected resource consumption, preventing costly outages and bolstering cybersecurity defenses against sophisticated threats. Healthcare benefits immensely, as AI can pinpoint unusual patient data, identify adverse drug interactions, or detect early signs of disease progression, significantly enhancing patient safety, diagnostic accuracy, and treatment efficacy. Furthermore, in manufacturing and industrial IoT, this technology is invaluable for predictive maintenance, detecting equipment malfunctions before they escalate into major breakdowns, thereby optimizing production lines, ensuring product quality, and maximizing operational uptime.

Despite its profound capabilities, implementing and operationalizing effective AI-driven Anomaly Detection presents a distinct set of challenges. A fundamental requirement is a substantial volume of high-quality, representative training data, which is hard to assemble because true anomalies are inherently rare. Moreover, ensuring model interpretability (understanding precisely *why* a particular anomaly was flagged) is often complex but crucial for fostering trust, enabling effective root cause analysis, and meeting regulatory compliance mandates. Striking the balance between detecting genuine anomalies (high sensitivity) and limiting false positives (high specificity) remains an ongoing, iterative effort that demands careful fine-tuning, continuous monitoring, and expert human oversight.
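To make the sensitivity and specificity trade-off concrete, here is a minimal sketch of how these rates are computed from a detector's confusion counts. The counts are hypothetical, chosen only to illustrate how rare anomalies affect the share of alerts that are genuine; the function name `detection_metrics` is likewise an illustrative choice.

```python
def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return recall (sensitivity), specificity, and precision from confusion counts."""
    return {
        # Share of true anomalies that were caught.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Share of normal events that were correctly left alone.
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        # Share of raised alerts that were real anomalies.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


# Hypothetical scenario: 1,000,000 events, of which 100 are true anomalies.
metrics = detection_metrics(tp=90, fp=1_000, tn=998_900, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In this made-up scenario the detector catches 90% of anomalies and misclassifies only about 0.1% of normal events, yet fewer than one in ten alerts is genuine, because normal events vastly outnumber anomalies.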

The future trajectory of Anomaly Detection points toward even greater sophistication, driven by advances in explainable AI (XAI), federated learning, and lower-latency stream processing. As global data volumes continue to grow and threats become more intricate and evasive, the demand for intelligent systems capable of identifying and contextualizing unusual patterns will only intensify. Integrating Anomaly Detection with other AI disciplines, such as natural language processing for unstructured log data or computer vision for visual inspection, promises more predictive insights and more proactive defense mechanisms for both digital and physical infrastructure. This evolution underscores that adopting advanced AI for anomaly detection is becoming a necessity, not merely a competitive advantage, for maintaining operational integrity, bolstering security, and fostering resilience in a complex, interconnected landscape.

Quick Insights: Anomaly Detection with AI

- Platform Category: A critical capability integrated into Data Analytics Platforms, Observability Tools, Cybersecurity Solutions, and specialized AI/ML services.

- Core Technology/Architecture: Relies on Machine Learning algorithms (e.g., statistical methods, clustering, deep learning for time series), real-time data streaming and processing, and scalable cloud-based architectures.

- Key Data Governance Feature: Includes controlled access to anomaly alerts and underlying data, data lineage tracking for anomaly root cause analysis, data quality monitoring to prevent false positives, and audit trails of detection actions.

- Primary AI/ML Integration: Leverages built-in and customizable ML models to establish baselines and identify deviations, supports automated model training and retraining, and integrates with MLOps pipelines for model deployment and management.

- Main Competitors/Alternatives: Cloud AI/ML services (e.g., Amazon SageMaker, Azure Machine Learning, Google Cloud Vertex AI, formerly AI Platform), dedicated observability platforms (e.g., Datadog, Splunk, Dynatrace), specialized security analytics tools, and open-source ML libraries.

Introduction to AI-Powered Anomaly Detection

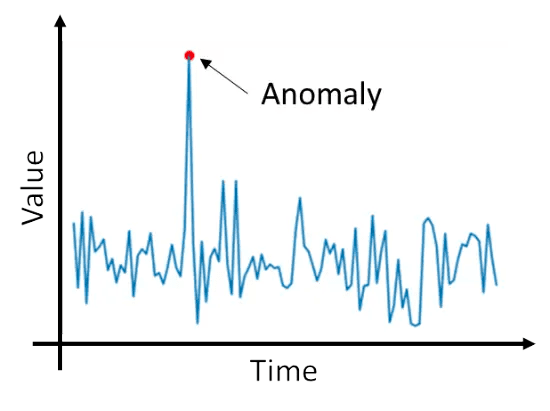

In an era increasingly defined by an exponential surge in data generation from myriad sources—ranging from IoT sensors and financial transactions to network logs and medical devices—the ability to swiftly and accurately discern the normal from the abnormal is no longer merely advantageous; it has become an existential imperative for operational continuity, robust security, and agile strategic decision-making. Anomaly Detection, fundamentally defined as the process of identifying data points, events, or observations that deviate significantly from expected behavior, has undergone a profound transformation. What once relied on rudimentary rule-based systems and static thresholds has evolved into sophisticated, AI-driven platforms capable of unveiling subtle, complex, and previously undetectable patterns of irregularity. These patterns can signify anything from nascent cyber threats and sophisticated fraudulent activities to critical equipment malfunctions and early health anomalies, all of which demand immediate attention and informed action.

At World2Data.com, we firmly believe that the true intelligence embedded within modern data platforms is demonstrated by their capacity to not just store and efficiently process vast quantities of information, but to autonomously extract profound, actionable insights from it. AI-powered Anomaly Detection perfectly embodies this principle, moving far beyond simple statistical outliers to comprehend the intricate context, dynamic relationships, and temporal dependencies within massive datasets. This comprehensive deep dive will meticulously explore the fundamental architectural underpinnings, the sophisticated core components, the profound and quantifiable business value, and the persistent operational and technical challenges intrinsically associated with implementing cutting-edge artificial intelligence for identifying unusual patterns. Our overarching objective is to provide a granular, holistic understanding of why integrating AI into anomaly detection workflows represents not just an incremental upgrade, but a pivotal paradigm shift essential for modern, data-driven organizations striving for resilience and innovation.

Core Breakdown: The Architecture and Mechanics of AI-Driven Anomaly Detection

The unparalleled efficacy of contemporary AI-driven Anomaly Detection systems is predicated upon a robust, modular, and multi-layered architecture. This architecture seamlessly integrates advanced machine learning capabilities with highly scalable data processing pipelines. These sophisticated platforms are engineered to ingest, meticulously process, and intelligently analyze vast quantities of both streaming and historical data, operating either in real-time or near real-time. Crucially, they learn continuously, perpetually refining their understanding of ‘normal’ behavior patterns as data landscapes evolve, thereby enhancing their precision and adaptiveness.
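As a rough illustration of the "learn continuously" idea, the sketch below keeps a running mean and variance (Welford's online algorithm) and scores each new value against that evolving baseline. It is a deliberately simple stand-in for the ML models such platforms use; the class name, the threshold of 3 standard deviations, and the warm-up length are all assumptions made for the example.

```python
import math


class StreamingZScoreDetector:
    """Flags values far from a running mean, updating the baseline as data arrives."""

    def __init__(self, threshold: float = 3.0, warmup: int = 30):
        self.threshold = threshold  # z-score above which a value is flagged
        self.warmup = warmup        # observations to collect before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations (Welford)

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then fold it into the baseline."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal-looking points update the baseline, so outliers do not skew it.
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return is_anomaly


# Hypothetical sensor stream: steady readings followed by one large spike.
detector = StreamingZScoreDetector()
readings = [50.0 + (i % 5) for i in range(100)] + [95.0]
flags = [detector.update(r) for r in readings]
print(flags[-1], sum(flags))
```

One design choice worth noting: excluding flagged points keeps the baseline clean, but it also means this sketch cannot follow a genuine, permanent level shift. A production system would need a separate mechanism, such as periodic retraining, for that case.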

Key Components and Technologies

- High-Volume Data Ingestion and Preprocessing: This foundational layer is responsible for the ubiquitous collection of raw data from an incredibly diverse array of sources. This includes, but is not limited to, IoT sensors, network devices, application logs, financial transaction records, user behavior telemetry, and various forms of time series data. Ingested data, often arriving at extremely high velocity and volume, then undergoes rigorous cleaning, comprehensive normalization, and meticulous feature engineering. This transforms raw, disparate inputs into meaningful, actionable features that are optimally suited for consumption by AI models. Common preprocessing techniques encompass aggregation, resampling, contextual enrichment, and various statistical transformations designed to highlight relevant characteristics indicative of normal or anomalous states.

- Centralized Feature Store: A pivotal component within any robust AI/ML platform, a feature store serves to standardize, centralize, and manage features utilized across multiple machine learning models. For Anomaly Detection, this component is invaluable as it ensures consistency, reusability, and discoverability of expertly engineered features across different anomaly detection models, significantly accelerating model development cycles and markedly improving overall model performance and reliability. It facilitates rapid, low-latency access to features crucial for real-time inference and simultaneously acts as a meticulously maintained historical repository for high-quality training data, ensuring model reproducibility and auditability.

- Advanced Machine Learning Algorithms: This constitutes the intellectual core of the system. A comprehensive suite of diverse ML techniques is employed, chosen strategically based on the nature of the data and the type of anomaly being sought:

- Statistical Methods: For scenarios where anomalies can be characterized by simple deviations from a known distribution, methods such as Z-score, Interquartile Range (IQR), or Gaussian distribution models are effective in identifying univariate or simple multivariate outliers.

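- Clustering- and Isolation-based Methods: Clustering algorithms like K-Means or DBSCAN are adept at identifying data points that are spatially isolated, do not naturally belong to any significant cluster, or form very small, distinct clusters indicative of anomalous behavior. Isolation Forest, a tree-based ensemble rather than a clustering algorithm, takes a complementary approach: it flags points that can be separated from the rest of the data with unusually few random splits.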

- Classification-based Methods: Because anomalies are often unknown or poorly represented in training data, one-class techniques such as One-Class SVM or deep one-class frameworks learn the boundary of the normal data distribution and flag any observation falling outside it as anomalous.

- Deep Learning for Time Series Data: Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Gated Recurrent Units (GRUs), and the more recent Transformer architectures are exceptionally powerful for detecting contextual and collective anomalies in sequential data. They excel by learning complex temporal dependencies, predicting future values, and identifying deviations from these predictions. Autoencoders are also widely deployed, learning a compressed, low-dimensional representation of normal data, with high reconstruction errors indicating an anomaly.

- Automated Model Training and Lifecycle Management (MLOps): AI-powered Anomaly Detection platforms necessitate continuous model training, frequent retraining, and adaptive recalibration to effectively respond to evolving data patterns, seasonal variations, and concept drift. Robust MLOps pipelines automate this entire lifecycle, encompassing model versioning, seamless deployment, real-time performance monitoring, and rigorous evaluation. This automation ensures that anomaly detection models remain highly effective, accurate, and are seamlessly updated in production environments with minimal manual intervention.

- Real-time Inference and Sophisticated Alerting: Post-training, the deployed models continuously process incoming data streams, performing rapid inference to classify new observations as either normal or anomalous. Sophisticated, tiered alerting systems then automatically trigger notifications, escalating critical insights to human operators, security teams, or other automated remediation systems for immediate and decisive response.

- Feedback Loops and Explainability (XAI): To continually minimize false positives, enhance precision, and foster user trust, integrating human-in-the-loop feedback mechanisms for flagged anomalies is paramount. Furthermore, incorporating explainable AI (XAI) techniques provides analysts with critical insights into why a particular anomaly was detected, demystifying complex model decisions, facilitating thorough root cause analysis, and enabling more effective remediation strategies.
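The statistical methods named in the list above are the simplest to show in code. The following is a minimal sketch of Z-score and IQR outlier checks using only Python's standard library; the function names, the cutoffs (3 standard deviations, 1.5 times the IQR), and the latency values are illustrative assumptions rather than recommended settings.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Return values whose distance from the mean exceeds `threshold` standard deviations."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical, nothing can be an outlier
    return [v for v in values if abs(v - mean) / std > threshold]


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical request latencies in milliseconds, with one obvious spike.
latencies_ms = [12, 14, 13, 15, 14, 13, 12, 16, 15, 14, 13, 14, 13, 15, 12, 250]
print(zscore_outliers(latencies_ms))
print(iqr_outliers(latencies_ms))
```

A single extreme value inflates the mean and standard deviation it is scored against, which is one reason the IQR rule is often preferred on small samples.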

Challenges and Barriers to Adoption

Despite the immense promise and transformative potential of AI-driven Anomaly Detection, its successful implementation and scalable deployment are often fraught with several significant and intricate challenges:

- Data Scarcity and Extreme Imbalance: By their very definition, anomalies represent rare occurrences. This inherent rarity makes acquiring sufficient quantities of labeled anomalous data for supervised learning paradigms exceptionally difficult. While unsupervised and semi-supervised methods offer viable alternatives, they still necessitate comprehensive, representative datasets of “normal” behavior.

- Dynamic Definition of ‘Normal’: What constitutes normal behavior is rarely static; it is inherently dynamic, context-dependent, and subject to continuous evolution. Systems must adeptly adapt to seasonality, long-term trends, sudden shifts, and gradual changes in underlying operational patterns (known as concept drift), which can rapidly render static or infrequently updated models obsolete.

- Pervasive High False Positive Rates: Overly sensitive anomaly detection models may catch nearly every true anomaly, but they can deluge operators with an unsustainable volume of false alarms. This “alert fatigue” can swiftly erode trust in the system and lead to critical warnings being overlooked. Balancing high sensitivity (recall) against high precision, meaning that most raised alerts are genuine, remains a continuous, intricate tuning challenge. Because anomalies are rare, even a model with high specificity can produce mostly false alerts.

- Model Interpretability and Explainability Deficit: For many complex AI models, particularly deep learning architectures, understanding the precise, underlying reasons why an anomaly was flagged can be opaque. This lack of transparency, a challenge commonly referred to as the “black box problem,” can severely hinder effective root cause analysis, impede decision-making, and complicate adherence to stringent regulatory compliance requirements.

- Scalability and Real-time Processing Demands: Detecting anomalies within extremely high-velocity, high-volume data streams—such as gigabytes per second of network traffic or millions of IoT sensor readings—demands exceptionally scalable, fault-tolerant, and ultra-low-latency processing infrastructure. Building and maintaining such an infrastructure is both technologically challenging and often financially intensive.

- Operational Complexity (MLOps Overhead): The comprehensive process of deploying, meticulously monitoring, rigorously maintaining, and continually updating numerous disparate ML models for various data sources, asset types, and anomaly characteristics within a coherent MLOps framework requires significant specialized expertise, robust automation tooling, and substantial ongoing operational investment.
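The concept drift challenge described above is often handled by comparing recent data against the data a model was trained on and triggering retraining when they diverge. The sketch below uses a deliberately simple check, the shift of the recent mean measured in standard errors; real systems typically rely on dedicated tests such as Kolmogorov-Smirnov or the population stability index. The function name, the cutoff of 3, and the sample data are assumptions for illustration.

```python
import statistics


def drift_detected(reference, recent, max_shift=3.0):
    """Return True if the recent window's mean has moved more than `max_shift`
    standard errors away from the reference window's mean."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    recent_mean = statistics.fmean(recent)
    if ref_std == 0:
        return recent_mean != ref_mean
    standard_error = ref_std / len(recent) ** 0.5
    return abs(recent_mean - ref_mean) / standard_error > max_shift


# Hypothetical daily transaction volumes: the training window, a stable week,
# and a period after the typical level has moved upward.
reference = [100 + (i % 7) for i in range(70)]
recent_stable = [100 + (i % 7) for i in range(14)]
recent_shifted = [110 + (i % 7) for i in range(14)]

print(drift_detected(reference, recent_stable))
print(drift_detected(reference, recent_shifted))
```

In an MLOps pipeline, a positive result from a check like this would typically schedule retraining rather than raise an anomaly alert, since the change reflects a new normal and not an isolated deviation.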

Business Value and ROI of Advanced Anomaly Detection

The strategic and often substantial investment in AI-driven Anomaly Detection can yield significant, quantifiable returns across a wide range of business functions and industry verticals:

- Enhanced Cybersecurity: Helps identify advanced persistent threats (APTs), insider threats, exploitation of zero-day vulnerabilities, sophisticated malware, and unusual network behaviors that can bypass traditional signature-based detection mechanisms, thereby reducing the risk and potential impact of data breaches and cyberattacks.

- Robust Fraud Detection and Prevention: Enables real-time, instantaneous analysis of financial transactions, credit card usage patterns, insurance claims, and digital identities, swiftly flagging suspicious activities. This capability prevents millions in potential financial losses, safeguards consumer trust, and protects organizational reputation.

- Revolutionized Predictive Maintenance: Continuously monitors the operational parameters of industrial machinery, transportation fleets, critical infrastructure components, and manufacturing equipment. By detecting subtle performance deviations or early indicators of impending failure, it facilitates proactive, just-in-time maintenance, minimizing costly unscheduled downtime, extending asset lifespan, and substantially reducing overall operational expenditures.

- Optimized Operational Efficiency and Observability: For IT operations teams, AI-powered anomaly detection identifies performance bottlenecks, unusual resource consumption spikes, or service disruptions well before they can impact end-users or critical business processes. In vast IoT environments, it ensures the smooth, efficient, and secure operation of myriad connected devices and complex industrial processes.

- Superior Quality Control in Manufacturing: Detects subtle defects, inconsistencies, or deviations in product quality directly on high-speed production lines. This prevents substandard goods from progressing further in the manufacturing chain or reaching the market, thereby reducing waste, minimizing recalls, and upholding brand integrity.

- Proactive Customer Experience Management: In sectors like telecommunications, e-commerce, or subscription services, AI can detect unusual usage patterns that might indicate service abuse, customer dissatisfaction, or an elevated churn risk. This empowers businesses to initiate proactive interventions, enhancing customer loyalty and satisfaction.

- Streamlined Regulatory Compliance and Audit Readiness: Provides an auditable trail of detected anomalies and the actions taken in response to them. This substantially aids in meeting stringent regulatory compliance obligations, demonstrating due diligence in risk management, and bolstering corporate governance.

- Accelerated Time to Insight and Decision-Making: Automates the inherently arduous and time-consuming task of manually sifting through colossal datasets for irregularities. This liberation allows highly skilled human experts and analysts to dedicate their invaluable time and cognitive resources to in-depth analysis, strategic planning, and rapid remediation, rather than manual detection.

Comparative Insight: AI-Powered Anomaly Detection vs. Traditional Approaches

For many years, organizations largely relied upon traditional methods for identifying anomalies within their datasets. These approaches primarily consisted of basic rule-based systems and static statistical thresholds. While these conventional techniques offered a rudimentary level of detection in simpler, less complex data landscapes, they have proven to be fundamentally inadequate and fall critically short when confronted with today’s burgeoning volumes of high-velocity, high-dimensionality, and inherently complex data environments. A clear understanding of these profound differences is essential to fully grasp the truly transformative power and strategic advantages afforded by modern AI-driven Anomaly Detection solutions.

Limitations of Traditional Data Lake/Data Warehouse Architectures and Rule-Based Systems

In traditional enterprise setups, data lakes and data warehouses typically function as centralized repositories, primarily designed for the storage, management, and basic querying of structured and unstructured data. Within this paradigm, anomaly detection typically involves:

- Static Thresholds and Fixed Baselines: This approach involves setting predefined upper or lower limits for specific metrics (e.g., flagging CPU usage if it exceeds 90%). While deceptively simple to implement, these static thresholds are notoriously prone to generating high rates of both false positives and false negatives, as normal operational behavior is rarely constant and frequently fluctuates with time, seasonality, and external factors.

- Rigid, Hard-coded Business Rules: This method relies on defining explicit, logical conditions (e.g., “If a transaction amount is greater than $X AND the transaction originates from a location outside the user’s home country, then flag it as suspicious”). Such rules necessitate constant, manual updates by human experts, struggle immensely to identify novel or previously unseen attack vectors, and critically lack the adaptability required to cope with evolving patterns of both threats and normal operations.

- Labor-intensive Manual Analysis: Many traditional approaches often default to human analysts painstakingly sifting through vast quantities of dashboards, reports, and alerts. This process is inherently time-consuming, highly error-prone, and utterly unsustainable when confronted with the immense data volumes characteristic of modern enterprise environments.

- Limited Contextual Awareness: A significant drawback of traditional methods is their tendency to analyze individual data points or events in isolation. They critically fail to grasp the broader operational context, the intricate interdependencies between variables, or the temporal relationships that are absolutely crucial for identifying subtle, sophisticated anomalies that only manifest when viewed holistically.

In essence, these traditional methods are predominantly reactive, inherently brittle, and struggle with the ‘curse of dimensionality’: as the number of features grows, the data becomes increasingly sparse and the number of feature combinations a rule set would have to cover grows exponentially. They are largely ill-equipped to detect sophisticated, multi-faceted anomalies that involve subtle, correlated deviations across numerous variables over extended periods. Furthermore, because they cannot learn autonomously or adapt dynamically, they are largely ineffective against continuously evolving threats, dynamic system behaviors, or shifting operational norms.
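The weakness of a static threshold can be shown with the CPU example mentioned above. The sketch below contrasts a fixed 90% limit with a baseline learned per hour of day; the history values, function names, and the minimum standard deviation of 1.0 are hypothetical choices for illustration.

```python
import statistics


def static_threshold_flag(cpu_percent, limit=90.0):
    """Traditional rule: flag anything above a fixed limit."""
    return cpu_percent > limit


def build_hourly_baseline(history):
    """history: iterable of (hour, cpu_percent). Returns {hour: (mean, std)}."""
    by_hour = {}
    for hour, value in history:
        by_hour.setdefault(hour, []).append(value)
    return {h: (statistics.fmean(v), statistics.pstdev(v)) for h, v in by_hour.items()}


def contextual_flag(hour, cpu_percent, baseline, threshold=3.0):
    """Flag a value that is unusual for its own hour of day."""
    mean, std = baseline[hour]
    std = max(std, 1.0)  # assumed floor so near-constant hours are not over-sensitive
    return abs(cpu_percent - mean) / std > threshold


# Hypothetical history: quiet at 03:00 (about 10%), busy batch window at 14:00 (about 92%).
history = [(3, v) for v in (9, 10, 11, 10, 10)] + [(14, v) for v in (91, 93, 92, 94, 90)]
baseline = build_hourly_baseline(history)

# 60% CPU at 03:00 is far from normal, yet the static rule stays silent.
print(static_threshold_flag(60), contextual_flag(3, 60, baseline))
# 93% CPU at 14:00 is routine, yet the static rule raises an alert.
print(static_threshold_flag(93), contextual_flag(14, 93, baseline))
```

The static rule produces one false negative and one false positive on these two readings, while the per-hour baseline classifies both according to what is normal at that time of day.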

The AI Data Platform Approach to Anomaly Detection

An AI Data Platform, specifically engineered and optimized to support advanced analytics and sophisticated machine learning workloads, fundamentally elevates Anomaly Detection capabilities to an unprecedented level. These platforms transform raw data into a dynamic source of predictive and protective intelligence:

- Dynamic and Adaptive Baseline Learning: Moving beyond rigid static thresholds, AI models continuously and autonomously learn what constitutes ‘normal’ behavior from vast and diverse datasets. They possess the inherent capability to automatically adjust to seasonality, evolving trends, cyclical patterns, and gradual shifts in data distributions, creating a highly resilient and accurate baseline.

- Sophisticated Contextual and Collective Anomaly Detection: AI excels where traditional methods fail—in understanding complex relationships and intricate dependencies within data. It can adeptly identify all three primary types of anomalies:

- Point Anomalies: Individual data points that are distinctly abnormal relative to the rest of the data (e.g., a sudden, sharp spike in network latency far outside the range of all other observations).

- Contextual Anomalies: Data points that might be normal in one context but abnormal in another (e.g., a login from an unusual geographical location may be normal while the user is on business travel, but highly anomalous if the same account is simultaneously active from its usual office location).

- Collective Anomalies: A collection of data points that, when viewed individually, might not appear anomalous, but when considered together, form a pattern that is highly indicative of an anomaly (e.g., a subtle, gradual decline in hard drive performance metrics, followed by a sudden increase in temperature and disk I/O, collectively signaling an impending hardware failure).

- Scalable Feature Engineering and Management: By leveraging purpose-built components like a centralized feature store, AI platforms can efficiently generate, manage, and deliver hundreds or even thousands of highly engineered features in real-time. This rich feature set empowers models to capture significantly more nuanced and subtle patterns that are critical for accurate anomaly detection.

- Continuous Adaptation and Self-Correction: Through robust MLOps practices, anomaly detection models are continuously monitored for performance degradation, automatically retrained with fresh data, and seamlessly redeployed. This ensures the system perpetually adapts to concept drift, maintains consistently high accuracy over extended periods, and remains effective against evolving threat landscapes without constant manual intervention.

- Significantly Reduced False Positives: Advanced ensemble algorithms, sophisticated statistical filtering, and integrated human-in-the-loop feedback mechanisms work in concert to substantially reduce the rate of costly false positives compared to simplistic rule-based systems, thereby increasing trust in the system and optimizing operational efficiency.

- Unsupervised Discovery of Novel Anomalies: Many critical anomalies have never been observed before, so no labeled examples of them exist. AI platforms leverage unsupervised learning techniques to surface such novel anomalies without prior labeling, a capability that rule-based systems, which only encode known patterns, lack.
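Collective anomalies, as described in the list above, are the hardest of the three types to catch with per-point checks. One classical way to detect a sustained small shift is a cumulative sum (CUSUM) statistic, sketched below as a simple stand-in for the learned sequence models discussed earlier. The temperature readings, the per-point limit of 45.0, and the `target`, `slack`, and `limit` values are hypothetical.

```python
def cusum_alarm_index(values, target, slack, limit):
    """Return the index where the upper CUSUM statistic first exceeds `limit`, or None.

    The statistic accumulates how far readings sit above `target + slack`
    and resets to zero whenever the accumulated excess would go negative.
    """
    s = 0.0
    for i, x in enumerate(values):
        s = max(0.0, s + (x - target - slack))
        if s > limit:
            return i
    return None


# Hypothetical drive temperatures: 20 normal readings around 40.0, then a
# sustained rise to 42.0 that never crosses a per-point limit of 45.0.
normal = [39.5, 40.5] * 10
values = normal + [42.0] * 15

point_alerts = [v for v in values if v > 45.0]
alarm_at = cusum_alarm_index(values, target=40.0, slack=0.5, limit=5.0)
print(point_alerts, alarm_at)
```

No single reading exceeds the per-point limit, so a point check stays silent, while the cumulative statistic raises an alarm a few readings into the sustained shift.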

Ultimately, while traditional data lakes and data warehouses provide the essential raw data fuel, AI Data Platforms furnish the highly sophisticated engine and intelligent navigation system necessary to process that fuel for predictive, protective, and truly insightful outcomes. They fundamentally transform Anomaly Detection from a predominantly reactive, brittle, and manually intensive chore into a proactive, adaptive, and largely automated strategic organizational advantage.

World2Data Verdict: The Imperative of Adaptive Anomaly Detection

The relentless proliferation of data across all facets of modern enterprise, coupled with the ever-increasing sophistication of digital threats and the inherent complexities of contemporary operational systems, unequivocally establishes AI-driven Anomaly Detection as an indispensable pillar of any truly robust and future-proof data strategy. It transcends the definition of merely an advanced analytical tool; it is a critical, proactive defense mechanism and a powerful accelerator for comprehensive operational intelligence. Organizations that cling to outdated, static thresholds and brittle manual rule sets risk being perpetually one critical step behind, leaving themselves dangerously exposed to escalating financial fraud, disruptive system outages, and devastating security breaches that intelligent, adaptive systems are uniquely capable of identifying and mitigating in real-time.

World2Data.com unequivocally asserts that the sustained success and competitive resilience of enterprises in the coming decade will be intrinsically linked to their capacity to not just adopt, but to expertly implement and operationalize adaptive, explainable, and inherently scalable Anomaly Detection solutions. This strategic imperative necessitates not only the integration of advanced AI models but also the establishment of a comprehensive, end-to-end MLOps framework to ensure continuous model learning, rapid, reliable deployment, and vigilant, proactive performance monitoring. A laser focus must be maintained on cultivating exceptionally high data quality, executing robust and dynamic feature engineering, and seamlessly integrating robust human-in-the-loop feedback mechanisms to continually refine models and effectively manage false positives. The strategic emphasis must decisively shift from merely reacting to known threats to proactively identifying the “unknown unknowns,” leveraging the full spectrum of AI capabilities—from advanced deep learning architectures for nuanced time series analysis to cutting-edge explainable AI (XAI) for transparent, auditable insights. Embracing these sophisticated, AI-powered capabilities is no longer an optional endeavor but a paramount strategic imperative to rigorously safeguard critical assets, comprehensively optimize complex operations, and decisively maintain a formidable competitive edge in our dynamically evolving and increasingly interconnected digital landscape.