Batch vs Streaming: Optimizing Your Data Processing Paradigm (A World2Data Deep Dive)

In data processing, the choice between batch and streaming architectures is pivotal for any organization striving to be data-driven. This Batch vs Streaming Comparison helps businesses choose a data handling strategy, a decision that directly affects operational efficiency, decision-making speed, and competitive advantage. Understanding which paradigm fits your specific business needs and technical capabilities is essential in today’s fast-paced digital landscape.

Introduction: Decoding Data Processing Paradigms

The relentless growth of data, coupled with increasing demands for real-time insights, has brought the distinction between batch and streaming data processing to the forefront of modern data architecture discussions. As a leading platform for data insights, World2Data.com aims to provide a comprehensive Batch vs Streaming Comparison to empower data professionals and business leaders alike. This article will delve deep into the mechanics, use cases, advantages, and challenges of both approaches, helping you determine which paradigm, or perhaps a hybrid model, is the right fit for your organization.

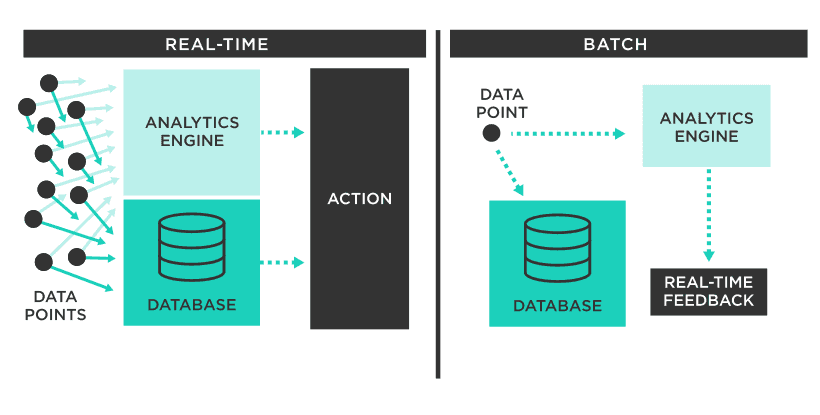

At its core, data processing falls into two distinct paradigms. Batch processing handles large data volumes on a schedule, typically with frameworks such as Hadoop MapReduce or Apache Spark in batch mode. Streaming processing, by contrast, is real-time, event-driven processing of continuous data flows, using technologies such as Apache Flink or Kafka Streams. These foundational differences shape everything from latency to data governance. Batch processing focuses on data quality checks and schema validation over large, static datasets, while streaming emphasizes in-flight schema enforcement (e.g., via a Schema Registry) and real-time data quality monitoring. Their integration with AI/ML also diverges: batch is used to train models on vast historical datasets, while streaming supports real-time inference and scoring, and even online model training.
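To make the contrast concrete, here is a minimal Python sketch (plain standard library, not Spark or Flink) that computes the same per-customer totals both ways. The event shape `(customer_id, amount)` and the function names are hypothetical. The batch function waits for the complete dataset and computes once; the streaming function keeps running state and emits an updated result after every event.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, float]  # hypothetical event shape: (customer_id, amount)


def batch_totals(events: Iterable[Event]) -> Dict[str, float]:
    """Batch style: wait for the complete, finite dataset, then compute once."""
    totals: Dict[str, float] = defaultdict(float)
    for customer, amount in events:
        totals[customer] += amount
    return dict(totals)


def streaming_totals(events: Iterable[Event]) -> Iterator[Tuple[str, float]]:
    """Streaming style: keep running state and emit an updated result per event."""
    state: Dict[str, float] = defaultdict(float)
    for customer, amount in events:
        state[customer] += amount
        yield customer, state[customer]  # an up-to-date answer is available immediately


events = [("alice", 10.0), ("bob", 5.0), ("alice", 2.5)]
print(batch_totals(events))            # {'alice': 12.5, 'bob': 5.0}
print(list(streaming_totals(events)))  # [('alice', 10.0), ('bob', 5.0), ('alice', 12.5)]
```

Both approaches agree on the final totals. The difference is that the streaming version has a usable answer after every event, at the cost of keeping state alive for as long as the stream runs.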

Core Breakdown: Architecting for Data Velocity and Volume

To truly understand the implications of a Batch vs Streaming Comparison, we must dissect the technical and architectural nuances of each approach.

Understanding Batch Processing: Deep Dive into its Mechanics and Use Cases

Batch processing is a traditional method in which data is collected and processed in large blocks at predetermined intervals. It suits scenarios where immediate insight is not critical and a comprehensive analysis of historical data is what matters. The defining characteristic of batch systems is their optimization for high throughput: they process vast datasets efficiently without requiring continuous, always-on resource consumption.

Architecturally, batch processing often involves Extract, Transform, Load (ETL) pipelines, where data is pulled from various sources, cleansed and reshaped, and then loaded into a data warehouse or data lake. Technologies like Apache Hadoop (with MapReduce), Apache Spark in batch mode, and traditional database systems form the backbone of many batch processing environments. These systems excel at parallel processing across distributed clusters, making them ideal for tasks that can tolerate latency and benefit from processing large chunks of data at once. Data governance in batch environments typically involves rigorous data quality checks and schema validation performed on these large, static datasets before or during the loading process, ensuring high fidelity for historical analysis.
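The ETL flow above can be sketched in a few lines of Python, using the standard `csv` and `sqlite3` modules as stand-ins for a real source system and warehouse. The sample data, the `EXPECTED_COLUMNS` schema, and the `extract`/`transform`/`load` function names are illustrative assumptions. Note where the governance checks sit: the schema is validated against the whole static extract before anything is loaded, and rows that fail a quality check are set aside rather than loaded.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract; in practice this would be a file or a source-system dump.
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,not_a_number
3,carol,5.00
"""

EXPECTED_COLUMNS = ["order_id", "customer", "amount"]  # assumed target schema


def extract(text: str) -> list[dict]:
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames != EXPECTED_COLUMNS:
        # Schema validation on the whole static dataset before any load happens.
        raise ValueError(f"unexpected columns: {reader.fieldnames}")
    return list(reader)


def transform(rows: list[dict]) -> tuple[list[tuple], list[dict]]:
    clean, rejected = [], []
    for row in rows:
        try:
            clean.append((int(row["order_id"]), row["customer"].strip(), float(row["amount"])))
        except ValueError:
            rejected.append(row)  # quality check failed: kept aside for review, not loaded
    return clean, rejected


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    with conn:  # one transaction: commits on success, rolls back if any insert fails
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
clean, rejected = transform(extract(RAW_CSV))
load(conn, clean)
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone())  # (2,)
print(len(rejected))  # 1
```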

Typical use cases for batch processing include:

- End-of-month financial reporting: Consolidating vast transaction data for accurate financial statements.

- Complex machine learning model training: Training sophisticated AI/ML models on extensive historical datasets, where the training process can take hours or days.

- Daily data warehousing updates: Refreshing data marts and warehouses with daily transaction summaries.

- Business Intelligence (BI) dashboards: Generating reports that reflect aggregated historical performance metrics.

- Compliance and auditing: Processing archival data to meet regulatory requirements.

The primary AI/ML integration point for batch processing is centered around model training. By leveraging large, curated historical datasets, batch systems enable the development of robust and accurate predictive models, which can then be deployed for either batch or real-time inference depending on the application.
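As a minimal illustration of batch training, the sketch below fits a one-feature linear model with ordinary least squares, which needs a full pass over the historical dataset before it can produce any parameters. The data and the `fit_batch` name are hypothetical; real training jobs would typically use a library such as Spark MLlib or scikit-learn on far larger data.

```python
from typing import Sequence, Tuple


def fit_batch(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept, using the full history at once."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Hypothetical historical data generated from y = 2x + 1.
history_x = [1.0, 2.0, 3.0, 4.0, 5.0]
history_y = [3.0, 5.0, 7.0, 9.0, 11.0]
slope, intercept = fit_batch(history_x, history_y)
print(slope, intercept)  # 2.0 1.0
```

The fitted `slope` and `intercept` can then be shipped to a batch scoring job or a real-time service, which is the hand-off described above.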

Exploring Streaming Processing: Real-time Insights and Architectural Imperatives

Conversely, streaming processing is designed to handle data continuously as it arrives, providing near real-time insights with minimal latency. Data is processed record by record, or in very small micro-batches, which keeps the delay between data generation and insight extraction to a minimum. This paradigm is crucial for applications that demand immediate reactions and continuous monitoring.
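Micro-batching can be illustrated with a small generator that groups an incoming stream into fixed-size chunks as records arrive. This is a sketch under simplifying assumptions: the `micro_batches` helper and the sample readings are hypothetical, and the chunks here are count-based, whereas engines such as Spark Structured Streaming form micro-batches from whatever data has arrived when each trigger fires.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def micro_batches(stream: Iterable[T], size: int) -> Iterator[List[T]]:
    """Group a (possibly unbounded) stream into small chunks as records arrive."""
    it = iter(stream)
    while True:
        chunk = list(islice(it, size))  # take up to `size` records without reading ahead
        if not chunk:
            return  # the stream is exhausted
        yield chunk


readings = iter([21.0, 21.4, 22.1, 35.0, 21.9])  # hypothetical sensor feed
for batch in micro_batches(readings, size=2):
    print(batch)
# [21.0, 21.4]
# [22.1, 35.0]
# [21.9]
```

With `size=1` the same generator degenerates into record-at-a-time processing, the other end of the latency spectrum.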

Streaming architectures are built on event-driven patterns in which data flows through a series of processing stages as individual events. Message queues and stream processing engines are the core components. Apache Kafka serves as a distributed streaming platform that handles high-volume, low-latency event streams, while Apache Flink and Kafka Streams provide the capabilities for processing those streams. Amazon Kinesis and Apache Pulsar are other prominent players in this space. These systems require robust, always-on infrastructure to keep data flowing and to provide consistency and delivery guarantees. Key data governance features for streaming include in-flight schema enforcement, often managed by a Schema Registry, and real-time data quality monitoring, which detects and flags anomalies or invalid data points as they arrive.
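In-flight schema enforcement can be sketched as a filter that validates each message as it passes through and routes failures to a dead-letter list instead of stopping the stream. This is a simplified stand-in, not a Schema Registry client: `CLICK_SCHEMA` is a hypothetical field-to-type mapping, and a production pipeline would typically validate against Avro, Protobuf, or JSON Schema definitions fetched from a registry. The size of the dead-letter list doubles as a basic real-time data quality signal.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

# Hypothetical schema; a real pipeline would fetch a registered schema instead.
CLICK_SCHEMA: Dict[str, type] = {"user_id": str, "page": str, "ts": int}


def conforms(event: Dict[str, Any], schema: Dict[str, type]) -> bool:
    """Exactly the expected fields, each with the expected Python type."""
    return set(event) == set(schema) and all(
        isinstance(event[field], expected) for field, expected in schema.items()
    )


def enforce_in_flight(raw_messages: Iterable[bytes], schema: Dict[str, type],
                      dead_letters: List[bytes]) -> Iterator[Dict[str, Any]]:
    """Validate each message as it passes through; never block the stream on bad data."""
    for raw in raw_messages:
        try:
            event = json.loads(raw)
        except json.JSONDecodeError:
            dead_letters.append(raw)  # not even valid JSON
            continue
        if isinstance(event, dict) and conforms(event, schema):
            yield event
        else:
            dead_letters.append(raw)  # flagged for inspection instead of silently dropped


messages = [
    b'{"user_id": "u1", "page": "/home", "ts": 1700000000}',
    b'{"user_id": "u2", "page": "/cart"}',  # missing "ts"
    b"not json",
]
dlq: List[bytes] = []
valid = list(enforce_in_flight(messages, CLICK_SCHEMA, dlq))
print(len(valid), len(dlq))  # 1 2
```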

This approach is indispensable for applications requiring instant reactions, such as:

- Fraud detection: Identifying suspicious transactions as they occur.

- Live user activity monitoring: Tracking website clicks and application usage, and serving personalized recommendations in real time.

- IoT analytics: Processing sensor data from connected devices for immediate operational adjustments or anomaly detection.

- Real-time anomaly detection: Flagging unusual patterns in network traffic or system performance instantly.

- Real-time inference/scoring: Applying trained AI/ML models to incoming data streams to make immediate predictions, such as personalizing content or recommending products as users interact with a platform.
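Fraud and anomaly detection on a stream often starts from something as simple as a rolling z-score: compare each new value with the mean and standard deviation of the values just before it. The sketch below assumes a window of five events and a threshold of three standard deviations, both hypothetical tuning choices; production fraud systems typically combine many features and trained models.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator, Tuple


def detect_anomalies(values: Iterable[float], window: int = 5,
                     threshold: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Flag values far from the mean of the preceding `window` values (rolling z-score)."""
    recent: deque = deque(maxlen=window)  # bounded state: only the last `window` values
    for i, value in enumerate(values):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value  # emitted as soon as the value arrives
        recent.append(value)


amounts = [20.0, 22.0, 19.0, 21.0, 20.0, 500.0, 21.0]  # hypothetical transaction amounts
print(list(detect_anomalies(amounts)))  # [(5, 500.0)]
```

Because the flagged value still enters the window, it inflates the statistics for the next few events; a real detector might exclude confirmed anomalies from its baseline.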

Streaming processing significantly enhances AI/ML capabilities by enabling real-time inference and online model training. Online training updates a model incrementally with each new observation instead of waiting for the next scheduled retraining run, so it can adapt to emerging data trends quickly, leading to more agile and responsive AI systems.
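Online training can be sketched with a linear model that applies one stochastic gradient descent step per incoming observation, so it never needs the full history in memory. The `OnlineLinearModel` class, its learning rate, and the simulated stream (generated from y = 2x + 1) are assumptions for illustration.

```python
class OnlineLinearModel:
    """y ≈ w * x + b, updated one observation at a time with stochastic gradient descent."""

    def __init__(self, learning_rate: float = 0.05) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate  # hypothetical fixed step size

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        # Gradient of the squared error 0.5 * (prediction - y)^2 with respect to w and b.
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error


model = OnlineLinearModel(learning_rate=0.05)
# Hypothetical event stream: observations arrive one at a time, never stored.
for _ in range(500):
    for x in (1.0, 2.0, 3.0, 4.0, 5.0):
        model.update(x, 2.0 * x + 1.0)
print(round(model.w, 2), round(model.b, 2))  # 2.0 1.0
```

A fixed learning rate keeps the model responsive if the underlying relationship drifts, at the cost of noisier estimates than a batch fit on the same data.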

Challenges and Considerations in Batch vs Streaming Architectures

The choice between batch and streaming is not without its complexities. A thorough Batch vs Streaming Comparison must account for the challenges inherent in each.

- Latency vs. Throughput: This is the most fundamental trade-off. Batch systems excel at high throughput (processing large amounts of data efficiently over time) but introduce latency. Streaming systems prioritize low latency (processing data quickly as it arrives) but can be more complex to manage for consistent high throughput under fluctuating loads.

- Complexity and Operational Overhead: Streaming systems are generally more complex to design, build, deploy, and maintain. Ensuring exactly-once processing, handling out-of-order data, managing state, and scaling dynamically require sophisticated engineering expertise. Batch systems, while still complex for very large datasets, often have more established patterns and tools for error handling and reprocessing.

- Resource Management and Cost: Batch processing typically involves bursty resource utilization, consuming significant compute resources during scheduled processing windows and then scaling down. Streaming, conversely, demands continuous, dedicated resources to maintain constant data flow and low latency, potentially leading to higher ongoing infrastructure costs.

- Data Integrity and Consistency: Ensuring data integrity and consistency (e.g., “exactly-once” processing) is a significant challenge in streaming systems due to the continuous, distributed nature of data flow. Batch systems, operating on finite datasets, offer more straightforward mechanisms for validating data and reprocessing errors.

- Scalability and Elasticity: Both paradigms require scalability, but their approaches differ. Batch processing scales by distributing large jobs across clusters, optimizing for the total processing time of a finite dataset. Streaming platforms require elastic scalability to manage fluctuating, continuous data ingestion rates without falling behind, demanding dynamic infrastructure that can adapt to varying data volumes in real time.

- Data Governance and Schema Evolution: While both require robust data governance, the implementation differs. Batch systems can enforce strict schema validation before processing, allowing for easier error isolation. Streaming systems need mechanisms for in-flight schema enforcement and graceful handling of schema evolution without interrupting the continuous flow of data.
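Several of the streaming challenges above (out-of-order data, state management, and duplicate delivery) show up even in a toy windowed aggregation. The sketch below sums values per tumbling event-time window and uses a watermark, the highest event time seen minus a fixed allowance, to decide when a window is complete; events arriving after their window has closed are dropped. It also discards duplicate event IDs, one common way to tolerate at-least-once delivery. The event shape and parameters are hypothetical, and this is not how engines such as Flink implement exactly-once guarantees, which rely on checkpointed state and transactional or idempotent sinks.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Set, Tuple

Event = Tuple[str, int, int]  # hypothetical shape: (event_id, event_time_seconds, value)


def windowed_sums(events: Iterable[Event], window_size: int,
                  max_out_of_orderness: int) -> Iterator[Tuple[int, int]]:
    """Sum values per tumbling event-time window, tolerating bounded out-of-order arrival.

    Yields (window_start, total) once the watermark passes the window's end.
    """
    open_windows: Dict[int, int] = defaultdict(int)
    seen_ids: Set[str] = set()
    max_event_time = float("-inf")
    for event_id, event_time, value in events:
        if event_id in seen_ids:
            continue  # duplicate delivery (e.g. a producer retry): ignore it
        seen_ids.add(event_id)
        max_event_time = max(max_event_time, event_time)
        watermark = max_event_time - max_out_of_orderness
        start = event_time - event_time % window_size
        if start + window_size <= watermark:
            continue  # too late: this window has already closed, so the event is dropped
        open_windows[start] += value
        for w in sorted(open_windows):  # sorted() copies the keys, so popping is safe
            if w + window_size <= watermark:
                yield w, open_windows.pop(w)
    for w in sorted(open_windows):  # end of (finite) input: flush what is left
        yield w, open_windows[w]


events = [
    ("a", 1, 1),
    ("b", 12, 1),
    ("c", 4, 1),   # out of order, but within the 5-second allowance
    ("b", 12, 1),  # duplicate delivery
    ("d", 16, 1),  # watermark reaches 11, closing window [0, 10)
    ("e", 3, 1),   # arrives after window [0, 10) closed: dropped as late
    ("f", 25, 1),  # watermark reaches 20, closing window [10, 20)
]
print(list(windowed_sums(events, window_size=10, max_out_of_orderness=5)))
# [(0, 2), (10, 2), (20, 1)]
```

Note that `seen_ids` grows without bound here; real engines keep such state in managed, checkpointed storage and usually expire it after a retention period.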

The Business Value and ROI of Optimal Data Processing Choices

The decision between batch and streaming directly impacts an organization’s ability to derive value from its data, with significant implications for Return on Investment (ROI). Choosing the right approach, or combination of approaches, allows businesses to:

- Accelerate Time to Insight: For critical real-time use cases, streaming processing can deliver immediate insights, enabling quicker decision-making and operational responses, which translates to competitive advantage and improved customer experience (e.g., instant fraud alerts prevent financial losses).

- Optimize Resource Utilization and Cost: Batch processing, when correctly applied to non-urgent tasks, can be significantly more cost-effective due to its ability to scale down resources between processing cycles. Misusing streaming for batch tasks can lead to unnecessary continuous infrastructure costs.

- Enhance AI/ML Capabilities: An optimal data processing strategy provides the right data, at the right time, for AI/ML models. Batch processing fuels robust historical model training, leading to more accurate predictive models. Streaming enables real-time inference and online learning, allowing AI to react dynamically to current events and personalize experiences on the fly.

- Improve Data Quality and Trust: Tailored data governance features, whether rigorous batch validation or real-time streaming monitoring, ensure higher data quality, which is fundamental for reliable analysis and AI model performance.

- Drive Innovation: By having a flexible and powerful data processing backbone, organizations are better positioned to experiment with new data products, analytics, and AI applications, fostering innovation and discovering new revenue streams.

Comparative Insight: Beyond the Binary Choice – The Rise of Hybrid Architectures

While a direct Batch vs Streaming Comparison highlights their distinct characteristics, modern data landscapes rarely rely on a single paradigm. The realistic alternatives are not just one or the other: they increasingly include hybrid architectures, such as Lambda and Kappa, which combine or unify historical and real-time processing to address diverse business needs.

Historically, the Lambda Architecture emerged as a popular hybrid model. It comprises two processing layers, a batch layer and a speed (streaming) layer, whose outputs are exposed through a serving layer. The batch layer performs comprehensive, accurate processing of all historical data, ensuring completeness and fault tolerance; its results are typically precomputed views. The speed layer processes new data in real time to provide immediate, albeit potentially approximate, results. The two views are then merged at query time to provide a complete picture. Lambda’s advantage is that it combines the accuracy and robustness of the batch layer with the low latency of the speed layer. Its main drawbacks are increased complexity, since it requires maintaining two separate codebases and processing pipelines, the potential for inconsistencies between the layers, and higher operational overhead.
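The Lambda pattern can be reduced to three small pieces: a batch view recomputed from the full master dataset, a speed layer that counts only events since the last batch run, and a serving function that merges the two at query time. The page-view example, the class and function names, and the `Counter`-based views are hypothetical simplifications of what would normally be, for example, a Spark job, a Flink job, and a serving database.

```python
from collections import Counter
from typing import Iterable


def build_batch_view(master_dataset: Iterable[str]) -> Counter:
    """Batch layer: periodically recompute page-view counts from all historical data."""
    return Counter(master_dataset)


class SpeedLayer:
    """Speed layer: incrementally counts only events that arrived after the last batch run."""

    def __init__(self) -> None:
        self.realtime_view: Counter = Counter()

    def on_event(self, page: str) -> None:
        self.realtime_view[page] += 1

    def reset(self) -> None:
        # Called once a fresh batch view has absorbed these events.
        self.realtime_view.clear()


def query(page: str, batch_view: Counter, speed: SpeedLayer) -> int:
    """Serving layer: merge the precomputed batch view with the real-time delta."""
    return batch_view[page] + speed.realtime_view[page]  # missing keys count as 0


history = ["/home", "/home", "/cart"]  # hypothetical master dataset
batch_view = build_batch_view(history)
speed = SpeedLayer()
for page in ["/home", "/checkout"]:  # events arriving after the last batch run
    speed.on_event(page)
print(query("/home", batch_view, speed))      # 3
print(query("/checkout", batch_view, speed))  # 1
```

Even in this toy version the same counting logic exists twice, once in `build_batch_view` and once in `SpeedLayer.on_event`, which is the duplicated-codebase cost of Lambda.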

In response to the complexity of Lambda, the Kappa Architecture was proposed as a simplification. In Kappa, the entire data processing pipeline is built around a single streaming system. All data, whether historical or real-time, is treated as a stream of events. Historical data processing involves replaying events from the beginning of the stream, while real-time processing handles incoming events. This approach simplifies the architecture by eliminating the need for separate batch and speed layers and maintaining a single codebase. Technologies like Apache Kafka, combined with stream processing engines such as Apache Flink, are well-suited for Kappa architectures. While simpler to manage, the Kappa architecture places higher demands on the streaming system’s ability to handle large historical data replays and ensure fault tolerance and data consistency across potentially very long data streams.
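Kappa’s core idea, a single processing function applied to a replayable log, can be sketched with a Python list standing in for a Kafka topic and list indices standing in for consumer offsets. The `process` and `consume` names and the account-balance example are hypothetical. Live processing resumes from the last offset, while reprocessing simply replays the log from offset 0 through the same code.

```python
from typing import Dict, List, Tuple

Log = List[Tuple[str, float]]  # append-only event log (stand-in for a Kafka topic)


def process(state: Dict[str, float], event: Tuple[str, float]) -> None:
    """The single stream-processing function used for both live and historical data."""
    account, amount = event
    state[account] = state.get(account, 0.0) + amount


def consume(log: Log, state: Dict[str, float], from_offset: int) -> int:
    """Apply events from `from_offset` onward and return the next offset to read."""
    for event in log[from_offset:]:
        process(state, event)
    return len(log)


log: Log = [("acct-1", 100.0), ("acct-2", 50.0), ("acct-1", -30.0)]
live_state: Dict[str, float] = {}
offset = consume(log, live_state, from_offset=0)

log.append(("acct-2", 25.0))               # a new event arrives
offset = consume(log, live_state, offset)  # live processing continues from the last offset

# Reprocessing (e.g. after changing `process`): replay the full log into a fresh state.
rebuilt_state: Dict[str, float] = {}
consume(log, rebuilt_state, from_offset=0)
print(live_state == rebuilt_state)  # True
```

In a real deployment the replay would typically run as a separate job writing to a new output, with consumers switching over once it has caught up; this only works if the log retains enough history to replay.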

The continuous evolution in stream processing technologies, offering more sophisticated state management, fault tolerance, and expressive querying capabilities, is making Kappa an increasingly attractive option for organizations seeking a unified data processing paradigm. However, the choice between Lambda and Kappa, or a purely batch/streaming system, ultimately depends on factors such as required latency, data volume, budget, and the expertise available within the organization.

For many enterprises, the most effective solution is a thoughtful blend, leveraging batch for robust historical analysis and long-term storage, while deploying streaming for immediate operational insights and interactive applications. This tailored approach allows organizations to maximize the benefits of both paradigms, achieving both comprehensive accuracy and real-time responsiveness without unnecessary overhead.

World2Data Verdict: Navigating the Future of Data Processing

The ultimate decision in the Batch vs Streaming Comparison is rarely a binary one in today’s intricate data ecosystems. The World2Data verdict emphasizes that the optimal approach is one that is strategically aligned with specific business objectives, data characteristics, and organizational capabilities. Rather than viewing batch and streaming as mutually exclusive, forward-thinking organizations are embracing flexible, adaptive architectures. The future of data processing will increasingly lean towards integrated data platforms that intelligently manage data across the entire spectrum of latency requirements, often abstracting away the underlying batch or streaming complexities from the end-user.

We recommend starting with a clear understanding of your use cases. Identify where real-time responsiveness is absolutely critical for competitive advantage or operational necessity, and where periodic, comprehensive analysis suffices. Evaluate your data volume, velocity, and variety, and consider the skills of your data engineering team. Don’t shy away from exploring hybrid models like Lambda or Kappa architectures, or even bespoke combinations that leverage the strengths of established batch processing tools alongside modern streaming platforms. Furthermore, prioritize robust data governance and security measures from the outset, ensuring data quality and compliance regardless of the processing paradigm chosen. By taking a thoughtful, use-case-driven approach to this crucial Batch vs Streaming Comparison, organizations can build resilient, scalable, and highly performant data processing systems that truly fuel innovation and business growth.