Mastering Big Data Query Optimization: Techniques for Peak Performance

Platform Category: Distributed Query Engine

Core Technology/Architecture: Data Partitioning, Columnar Storage Formats (e.g., Parquet, ORC), Massively Parallel Processing (MPP)

Key Data Governance Feature: Statistics and Metadata Management for Cost-Based Optimizer

Primary AI/ML Integration: AI-powered Query Plan Optimization and Caching

Main Competitors/Alternatives: Batch Processing (MapReduce), Full-Text Search Engines (e.g., Elasticsearch), In-Memory Databases

In the vast landscape of modern data, the ability to extract timely and accurate insights hinges on efficient query processing. Techniques for optimizing big data query performance are therefore paramount: they translate directly into faster decision-making, lower operational overhead, and a stronger competitive edge. This deep dive explores the strategies essential for achieving peak performance when querying massive datasets, so that valuable information stays within reach.

Introduction: The Imperative of Big Data Query Optimization

The digital age is characterized by an explosion of data, with enterprises constantly grappling with petabytes, and even exabytes, of information. From IoT sensors and web traffic to transactional records and social media feeds, the sheer volume, velocity, and variety of this “Big Data” present unprecedented challenges for traditional analytical systems. Without robust strategies, querying such immense datasets can become a time-consuming, resource-intensive, and ultimately frustrating endeavor. This is where Big Data Query Optimization emerges as a critical discipline, transforming sluggish data access into rapid, actionable insights.

The objective of this article is to dissect the core techniques and architectural considerations that underpin effective Big Data Query Optimization. We will explore how leveraging advanced technologies and meticulous planning can drastically improve query speeds, enhance data accessibility, and ensure that organizations can truly harness the power of their data assets. Our focus will span from foundational data structures to sophisticated query execution engines, demonstrating a holistic approach to conquering the complexities of large-scale data queries.

Core Breakdown: Advanced Strategies for Peak Query Performance

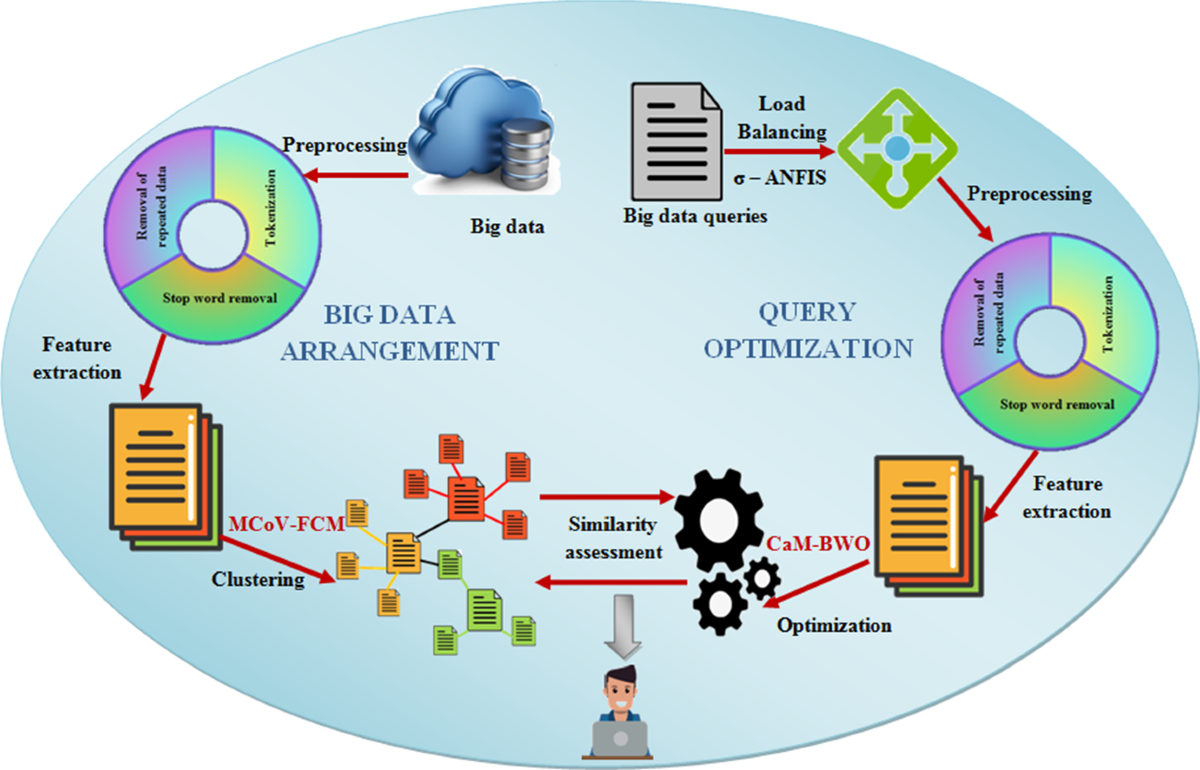

Achieving superior query performance in Big Data environments depends not on a single technique but on a set of interconnected strategies. They range from how data is stored and organized to how queries are parsed, planned, and executed within a Distributed Query Engine.

Understanding Your Data for Performance: Schema and Storage

- Data Profiling and Schema Design: The journey to optimal query performance begins long before a query is written. Thorough data profiling reveals the characteristics of your data: its types, value distributions, cardinality, and null rates. A well-designed schema is foundational: it matches the dominant access patterns, keeps redundancy and integrity under control, and makes efficient access possible. Poor schema design, such as heavily normalized tables that force many large distributed joins on an analytical workload, or a denormalized layout applied to a workload it does not fit, can introduce significant performance bottlenecks.

- Columnar Storage Benefits: Modern Big Data Query Optimization relies heavily on columnar storage formats such as Parquet and ORC. Row-oriented storage must read entire rows even when a query needs only a few columns; columnar formats instead store the values of each column contiguously. Analytical queries typically touch a subset of columns across many rows, so reading only the relevant columns minimizes I/O, reduces memory usage, and makes execution significantly faster, especially within a Massively Parallel Processing (MPP) framework. Columnar data also tends to compress better, because values within a column share a type and often repeat, which suits encodings such as dictionary and run-length encoding.
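To make the I/O argument concrete, here is a minimal Python sketch, assuming a toy in-memory table with hypothetical column names (`order_id`, `region`, `amount`, `note`). It counts how many values each layout must read to answer `SUM(amount) WHERE region = 'EU'`, and includes a simple run-length encoder to show why repeated column values compress well. Real formats such as Parquet and ORC are far more elaborate (row groups or stripes, page-level encodings, embedded statistics).

```python
from typing import Dict, List, Tuple

# Toy table (hypothetical columns): three rows, four columns each.
ROWS: List[Dict[str, object]] = [
    {"order_id": 1, "region": "EU", "amount": 120.0, "note": "gift"},
    {"order_id": 2, "region": "US", "amount": 80.0, "note": ""},
    {"order_id": 3, "region": "EU", "amount": 45.5, "note": "rush"},
]


def to_columnar(rows: List[Dict[str, object]]) -> Dict[str, List[object]]:
    """Pivot row records into one contiguous list per column."""
    columns: Dict[str, List[object]] = {name: [] for name in rows[0]}
    for row in rows:
        for name, value in row.items():
            columns[name].append(value)
    return columns


def values_read_row_layout(rows: List[Dict[str, object]], needed: List[str]) -> int:
    # `needed` is deliberately ignored: a row store reads every field of every row.
    return sum(len(row) for row in rows)


def values_read_column_layout(columns: Dict[str, List[object]], needed: List[str]) -> int:
    # A column store reads only the columns the query references.
    return sum(len(columns[name]) for name in needed)


def total_amount_eu(columns: Dict[str, List[object]]) -> float:
    """SELECT SUM(amount) WHERE region = 'EU', touching only two columns."""
    return sum(
        amount
        for region, amount in zip(columns["region"], columns["amount"])
        if region == "EU"
    )


def run_length_encode(values: List[object]) -> List[Tuple[object, int]]:
    """Collapse consecutive repeats into (value, count) pairs."""
    encoded: List[Tuple[object, int]] = []
    for value in values:
        if encoded and encoded[-1][0] == value:
            encoded[-1] = (value, encoded[-1][1] + 1)
        else:
            encoded.append((value, 1))
    return encoded
```

With this data, the row layout reads all 12 values while the column layout reads only the 6 values in `region` and `amount`; the gap grows as tables get wider. Likewise, a sorted `region` column such as `["EU", "EU", "EU", "US", "US"]` shrinks to two `(value, count)` pairs.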

Indexing and Partitioning Strategies

- Effective Indexing Implementation: Traditional database indexing does not carry over unchanged to Big Data. In distributed engines, “indexes” are often lightweight structures such as per-block min/max statistics or Bloom filters rather than classic B-trees. Applied strategically to frequently filtered columns, join keys, or common predicates, they let the Distributed Query Engine skip data blocks that cannot contain matching records instead of scanning entire datasets. However, over-indexing adds overhead during data ingestion and consumes storage, so the choice of what to index should follow observed query patterns.

- Smart Data Partitioning: Data Partitioning is a cornerstone of Big Data performance. When a large table is divided into smaller parts based on a column such as date or geographic region, queries can “prune” partitions, processing only the subsets that can match their filters. This sharply limits the data a query must scan, reducing I/O, network traffic, and CPU cycles. The partition key should match common filter predicates and have moderate cardinality: a very high-cardinality key such as customer ID would produce huge numbers of tiny partitions and is usually better handled with bucketing (hash partitioning into a fixed number of buckets).
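Partition pruning can be sketched in a few lines of Python. The sketch is illustrative only: it assumes a table partitioned by a hypothetical `event_date` column and models each partition as an in-memory object rather than a directory of files. The key point is that the date-range predicate is checked against partition keys (metadata) before any rows are touched.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Tuple


@dataclass
class Partition:
    """One partition of a table; in a real lake this might be one directory per date."""
    key: date
    rows: List[Tuple[str, float]] = field(default_factory=list)  # (region, amount)


def build_partitions(records: List[Tuple[date, str, float]]) -> Dict[date, Partition]:
    """Group (event_date, region, amount) records into one partition per date."""
    partitions: Dict[date, Partition] = {}
    for event_date, region, amount in records:
        partitions.setdefault(event_date, Partition(event_date)).rows.append((region, amount))
    return partitions


def scan_with_pruning(partitions: Dict[date, Partition], start: date, end: date):
    """Return (matching rows, number of partitions actually read).

    The range predicate is evaluated on the partition key alone, so
    partitions outside [start, end] are skipped without reading any rows.
    """
    rows: List[Tuple[str, float]] = []
    scanned = 0
    for key, partition in partitions.items():
        if not (start <= key <= end):
            continue  # pruned: never opened
        scanned += 1
        rows.extend(partition.rows)
    return rows, scanned


# Usage: three days of data, and a query that asks for a single day.
records = [
    (date(2024, 1, 1), "EU", 10.0),
    (date(2024, 1, 2), "US", 20.0),
    (date(2024, 1, 2), "EU", 5.0),
    (date(2024, 1, 3), "EU", 7.5),
]
parts = build_partitions(records)
rows, scanned = scan_with_pruning(parts, date(2024, 1, 2), date(2024, 1, 2))
print(rows, scanned)
```

Only one of the three partitions is read; on a real table with years of daily partitions, the same predicate would skip all but one directory.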

Query Rewriting and Optimization Tools

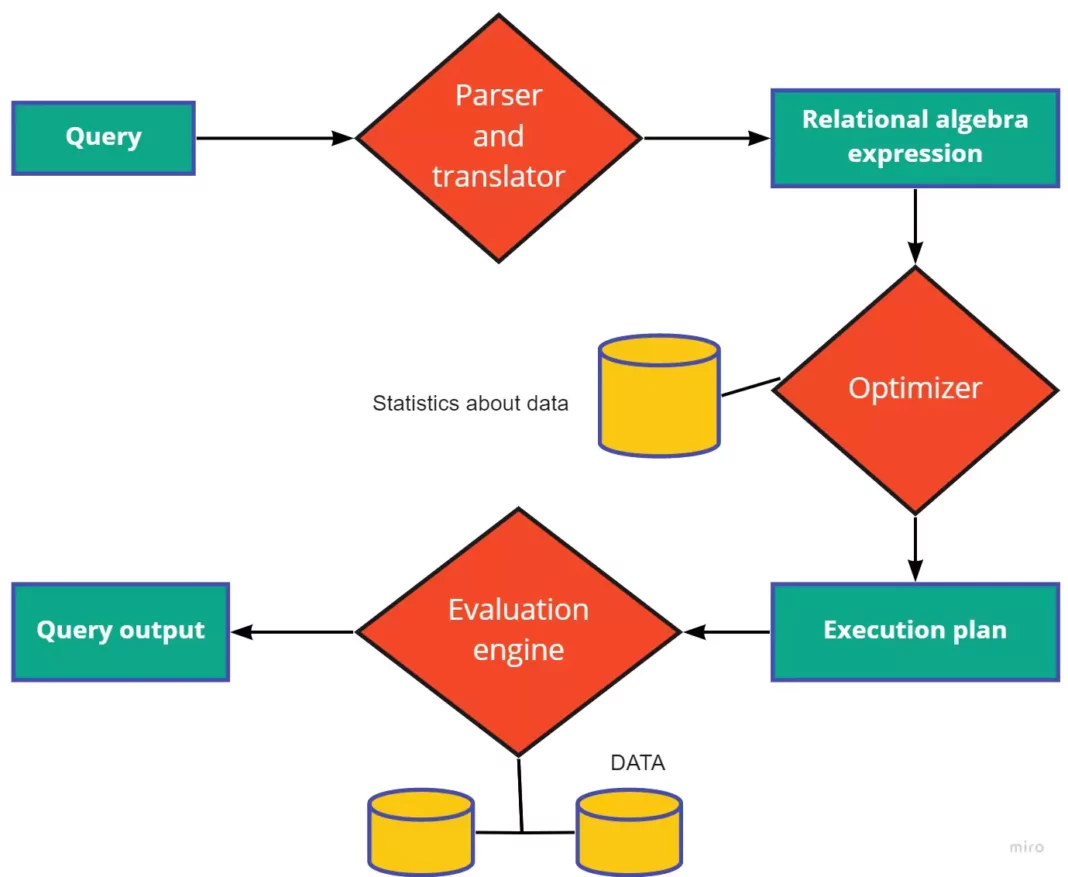

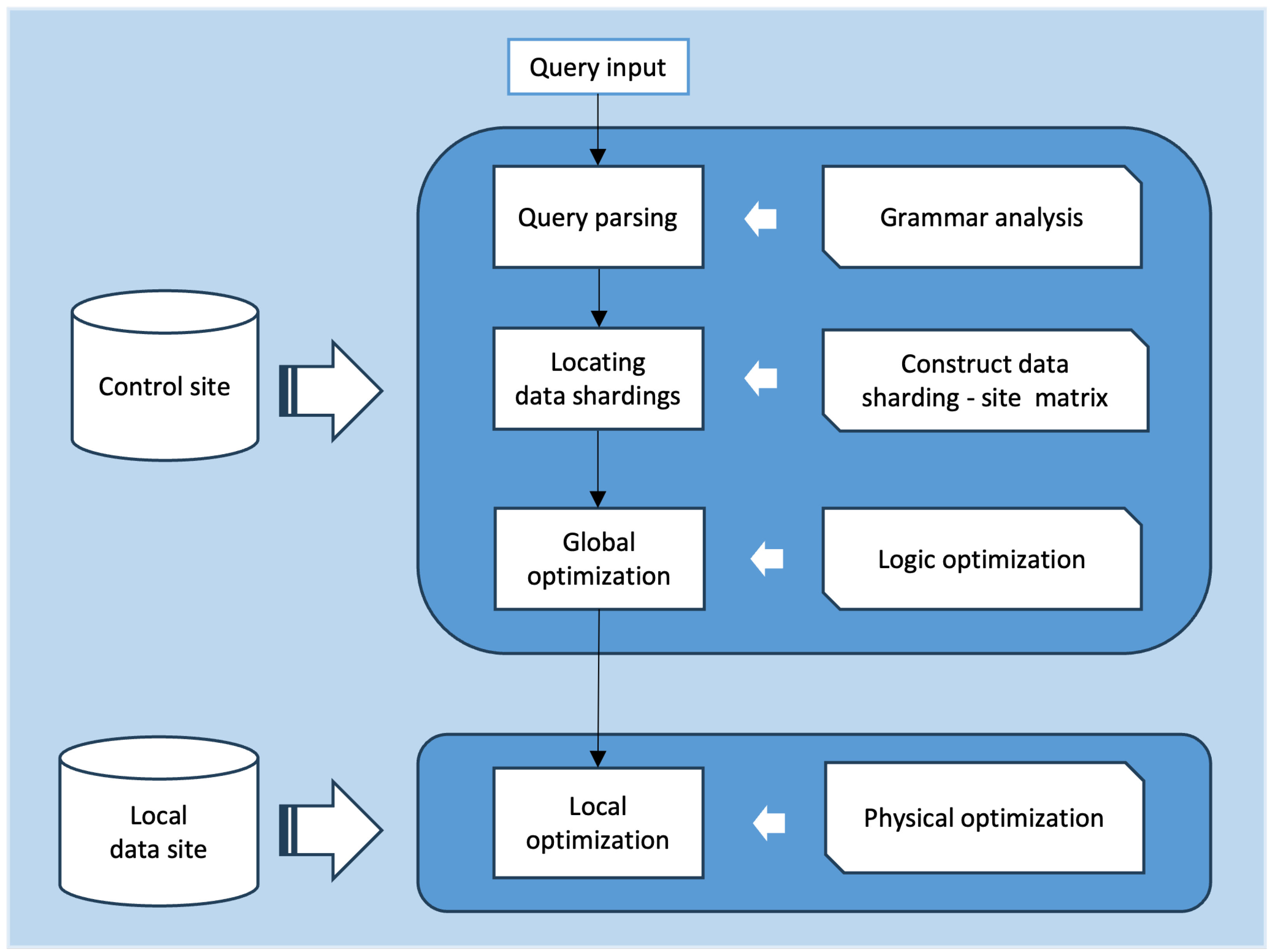

- Leveraging Query Optimizers: At the heart of most modern Distributed Query Engine platforms is a sophisticated query optimizer. It analyzes each incoming SQL query and rewrites it into a more efficient execution plan, weighing indexes, partitions, data distribution, and available resources to find the cheapest way to retrieve the data. Knowing how the optimizer makes these choices, and steering it through hints or configuration when it chooses poorly, can make a large difference. Cost-based optimizers in particular depend on accurate statistics and metadata, such as row counts, distinct-value counts, and min/max values; stale statistics are a common cause of poor plans.

- SQL Query Refinement Techniques: Beyond automatic optimization, the way developers write queries plays a significant role. Useful habits include avoiding `SELECT *`, choosing the appropriate join type (e.g., inner vs. outer), filtering as early as possible, and avoiding full table scans where a partition or predicate filter would do. Eliminating unnecessary subqueries, using common table expressions (CTEs) for clarity and potential reuse, and remembering that user-defined functions are often opaque to the optimizer (and can block predicate pushdown) are all essential skills for Big Data Query Optimization.
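The following Python sketch shows, in heavily simplified form, how a cost-based optimizer can turn statistics into a plan decision. It assumes hypothetical tables (`orders`, `customers`) with made-up row counts and distinct-value counts (NDV), estimates equality-filter selectivity as 1/NDV under the textbook uniformity and independence assumptions, and picks the smaller filtered input as the build side of a hash join. Because filters are applied before the join, it also shows why filtering early can change the plan.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TableStats:
    """Simplified per-table statistics of the kind a cost-based optimizer keeps."""
    name: str
    row_count: int
    ndv: Dict[str, int]  # column name -> number of distinct values


def equality_selectivity(stats: TableStats, column: str) -> float:
    """Fraction of rows matching `column = <constant>`, assuming uniform values."""
    return 1.0 / stats.ndv[column]


def estimate_rows(stats: TableStats, filtered_columns: List[str]) -> float:
    """Estimated rows after equality filters, assuming the filters are independent."""
    rows = float(stats.row_count)
    for column in filtered_columns:
        rows *= equality_selectivity(stats, column)
    return rows


def choose_build_side(left: TableStats, left_filters: List[str],
                      right: TableStats, right_filters: List[str]) -> str:
    """Pick the smaller estimated input as the hash-join build (in-memory) side.

    Filters are applied to each input before the join (predicate pushdown),
    so the comparison uses post-filter estimates.
    """
    left_rows = estimate_rows(left, left_filters)
    right_rows = estimate_rows(right, right_filters)
    return left.name if left_rows <= right_rows else right.name


# Hypothetical statistics, e.g. as collected by an ANALYZE-style command.
orders = TableStats("orders", 10_000_000, {"order_date": 3650, "customer_id": 1_000_000})
customers = TableStats("customers", 1_000_000, {"country": 200})
```

Unfiltered, `customers` is the smaller input and becomes the build side; with a filter on `order_date` pushed below the join, the estimated `orders` input shrinks to roughly 2,740 rows and the choice flips. Real optimizers use histograms, correlation handling, and far richer cost models.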

Resource Management and Scalability

- Cluster Configuration Tuning: The infrastructure underneath a Distributed Query Engine must be tuned carefully, covering CPU core allocation, memory provisioning, network bandwidth, and storage I/O capacity. In a Massively Parallel Processing (MPP) architecture every node contributes to query execution, and a stage finishes only when its slowest task does, so a single overloaded node or skewed partition can slow down the whole query. Regular monitoring and proactive adjustments based on workload patterns are essential.

- Dynamic Resource Allocation: Advanced big data systems can allocate resources dynamically as workload demand changes, scaling up to avoid contention during peak loads and scaling down during off-peak times. Kubernetes-based orchestrators and cloud-native services increasingly provide this elasticity, helping queries get sufficient resources without the cost of permanent over-provisioning.
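A dynamic allocation policy can be as simple as sizing the worker pool to the current task backlog. The Python sketch below assumes hypothetical parameters (`tasks_per_executor`, `min_executors`, `max_executors`) and follows, in simplified form, the same backlog-based idea as Apache Spark's dynamic allocation; it is not any engine's exact algorithm.

```python
import math


def target_executors(pending_tasks: int, running_tasks: int, tasks_per_executor: int,
                     min_executors: int, max_executors: int) -> int:
    """Executors needed to run the whole backlog at once, clamped to [min, max].

    The floor keeps a warm minimum for off-peak queries; the ceiling caps cost
    during peaks.
    """
    if tasks_per_executor <= 0:
        raise ValueError("tasks_per_executor must be positive")
    needed = math.ceil((pending_tasks + running_tasks) / tasks_per_executor)
    return max(min_executors, min(max_executors, needed))
```

For example, 90 pending and 10 running tasks at 4 tasks per executor call for 25 executors, which a cap of 20 reduces to 20; off-peak, 3 running tasks need only 1 executor, but a floor of 2 keeps a small warm pool. Real systems add delays and idle timeouts so the pool does not oscillate.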

Caching and Materialized Views

- Implementing Data Caching: Caching frequently accessed data or query results in faster storage layers (e.g., in-memory caches, SSDs) can dramatically reduce query response times. Serving results from cache avoids re-executing complex computations or re-reading data from slower persistent storage, provided cached entries are invalidated or refreshed when the underlying data changes. AI-powered Query Plan Optimization and Caching aims to predict which data segments or query results are likely to be reused, so that limited cache space goes to the entries most likely to pay off.

- Utilizing Materialized Views: Materialized views store the pre-computed results of complex queries. For highly repetitive analytical or reporting queries, a materialized view makes the results available immediately instead of recalculating them on every request. This is especially powerful for aggregations over large datasets and gives significant speedups for dashboards and business intelligence tools, at the cost of extra storage and of keeping the view refreshed as the base tables change.
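The Python sketch below pairs the two ideas. `ResultCache` is a least-recently-used (LRU) cache of query results keyed by whitespace-normalized SQL text, with an explicit invalidation hook; `SalesByRegionView` is a hypothetical materialized aggregate (`SUM(amount) GROUP BY region`) refreshed incrementally as new rows arrive. Both are assumptions made for illustration; production systems typically key caches on plans or data versions and handle updates and deletes as well as inserts.

```python
from collections import OrderedDict
from typing import Callable, Dict, List, Tuple


class ResultCache:
    """LRU cache of query results, keyed by SQL text with whitespace collapsed."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._entries: "OrderedDict[str, object]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(sql: str) -> str:
        # Only whitespace is normalized; case is kept because string literals are case-sensitive.
        return " ".join(sql.split())

    def get_or_compute(self, sql: str, compute: Callable[[], object]) -> object:
        key = self._key(sql)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        result = compute()  # cache miss: actually run the query
        self._entries[key] = result
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return result

    def invalidate_all(self) -> None:
        """Drop every entry, e.g. after the underlying tables are modified."""
        self._entries.clear()


class SalesByRegionView:
    """Materialized SUM(amount) GROUP BY region, refreshed incrementally on inserts."""

    def __init__(self) -> None:
        self.totals: Dict[str, float] = {}

    def refresh(self, inserted_rows: List[Tuple[str, float]]) -> None:
        # Handles appends only; updates and deletes would need a fuller refresh.
        for region, amount in inserted_rows:
            self.totals[region] = self.totals.get(region, 0.0) + amount

    def total_for(self, region: str) -> float:
        """Answer the aggregate query from the stored result, without scanning base data."""
        return self.totals.get(region, 0.0)
```

The cache helps only for repeated identical queries and must be invalidated when data changes; the view helps any query that matches its aggregate, but costs storage and refresh work on every load.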

Challenges and Barriers to Adoption in Big Data Query Optimization

Despite the clear benefits, implementing effective Big Data Query Optimization faces several significant hurdles:

- Data Volume, Velocity, and Variety: The sheer scale and diversity of big data make it inherently difficult to manage and optimize. Continuously growing datasets require constant re-evaluation of indexing and partitioning strategies.

- Schema Evolution and Data Drift: Big Data environments are rarely static. As data sources evolve, schemas change (columns are added, renamed, or retyped) and value distributions shift over time (data drift), which can invalidate partitioning choices and optimizer statistics. Maintaining optimal query performance amid these changes requires continuous monitoring, schema migration strategies, and flexible query processing.

- MLOps Complexity: For data platforms that feed into Machine Learning pipelines, maintaining optimal query performance is critical for efficient model training and inference. However, integrating Big Data Query Optimization with MLOps workflows adds another layer of complexity, requiring seamless coordination between data engineering and ML engineering teams.

- Resource Contention and Cost Management: Improving performance by adding resources raises infrastructure costs, and concurrent workloads compete for the same shared capacity. Balancing performance needs with budget constraints is a perpetual challenge, particularly in cloud environments.

- Skills Gap: Mastering the intricacies of Big Data Query Optimization requires specialized skills in distributed systems, SQL tuning, data architecture, and often, specific platform knowledge. The shortage of such skilled professionals can be a significant barrier.
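Schema evolution is easier to handle when files are self-describing: formats such as Parquet store a schema with every file, so an engine reading files written at different times has to reconcile them. The Python sketch below is a simplified, hypothetical version of name-based schema merging: it unions the columns of each file version, rejects conflicting types, and fills columns that an older file lacks with `None`. Real engines also deal with type widening, renames, and nested fields.

```python
from typing import Dict, List, Optional


def merge_schemas(schemas: List[Dict[str, str]]) -> Dict[str, str]:
    """Union of columns across file versions; conflicting types raise an error."""
    merged: Dict[str, str] = {}
    for schema in schemas:
        for column, col_type in schema.items():
            existing = merged.get(column)
            if existing is not None and existing != col_type:
                raise TypeError(f"column {column!r}: {existing} vs {col_type}")
            merged[column] = col_type
    return merged


def read_with_schema(rows: List[Dict[str, object]],
                     schema: Dict[str, str]) -> List[Dict[str, Optional[object]]]:
    """Project rows onto the merged schema by column name, filling gaps with None."""
    return [{column: row.get(column) for column in schema} for row in rows]


# Hypothetical file versions: v2 added a `currency` column.
v1 = {"id": "int64", "amount": "double"}
v2 = {"id": "int64", "amount": "double", "currency": "string"}
merged = merge_schemas([v1, v2])
old_rows = [{"id": 1, "amount": 9.5}]
print(read_with_schema(old_rows, merged))
```

Matching columns by name rather than by position is what lets old and new files be queried together without rewriting history.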

Business Value and ROI of Effective Big Data Query Optimization

The investment in Big Data Query Optimization yields substantial returns across various business functions:

- Faster Insights and Decision-Making: Rapid query execution means business analysts and decision-makers get answers faster, enabling them to react quickly to market changes, identify trends, and seize opportunities. This directly impacts competitiveness and agility.

- Reduced Operational Costs: Efficient queries consume fewer computational resources (CPU, memory, I/O). This translates to lower infrastructure costs, especially in cloud-native environments where resource consumption directly correlates with billing.

- Fresher Data for AI: AI and ML applications depend on timely access to high-quality, pre-processed data. Optimized queries shorten pipeline run times, so models can be trained and served on more current data, which can improve prediction accuracy when the underlying data changes quickly.

- Improved User Experience: Applications and dashboards powered by optimized queries respond faster, leading to a smoother and more satisfying user experience for internal stakeholders and external customers alike.

- Faster Model Deployment: In MLOps, data preparation is often a bottleneck in the ML lifecycle. Optimized data retrieval shortens this phase, contributing to faster deployment and iteration cycles for AI models.

Comparative Insight: Modern Distributed Query Engines vs. Traditional Data Architectures

The evolution of data architectures has profoundly shaped how we approach query performance. To appreciate the power of modern Big Data Query Optimization techniques, it helps to compare current Distributed Query Engine paradigms with their predecessors: the traditional data lake and data warehouse models.

Traditional Data Lakes and Data Warehouses

- Data Warehouses: Historically, data warehouses were the go-to for analytical processing. They focused on structured, clean data, often conforming to a predefined schema (schema-on-write). Queries were typically optimized for specific, known reporting needs. While excellent for BI, their rigid structure and high cost often made them inflexible for rapidly evolving data needs and unsuitable for unstructured or semi-structured data. Query performance was highly dependent on pre-aggregation and well-defined ETL processes.

- Data Lakes: Data lakes emerged as a response to the need for storing raw, diverse data at scale (schema-on-read). They offered flexibility and cost-effectiveness for storing vast amounts of data in its native format. However, querying data lakes often posed significant performance challenges. Without proper organization, indexing, or metadata management, queries could devolve into full scans of petabytes of data, leading to slow response times and high computational costs. This often necessitated moving data to data warehouses or specialized engines for analytical workloads, adding latency and complexity.
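The difference between schema-on-write and schema-on-read can be sketched with raw JSON lines. In this Python example, which assumes a hypothetical two-column target schema (`user_id`, `country`), the warehouse-style loader validates records once at ingest and drops those that do not conform, while the lake-style reader keeps everything raw and interprets it at query time, paying the parsing cost on every query.

```python
import json
from typing import Dict, List, Optional

# Hypothetical target schema for the warehouse-style table.
SCHEMA = {"user_id": int, "country": str}


def _parse(line: str) -> Optional[dict]:
    """Parse one JSON line, returning None for malformed or non-object input."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return None
    return record if isinstance(record, dict) else None


def load_schema_on_write(raw_lines: List[str]) -> List[Dict[str, object]]:
    """Warehouse style: validate once at load time and keep only conforming records."""
    accepted = []
    for line in raw_lines:
        record = _parse(line)
        if (record is not None and set(record) == set(SCHEMA)
                and all(isinstance(record[col], typ) for col, typ in SCHEMA.items())):
            accepted.append(record)
    return accepted


def read_schema_on_read(raw_lines: List[str], column: str) -> List[Optional[object]]:
    """Lake style: keep raw lines and interpret them only when a query runs.

    Every query re-parses the data, and missing or unparsable values become None.
    """
    values = []
    for line in raw_lines:
        record = _parse(line)
        values.append(record.get(column) if record is not None else None)
    return values


raw = [
    '{"user_id": 1, "country": "DE"}',
    '{"user_id": "2", "country": "FR"}',  # wrong type for user_id
    'not json',
    '{"user_id": 3}',  # missing country
]
```

The warehouse path keeps only the first record but serves clean, typed data cheaply afterwards; the lake path keeps all four lines and tolerates their defects, at the price of parsing them again for every query.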

The Rise of Modern Distributed Query Engines

Modern Distributed Query Engine platforms, often built on cloud-native architectures and leveraging technologies like MPP, represent a paradigm shift in Big Data Query Optimization. They bridge the gap between the flexibility of data lakes and the performance of data warehouses:

- Hybrid Capabilities: These engines can query diverse data formats, primarily structured and semi-structured, directly in a data lake or object storage, applying schema-on-read while approaching warehouse-like performance when the data is well organized (columnar formats, sensible partitioning, up-to-date statistics).

- Massively Parallel Processing (MPP): At their core, modern distributed query engines utilize MPP architectures, where queries are broken down into smaller tasks and executed in parallel across hundreds or thousands of nodes. This parallelization is fundamental to handling the scale of Big Data and is a key enabler of fast query times.

- Advanced Optimization: They incorporate sophisticated Cost-Based Optimizers that rely on extensive Statistics and Metadata Management to generate efficient query plans. AI-powered Query Plan Optimization and Caching goes a step further, adapting to observed workloads and refining plan and caching choices over time.

- Columnar Storage and Data Partitioning: As discussed, these engines deeply integrate with columnar storage formats (Parquet, ORC) and intelligent Data Partitioning schemes, which are crucial for minimizing I/O and accelerating analytical scans.
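The core MPP pattern for aggregation is two-phase: each worker computes a partial result over its own slice of data, and a coordinator merges the much smaller partial results. The Python sketch below simulates this with a thread pool over in-memory partitions; threads merely stand in for cluster nodes (CPython threads do not execute Python code in parallel), so read it as an illustration of the data flow rather than of real speedups. The round-robin split and function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, int]  # (region, amount)


def split_into_partitions(rows: List[Row], workers: int) -> List[List[Row]]:
    """Round-robin rows across workers, standing in for data already spread over nodes."""
    partitions: List[List[Row]] = [[] for _ in range(workers)]
    for i, row in enumerate(rows):
        partitions[i % workers].append(row)
    return partitions


def partial_aggregate(partition: Iterable[Row]) -> Counter:
    """Phase 1 (runs on every worker): local SUM(amount) GROUP BY region."""
    totals: Counter = Counter()
    for region, amount in partition:
        totals[region] += amount
    return totals


def parallel_sum_by_region(rows: List[Row], workers: int = 4) -> Dict[str, int]:
    partitions = split_into_partitions(rows, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_aggregate, partitions))
    # Phase 2 (coordinator): merge the small per-worker results.
    final: Counter = Counter()
    for partial in partials:
        final.update(partial)  # Counter.update adds counts rather than replacing them
    return dict(final)
```

Only one small partial result per worker crosses the "network" to the coordinator, which is why this pattern scales to many nodes for aggregations such as `SUM` and `COUNT`.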

Competitors and Complementary Technologies

While Distributed Query Engine platforms excel in general-purpose analytical querying, other technologies serve specific niches:

- Batch Processing (MapReduce): Technologies like Apache Hadoop MapReduce are designed for large-scale batch processing rather than interactive querying. They can process massive datasets, but their high latency makes them unsuitable for interactive or real-time analytics; they are better seen as a historical foundation than as a direct competitor on interactive query performance.

- Full-Text Search Engines (e.g., Elasticsearch): These are optimized for indexing and searching textual data. While they offer incredibly fast text search capabilities, their strengths lie in inverted indexing and relevance scoring, not in complex analytical SQL queries over diverse data types. They serve a different, albeit often complementary, purpose.

- In-Memory Databases: These databases store entire datasets or large portions of them in RAM, offering extremely low-latency access. They are superb for very fast operational analytics or transactional workloads on datasets that fit in memory, but scalability for truly massive, petabyte-scale data can be cost-prohibitive compared to distributed query engines leveraging tiered storage.
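The inverted index behind full-text search engines maps each term to the documents that contain it, so a search touches only the postings for its terms instead of scanning every document. The minimal Python sketch below assumes a crude tokenizer and boolean AND matching; real engines such as Elasticsearch (built on Apache Lucene) add language analyzers, compressed postings, and relevance scoring such as BM25, none of which this sketch attempts.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lowercase and split on non-word characters (a very crude analyzer)."""
    return [token for token in re.split(r"\W+", text.lower()) if token]


def build_inverted_index(docs: Dict[int, str]) -> Dict[str, Set[int]]:
    """Map each term to the set of document IDs containing it (its postings)."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search_all_terms(index: Dict[str, Set[int]], query: str) -> Set[int]:
    """Return documents containing every query term (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))  # copy so the index is not mutated
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {1: "Parquet stores columns", 2: "ORC stores stripes", 3: "Columns and stripes"}
index = build_inverted_index(docs)
print(search_all_terms(index, "stores columns"))
```

This structure answers "which documents mention these words" very quickly, but it offers nothing for joins or grouped aggregations over typed columns, which is why search engines complement rather than replace distributed query engines.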

In essence, modern Distributed Query Engine platforms provide a versatile and high-performance solution for general-purpose Big Data Query Optimization, often acting as the central analytical layer over diverse data sources, integrating and surpassing the capabilities of traditional approaches for many workloads.

World2Data Verdict: The Future of Intelligent Query Optimization

The journey towards truly optimized Big Data querying is continuous, driven by ever-increasing data volumes and the demand for real-time insights. World2Data believes that the future of Big Data Query Optimization lies in a deeply integrated, intelligent, and adaptive approach. Organizations must move beyond ad-hoc tuning and embrace platforms that offer automated, AI-driven optimization capabilities. The critical differentiator will be systems that not only leverage columnar storage, partitioning, and MPP effectively but also integrate sophisticated AI-powered Query Plan Optimization and Caching, enabling self-tuning and predictive performance management.

For sustainable competitive advantage, enterprises should prioritize investing in modern Distributed Query Engine platforms that natively support robust Statistics and Metadata Management for Cost-Based Optimizer, ensuring that every query benefits from the most efficient execution plan possible. Furthermore, a proactive strategy for data governance, schema evolution, and resource elasticity will be paramount. By focusing on these intelligent, adaptive, and scalable solutions, organizations can unlock unprecedented value from their vast data reservoirs, transforming complex data challenges into strategic opportunities for rapid innovation and informed decision-making.