Unlocking Enterprise Potential: Essential Data Standards Every Organization Should Implement in 2025

The data standards every organization should implement in 2025 are far more than technical guidelines; they are the foundational framework for resilient, agile, and future-proof enterprises. Understanding and proactively adopting robust data standards is essential for navigating today’s complex, fast-changing information landscape. These standards are the bedrock of data quality, compliance, and innovation, ensuring that data assets deliver their full strategic value.

Introduction: The Imperative of Data Standards in a Data-Driven World

In the rapidly evolving digital landscape of 2025, data has cemented its position as the lifeblood of modern organizations. From critical operational decisions to advanced artificial intelligence initiatives, the quality, consistency, and accessibility of data directly determine an enterprise’s ability to compete and innovate. Without a well-defined and rigorously enforced set of Data Standards, however, this asset can quickly become a liability: a sprawl of inconsistent formats, conflicting definitions, and unreliable information. This article explains why establishing and adhering to robust Data Standards is no longer optional but a strategic imperative. We explore the benefits, address the challenges of adoption, and offer World2Data’s perspective on how organizations can strengthen their data foundations and fully leverage their data assets in 2025 and beyond.

Core Breakdown: Architecting Data Excellence Through Standardization

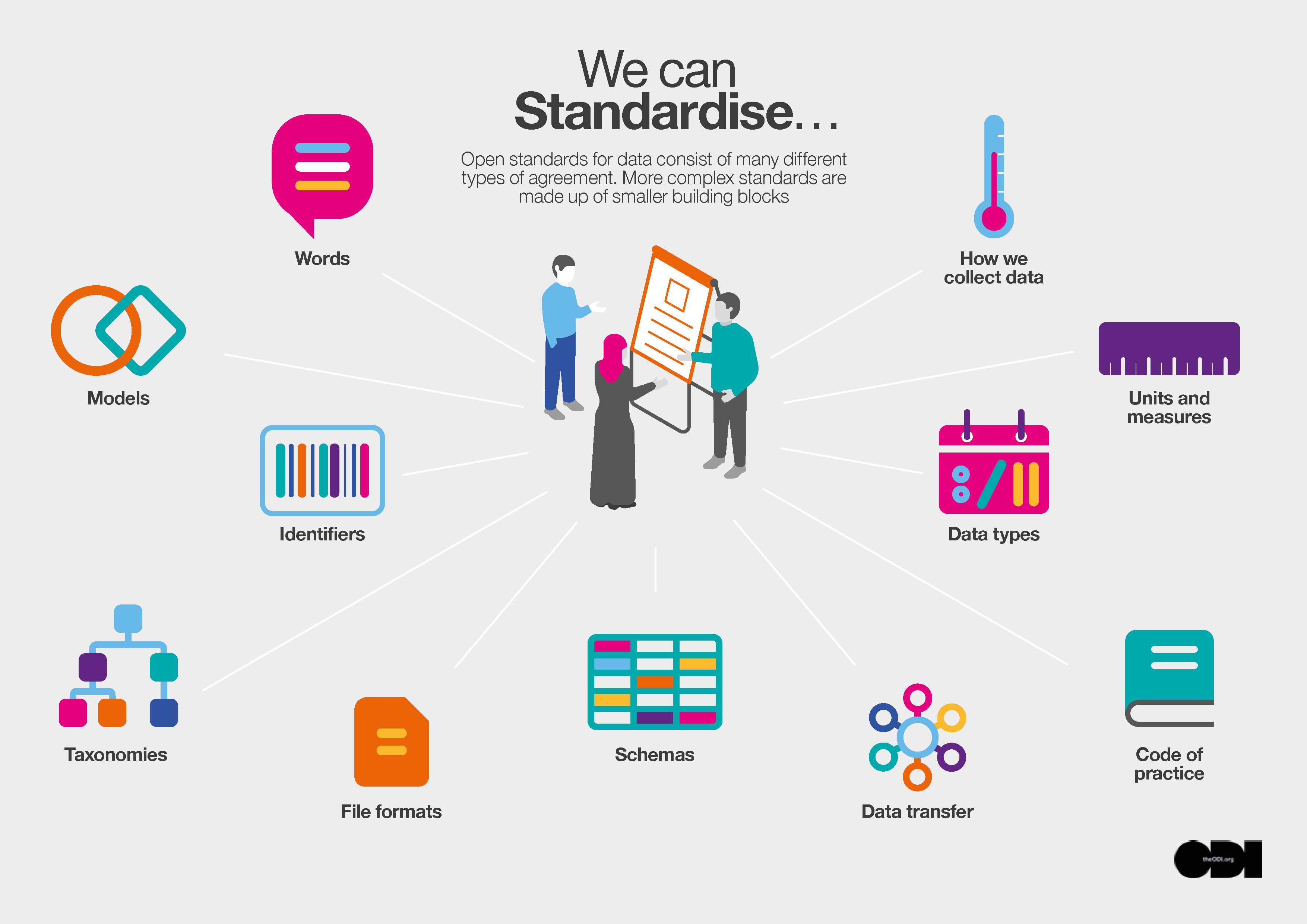

The implementation of comprehensive Data Standards involves a detailed technical and architectural analysis of an organization’s entire data ecosystem. It encompasses various dimensions, including syntactic, semantic, and structural standards, each playing a crucial role in ensuring data integrity and usability. Syntactic standards define the format and structure of data elements (e.g., date formats, currency symbols), while semantic standards clarify the meaning and interpretation of data (e.g., definitions of ‘customer’ or ‘revenue’). Structural standards, on the other hand, govern how data is organized within databases, schemas, and other repositories. Collectively, these standards form an architectural blueprint that dictates how data flows, is stored, and is consumed across the enterprise.
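To make the three layers concrete, here is a minimal Python sketch that checks a single record against a hypothetical customer standard. The field names, the `CUST-` identifier convention, and the non-negativity rule are illustrative assumptions, not an established schema; in practice such rules usually live in a schema or data-contract tool rather than hand-written code.

```python
import re

# Hypothetical standard for a "customer" record; field names and rules are illustrative.
# Structural standard: which fields must exist and their types.
STRUCTURE = {
    "customer_id": str,
    "signup_date": str,
    "currency": str,
    "lifetime_value": float,
}

# Syntactic standards: the textual format each field must follow.
SYNTAX = {
    "customer_id": re.compile(r"CUST-\d{6}"),         # assumed in-house ID convention
    "signup_date": re.compile(r"\d{4}-\d{2}-\d{2}"),  # ISO 8601 calendar date layout (YYYY-MM-DD)
    "currency": re.compile(r"[A-Z]{3}"),              # three-letter code in the style of ISO 4217
}

# Semantic standard: the agreed meaning of a field, documented next to the rules.
SEMANTICS = {
    "lifetime_value": "Net revenue from the customer to date, excluding refunds; never negative.",
}


def validate(record: dict) -> list:
    """Return a list of violations; an empty list means the record conforms."""
    errors = []
    # Structural layer: required fields and their types.
    for field, expected_type in STRUCTURE.items():
        if field not in record:
            errors.append(f"{field}: missing")
        elif not isinstance(record[field], expected_type):
            errors.append(f"{field}: expected {expected_type.__name__}")
    # Syntactic layer: check the format of string values that are present.
    for field, pattern in SYNTAX.items():
        value = record.get(field)
        if isinstance(value, str) and not pattern.fullmatch(value):
            errors.append(f"{field}: bad format {value!r}")
    # Semantic layer: a rule that follows from the documented definition.
    ltv = record.get("lifetime_value")
    if isinstance(ltv, float) and ltv < 0:
        errors.append("lifetime_value: must be non-negative per its definition")
    return errors
```

A record whose `signup_date` is `"15/01/2025"` fails the syntactic layer, while a negative `lifetime_value` passes the structural and syntactic checks but is caught by the semantic rule.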

This architectural approach often includes components that mirror the rigor of advanced data platforms, such as automated data validation rules, metadata management systems, and centralized data dictionaries. Data labeling, though usually associated with AI/ML, also depends on strong data standards to ensure consistent categorization and annotation, which is vital for training accurate models. Similarly, a Feature Store (a repository for machine learning features) depends on standardized feature definitions, transformations, and lineage tracking so that features are computed identically in training and production and experiments remain reproducible. Without these underlying standards, the value of such components diminishes significantly.
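The sketch below illustrates the Feature Store point under simplifying assumptions: a hypothetical in-memory registry in which each feature has a name, a version, a description, its upstream sources (for lineage), and one transformation function. `FeatureDefinition` and `FeatureRegistry` are invented for illustration; production feature stores persist this metadata and expose their own APIs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    version: int
    description: str
    sources: Tuple[str, ...]  # upstream tables, recorded for lineage
    transform: Callable[[dict], float]


class FeatureRegistry:
    """Hypothetical in-memory registry of standardized feature definitions."""

    def __init__(self) -> None:
        self._features: Dict[Tuple[str, int], FeatureDefinition] = {}

    def register(self, feature: FeatureDefinition) -> None:
        key = (feature.name, feature.version)
        if key in self._features:
            # Published definitions are immutable; a change requires a new version.
            raise ValueError(f"{feature.name} v{feature.version} already exists; bump the version")
        self._features[key] = feature

    def compute(self, name: str, version: int, row: dict) -> float:
        # Training and serving pipelines both call this, so they share one transformation.
        return self._features[(name, version)].transform(row)

    def lineage(self, name: str, version: int) -> Tuple[str, ...]:
        return self._features[(name, version)].sources
```

Because training and serving code both call `compute` with the same name and version, they apply the same transformation; refusing to overwrite an existing version turns any change in definition into a new, traceable version.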

Enhancing Data Quality and Reliability

Achieving high-quality data is a core business requirement. Clear Data Standards promote consistency and uniformity across internal systems and external integrations. This discipline substantially reduces data errors and discrepancies, leading to more precise reporting, more reliable insights, and, ultimately, more confident strategic decisions. Standardized data means that every department, from marketing to finance, works from a single, consistent version of information, reducing ambiguity and fostering cross-functional alignment. It also simplifies data cleansing, because anomalies are easier to identify against a clear standard baseline.
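Checking data against a standard baseline can be as simple as measuring, per field, what share of records satisfies the standard's rule. This minimal sketch assumes two hypothetical rules (a small allowed list of ISO 3166-1 alpha-2 country codes and a deliberately crude e-mail check) and an assumed 95% acceptance threshold.

```python
from typing import Callable, Dict, List

# Hypothetical rules derived from a data standard; the allowed country list is a small
# illustrative subset of ISO 3166-1 alpha-2 codes.
ALLOWED_COUNTRIES = {"DE", "FR", "US"}

RULES: Dict[str, Callable[[object], bool]] = {
    "country": lambda v: v in ALLOWED_COUNTRIES,
    "email": lambda v: isinstance(v, str) and v.count("@") == 1,  # crude check, for illustration
}


def conformance_report(records: List[dict], rules: Dict[str, Callable[[object], bool]]) -> Dict[str, float]:
    """Share of records whose value for each field satisfies the standard's rule."""
    report = {}
    for field_name, rule in rules.items():
        passed = sum(1 for record in records if rule(record.get(field_name)))
        # An empty batch has nothing that violates the standard, so report full conformance.
        report[field_name] = passed / len(records) if records else 1.0
    return report


def failing_fields(report: Dict[str, float], threshold: float = 0.95) -> List[str]:
    """Fields whose conformance falls below the (assumed) acceptable threshold."""
    return sorted(name for name, rate in report.items() if rate < threshold)
```

A value such as `"Germany"` in a field standardized on two-letter codes shows up immediately as lower conformance, rather than surfacing later as a mismatched join or a wrong total.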

Streamlining Operations and Efficiency

Well-defined Data Standards are critical enablers of seamless data exchange and interoperability between departments and technology systems. Standardization greatly reduces the need for tedious manual reconciliation, makes process automation feasible, and improves overall operational efficiency. Organizations can process information faster and spend fewer resources on repetitive tasks; the time once spent correcting errors or converting data formats can be redirected toward strategic initiatives. Standardized data also simplifies the integration of new systems and technologies, reducing implementation costs and accelerating time-to-value for new projects.
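Much of the manual reconciliation described here comes from systems that encode the same facts differently. As a sketch, assuming two hypothetical source systems (`crm` and `billing`) that emit dates in different layouts, a small adapter per source can map each record onto one shared representation:

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical source systems and the date layouts each one emits.
SOURCE_DATE_FORMATS = {
    "crm": "%d/%m/%Y",      # e.g. 15/01/2025
    "billing": "%m-%d-%Y",  # e.g. 01-15-2025
}


def to_canonical(source: str, record: dict) -> dict:
    """Map a source-specific record onto the shared standard.

    Assumed standard: ISO 8601 dates, Decimal amounts without thousands
    separators, and upper-case three-letter currency codes.
    """
    parsed = datetime.strptime(record["date"], SOURCE_DATE_FORMATS[source]).date()
    # Assumes sources use "," only as a thousands separator and "." as the decimal point.
    amount = Decimal(record["amount"].replace(",", ""))
    return {
        "date": parsed.isoformat(),
        "amount": amount,
        "currency": record["currency"].strip().upper(),
    }
```

Once both sources pass through `to_canonical`, a CRM record and a billing record describing the same transaction compare equal, so they can be joined or deduplicated without manual inspection.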

Ensuring Regulatory Compliance and Security

Amid increasingly stringent data privacy legislation worldwide, Data Standards serve as a crucial compliance mechanism. They give organizations a framework for meeting regulatory requirements such as GDPR, CCPA, HIPAA, or industry-specific mandates by specifying how sensitive data is collected, stored, processed, and shared. This proactive approach strengthens data security and builds customer trust. By standardizing data classification and access controls, organizations can clearly identify sensitive information, apply appropriate security measures, and demonstrate accountability to auditors. The result is lower legal and reputational risk, with compliance built into data operations rather than treated as a reactive burden.
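Standardized classification can drive access control directly. In this minimal sketch, each field carries a sensitivity level and each role a clearance; the levels, field assignments, and roles are hypothetical and are not prescribed by any of the regulations named above.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical classification standard: every field is assigned a sensitivity level.
FIELD_CLASSIFICATION = {
    "customer_id": Sensitivity.INTERNAL,
    "country": Sensitivity.PUBLIC,
    "email": Sensitivity.CONFIDENTIAL,
    "diagnosis_code": Sensitivity.RESTRICTED,  # health data, e.g. potentially in HIPAA scope
}

# Hypothetical role clearances: the highest level each role may see unmasked.
ROLE_CLEARANCE = {
    "analyst": Sensitivity.INTERNAL,
    "support": Sensitivity.CONFIDENTIAL,
    "clinician": Sensitivity.RESTRICTED,
}

MASK = "***"


def view_for_role(record: dict, role: str) -> dict:
    """Return a copy of the record with fields above the role's clearance masked."""
    clearance = ROLE_CLEARANCE[role]
    view = {}
    for name, value in record.items():
        # Fields missing from the classification standard are treated as RESTRICTED (deny by default).
        level = FIELD_CLASSIFICATION.get(name, Sensitivity.RESTRICTED)
        view[name] = value if level <= clearance else MASK
    return view
```

Treating unclassified fields as restricted means a new column stays hidden until someone classifies it, which keeps compliance part of routine data operations rather than an afterthought.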

Fostering Better Analytics and Business Intelligence

Consistently standardized data provides a clean, unified, and dependable base for advanced analytics. With uniform formats and precise definitions, organizations can produce accurate reports, build sophisticated predictive models, and extract meaningful business intelligence. Data scientists spend less time on data wrangling and more on model development and interpretation, accelerating the analytics lifecycle. Because the underlying data is consistent, the insights derived from it can be trusted, leading to better-informed strategic planning and operational adjustments.

Driving Innovation and Digital Transformation

Robust Data Standards prepare an organization for future technological change. They provide the structural support required for artificial intelligence and machine learning initiatives, cloud migrations, and other digital transformation efforts, making a well-standardized data environment a prerequisite for sustained innovation and competitive advantage. Standardized data is readily usable for training AI models and integrating with emerging technologies such as blockchain or IoT, which lowers the barrier to innovation and enables rapid experimentation.

Challenges and Barriers to Adoption

While the benefits of Data Standards are compelling, organizations often face significant hurdles in their implementation and widespread adoption. One primary challenge is the existence of legacy systems, which frequently operate on outdated or proprietary data formats, making integration and standardization a complex and costly endeavor. Migrating or adapting these systems to new standards requires substantial investment in time, resources, and often, specialized expertise.

Another significant barrier is organizational resistance. Implementing data standards requires cultural change, often asking departments to alter established workflows and data-handling practices. Without strong executive sponsorship and clear communication of the long-term benefits, employees may see standardization as an unnecessary burden, leading to slow adoption or workarounds that bypass the standards altogether. A lack of clear data ownership and accountability further complicates matters, as different teams may hold conflicting views on how data should be defined or managed.

Data drift, a concept more commonly discussed in MLOps, also poses a subtle challenge to data standards. Even when standards are in place, real-world data evolves over time and can deviate from established definitions, which requires continuous monitoring and periodic revision of the standards and adds ongoing operational overhead. MLOps is especially sensitive to this: keeping data consistent from training to production depends on robust, evolving data standards. If data pipelines are not standardized, it becomes very difficult to ensure that the data a model receives in production matches the data it was trained on, which leads to performance degradation and unreliable predictions.
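One common way to monitor drift in a categorical field is the population stability index (PSI), which compares category shares in a reference sample (for example, training data) with a current sample (for example, production data). The sketch below is one possible approach, not the only one; it also lists values that fall outside the field's permitted domain, which is where drift meets the data standard directly. The `eps` floor is an assumption that avoids taking the log of zero, and the frequently quoted alert level of about 0.2 is a rule of thumb rather than a standard.

```python
import math
from collections import Counter


def category_shares(values, categories, eps=1e-6):
    """Share of each category in `values`, floored at `eps` so log ratios stay finite."""
    counts = Counter(values)
    total = len(values)  # assumes a non-empty sample
    return {c: max(counts[c] / total, eps) for c in categories}


def population_stability_index(expected, actual):
    """PSI between a reference sample (e.g. training) and a current sample (e.g. production)."""
    categories = set(expected) | set(actual)
    e = category_shares(expected, categories)
    a = category_shares(actual, categories)
    return sum((a[c] - e[c]) * math.log(a[c] / e[c]) for c in categories)


def out_of_standard(values, allowed):
    """Values outside the standard's permitted domain, sorted for stable reporting."""
    return sorted(set(values) - set(allowed))
```

Running both checks on a schedule turns "continuous monitoring" into a concrete signal: a rising PSI flags a shift in distribution, while a non-empty `out_of_standard` result flags values the standard does not yet define.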

Finally, the sheer cost and complexity of defining, documenting, and enforcing standards across a large, diverse data estate can be daunting. It requires dedicated data governance teams, investment in metadata management tools, and continuous training. Organizations must weigh these upfront and ongoing costs against the long-term ROI, which, while substantial, may not always be immediately apparent in quarterly reports.

Business Value and ROI of Data Standards

The return on investment (ROI) from implementing robust Data Standards manifests in numerous tangible and intangible ways. Firstly, there’s a direct reduction in operational costs. By minimizing manual data cleaning, reconciliation, and transformation, organizations save significant staff hours and computational resources. The improved efficiency across data pipelines, from ingestion to consumption, translates into faster processing cycles and reduced overhead.

Secondly, faster model deployment and improved accuracy in AI/ML initiatives represent a critical business value. With standardized, high-quality data, data scientists can accelerate the development and deployment of machine learning models. This directly leads to quicker time-to-market for AI-powered products and services, providing a significant competitive edge. Data quality for AI is not merely a technical requirement; it is a business enabler. Standardized data reduces the risk of ‘garbage in, garbage out,’ ensuring that AI models deliver reliable, actionable insights, thereby maximizing the value derived from AI investments.

Beyond cost savings and AI acceleration, data standards enhance strategic decision-making. Reliable and consistent data empowers leaders to make more informed choices, reducing risk and identifying new opportunities with greater clarity. This improved foresight can lead to better market positioning, optimized resource allocation, and more effective customer engagement strategies. The reduction in legal and reputational risks associated with data breaches or non-compliance also offers substantial financial protection. Ultimately, a standardized data environment builds trust—both internally among departments and externally with customers and regulators—solidifying an organization’s reputation as a reliable and responsible data steward, which is an invaluable asset in the digital economy.

Comparative Insight: Data Standards vs. Traditional Data Environments

To truly appreciate the transformative power of comprehensive Data Standards, it’s insightful to compare an environment where they are rigorously implemented against a more traditional data lake or data warehouse setup lacking such strong governance.

In a traditional data lake, the primary focus is often on ingesting vast amounts of raw data, regardless of its format or structure. While this offers flexibility and scalability for storing diverse data types, it frequently devolves into a “data swamp.” Without predefined Data Standards, data within these lakes often lacks consistency, meaning, and discoverability. Different departments might store similar information (e.g., customer IDs, product names) using varying formats, spellings, or definitions. This inconsistency necessitates extensive, often manual, data cleaning and transformation efforts before any meaningful analysis can occur. Data scientists spend an inordinate amount of time on data wrangling, leading to slow project cycles, higher costs, and a high probability of analytical errors due to ambiguity. Data lineage is often fragmented, making it difficult to trace data origins or understand transformations, which complicates compliance and debugging.
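Reconciling identifiers is a typical wrangling task in such a lake. Assuming a hypothetical enterprise standard of the form `CUST-` followed by six digits, a canonicalization function can collapse departmental variants into one form. The digits-only rule is a simplification: it would wrongly merge IDs that differ only in their non-numeric parts, so a real standard would need stricter parsing.

```python
import re

# The first run of digits is taken as the customer number (simplifying assumption).
_DIGITS = re.compile(r"\d+")


def canonical_customer_id(raw: str) -> str:
    """Normalize variant spellings to the (assumed) standard form CUST-NNNNNN."""
    match = _DIGITS.search(raw)
    if match is None:
        raise ValueError(f"no numeric part in customer id {raw!r}")
    return f"CUST-{int(match.group()):06d}"
```

Applied at ingestion, this lets records such as `cust-00123` from one department and `CUST00123` from another resolve to the same customer before any analysis begins.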

Similarly, traditional data warehouses, while offering more structured storage, can also suffer from a lack of overarching Data Standards, especially across disparate systems. While ETL processes might impose some consistency, the semantic understanding of data can still vary. For instance, “active customer” might mean different things to marketing versus sales. This semantic drift leads to conflicting reports and undermines confidence in the central source of truth. Data silos, where different departments maintain their own versions of data, are another common problem, directly hindering cross-functional collaboration and creating a fragmented view of the business.
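The remedy for this kind of semantic drift is a single shared definition that every team calls instead of re-implementing. As an illustration only, assume governance defines an active customer as one with a purchase in the 90 days up to and including the reporting date:

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical enterprise-wide definition, owned by the data governance team.
ACTIVE_WINDOW = timedelta(days=90)


def is_active_customer(last_purchase: Optional[date], as_of: date) -> bool:
    """'Active' means at least one purchase in the 90 days up to and including `as_of`."""
    if last_purchase is None:
        return False  # never purchased, so not active under this definition
    return as_of - ACTIVE_WINDOW <= last_purchase <= as_of
```

If marketing and sales both compute their counts through `is_active_customer`, their reports can still differ in the data they cover, but not in what "active" means.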

In stark contrast, an organization that has rigorously implemented Data Standards operates a unified, coherent data ecosystem. Data is ingested, stored, and processed according to predefined rules for format, definition, and quality, so a ‘customer ID’ has the same structure and meaning across the entire enterprise. Metadata is rich, standardized, and easily accessible, providing clear context and lineage for every data asset. Data consumers, whether analysts, business users, or AI models, can trust the data without extensive preprocessing, which accelerates both insight generation and model deployment. Interoperability improves markedly because systems share common formats and definitions, so new use cases no longer require a custom integration each time. This shift from a chaotic, reactive data environment to a proactive, standardized one reduces operational friction, strengthens security and compliance, and unlocks the strategic potential of an organization’s data assets, making it a true competitive differentiator in 2025.

World2Data Verdict: The Unifying Catalyst for Future-Ready Enterprises

The move toward comprehensive Data Standards is not a passing recommendation for 2025; it is a strategic imperative for any organization that aims to thrive, not merely survive, in an increasingly data-driven global economy. World2Data’s verdict is unequivocal: organizations that prioritize and systematically implement robust Data Standards will emerge as leaders in their industries. These standards act as the unifying catalyst that turns disparate data points into a cohesive, intelligent, and trustworthy enterprise asset. We recommend that organizations establish a dedicated data governance framework with executive sponsorship, invest in metadata management tools, and foster a culture of data literacy and accountability. The future belongs to those who master their data, and Data Standards are the indispensable blueprint for that mastery.