On-Prem Data Platform: When Local Infrastructure Still Matters

In an era dominated by ubiquitous cloud solutions, an On-Prem Data Platform might initially look like a legacy approach. However, for organizations navigating stringent regulatory landscapes, handling highly sensitive information, or demanding specialized performance, local infrastructure remains not just relevant but often essential. An On-Prem Data Platform keeps your most valuable asset, data, under direct physical and logical control, offering advantages that public clouds cannot always replicate. For businesses that want full command over their digital foundation, it provides an environment tailored to critical operations.

Introduction: Reasserting the Value of On-Premise Data Infrastructure

The paradigm shift towards cloud computing has reshaped enterprise IT, offering agility, scalability, and reduced upfront costs. Yet this narrative often overshadows the enduring and, in many cases, indispensable role of the On-Prem Data Platform. Far from being an outdated concept, on-premise solutions remain a cornerstone in sectors ranging from finance and healthcare to government and defense, where data sovereignty, strong security, and predictable performance are non-negotiable. This article examines the core components, advantages, and specific scenarios in which investing in and maintaining an On-Prem Data Platform provides a more controlled and often more cost-effective long-term solution for complex enterprise data needs. We will explore its architecture, critical capabilities, inherent challenges, and business value, and contrast it with prevalent cloud alternatives to show why local infrastructure still matters.

Core Breakdown: The Anatomy of a Robust On-Prem Data Platform

An On-Prem Data Platform is a meticulously engineered ecosystem designed to manage, process, and store vast quantities of data within an organization’s own physical data centers. This localized infrastructure provides granular control over every layer, from hardware to applications, distinguishing it fundamentally from multi-tenant cloud environments. It’s a comprehensive approach that integrates various components to support diverse data workflows.

Architectural Foundations and Core Technologies

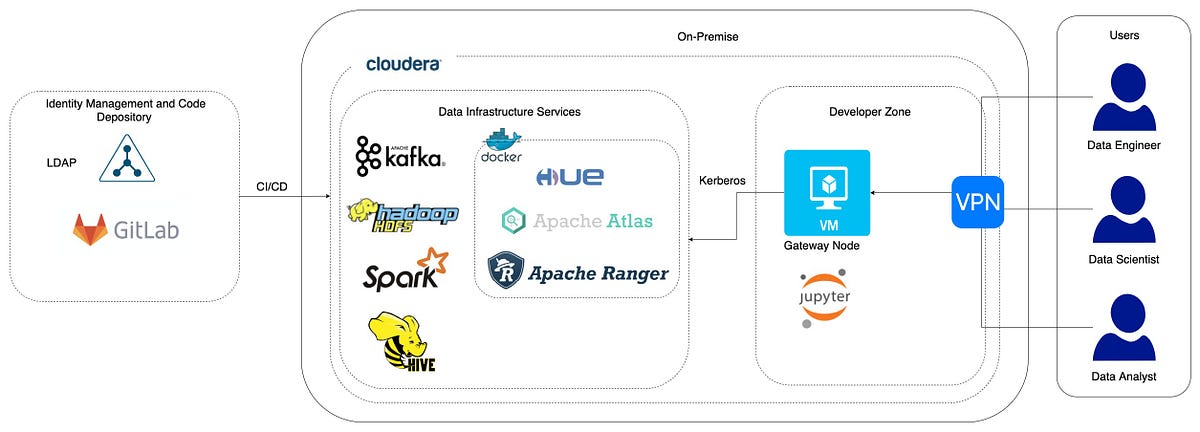

The foundation of an On-Prem Data Platform is built upon robust and carefully selected infrastructure. This often includes:

- Bare-metal servers: Providing raw computing power and direct access to hardware resources, critical for high-performance computing and specialized workloads.

- Virtualized environments (VMware, Hyper-V): Abstracting hardware to create flexible and scalable virtual machines, optimizing resource utilization and simplifying management.

- Distributed file systems (HDFS): Essential for handling massive datasets, enabling parallel processing and high fault tolerance, and a common foundation for Data Lake implementations.

- Traditional relational database management systems (RDBMS): Forming the backbone for structured data, transactional systems, and Enterprise Data Warehouse functionalities.

- Private cloud infrastructure: Leveraging technologies like OpenStack or VMware Cloud Foundation to deliver cloud-like agility and resource provisioning within the confines of a private data center.

- Kubernetes for container orchestration: Increasingly vital for deploying and managing containerized applications, enabling microservices architectures and efficient resource scaling for modern data pipelines and operational databases.

Key Components and Capabilities

A mature On-Prem Data Platform integrates a suite of specialized tools and systems to address the full spectrum of data management:

- Enterprise Data Warehouse (EDW): Optimized for reporting and analytical queries on structured, historical data, providing a single source of truth for business intelligence.

- Data Lake: A vast repository for raw, unstructured, semi-structured, and structured data, facilitating advanced analytics, machine learning, and data exploration.

- Operational Databases: Supporting mission-critical applications with high transaction volumes and real-time data needs.

- ETL/ELT tools: Comprehensive solutions for extracting, transforming, and loading data from various sources into the data platform components, ensuring data quality and integration.

- Business Intelligence platforms: Tools for data visualization, dashboarding, and interactive reporting, empowering users to derive insights from prepared data.

- Stream Processing: Capabilities for ingesting and processing real-time data streams (e.g., Apache Kafka with Flink/Spark Streaming), crucial for immediate analytics and responsive applications.

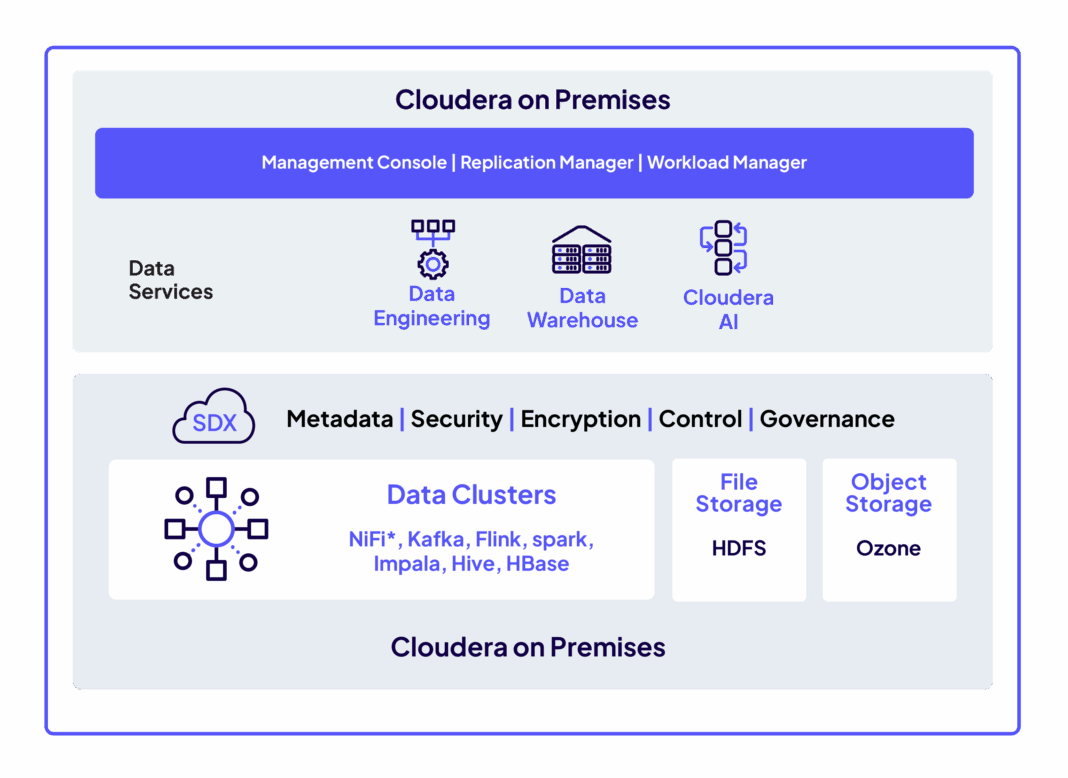

- Private Cloud Data Platforms: Solutions that consolidate these components into an integrated, self-service environment, mimicking public cloud experiences but with private infrastructure control.
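To make the ETL/ELT step concrete, here is a minimal sketch in Python. It assumes a CSV export as the source and uses the standard-library `sqlite3` module as a stand-in for an on-prem warehouse; the table name `fact_orders`, the `OrderRow` type, and the sample rows are hypothetical. Rows that fail validation are set aside rather than loaded, which is the "ensuring data quality" part of the pipeline in miniature.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from an operational system.
RAW_ORDERS_CSV = """order_id,customer_email,amount,currency
1001,alice@example.com,120.50,EUR
1002,bob@example.com,not_a_number,EUR
1003,carol@example.com,75.00,eur
"""

OrderRow = tuple[int, float, str]


def extract(csv_text: str) -> list[dict[str, str]]:
    """Extract: parse the raw CSV export into one dict per row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict[str, str]]) -> tuple[list[OrderRow], list[dict[str, str]]]:
    """Transform: validate and normalize rows; set invalid rows aside."""
    clean: list[OrderRow] = []
    rejected: list[dict[str, str]] = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            rejected.append(row)  # data-quality failure: quarantine, do not load
            continue
        # customer_email is deliberately not carried into the fact table.
        clean.append((int(row["order_id"]), round(amount, 2), row["currency"].upper()))
    return clean, rejected


def load(conn: sqlite3.Connection, rows: list[OrderRow]) -> None:
    """Load: upsert validated rows into a warehouse-style fact table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL NOT NULL, currency TEXT NOT NULL)"
    )
    conn.executemany("INSERT OR REPLACE INTO fact_orders VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for an on-prem EDW connection
    clean_rows, rejected_rows = transform(extract(RAW_ORDERS_CSV))
    load(conn, clean_rows)
    print(conn.execute("SELECT COUNT(*), SUM(amount) FROM fact_orders").fetchone())
    print("rejected rows:", len(rejected_rows))
```

In a real platform the same three stages would typically be run by a dedicated ETL tool or orchestrator, and the load target would be the EDW or Data Lake rather than an in-memory database.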

Data Governance and Security: Unlocking Unmatched Data Control

Maintaining sovereignty over data is paramount in many sectors. An On-Prem Data Platform provides direct control that helps satisfy regulatory requirements such as GDPR, HIPAA, CCPA, or industry-specific mandates by keeping data within defined geographical and security boundaries. Sensitive information resides on servers you own, in facilities you manage, so you decide who can reach it and how. This direct oversight reduces exposure to third parties and preserves full ownership, which is critical for safeguarding proprietary business intelligence. Key data governance features include:

- Role-Based Access Control (RBAC) at database/OS level: Granular permissions ensuring only authorized personnel and applications can access specific data.

- Network segmentation and firewall rules: Isolating sensitive data networks and controlling traffic flow to prevent unauthorized access.

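- Data Masking and Encryption at rest/in transit: Protecting stored and transmitted data with strong encryption (for example, AES for data at rest and TLS for data in transit) and obscuring sensitive fields through masking so that users see only what their role requires.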

- On-premise Data Catalogs: Centralized metadata management to discover, understand, and govern data assets across the platform.

- Audit logging and compliance tooling: Comprehensive logging of all data access and modification activities, crucial for regulatory reporting and forensic analysis.
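The RBAC, masking, and audit-logging controls above can be illustrated together with a small Python sketch. The role names, the `ROLE_PERMISSIONS` map, the `SENSITIVE_COLUMNS` set, and the `read_record` helper are all hypothetical; in practice these controls are usually enforced by the database itself (GRANTs, masking policies, native audit logs) rather than in application code. The point is the order of operations: decide, record the decision, then return data with sensitive fields masked for roles that are not cleared to see them.

```python
import json
import logging
from dataclasses import dataclass
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("audit")

# Hypothetical policy: role -> set of (dataset, action) pairs it may perform.
ROLE_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "analyst": {("customers", "read")},
    "dba": {("customers", "read"), ("customers", "write")},
}
UNMASKED_ROLES = {"dba"}               # roles cleared to see raw sensitive values
SENSITIVE_COLUMNS = {"email", "iban"}  # columns masked for everyone else


@dataclass(frozen=True)
class User:
    name: str
    role: str


def mask(value: str, visible: int = 4) -> str:
    """Show only the last `visible` characters; fully mask short values."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def audit(user: User, dataset: str, action: str, allowed: bool) -> None:
    """Write one structured (JSON) audit record per access decision."""
    audit_log.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user.name,
        "role": user.role,
        "dataset": dataset,
        "action": action,
        "allowed": allowed,
    }))


def read_record(user: User, dataset: str, record: dict[str, str]) -> dict[str, str]:
    """Check role permissions, record the decision, then return the (possibly masked) record."""
    allowed = (dataset, "read") in ROLE_PERMISSIONS.get(user.role, set())
    audit(user, dataset, "read", allowed)  # denials are logged too
    if not allowed:
        raise PermissionError(f"{user.name} ({user.role}) may not read {dataset}")
    if user.role in UNMASKED_ROLES:
        return dict(record)
    return {k: mask(v) if k in SENSITIVE_COLUMNS else v for k, v in record.items()}


if __name__ == "__main__":
    row = {"id": "42", "email": "alice@example.com", "iban": "DE89370400440532013000"}
    print(read_record(User("ana", "analyst"), "customers", row))
    try:
        read_record(User("guest", "viewer"), "customers", row)
    except PermissionError as exc:
        print("denied:", exc)
```

Logging denied attempts as well as successful reads is what makes the trail useful for forensic analysis, since probing for data one is not entitled to is often the first signal of misuse.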

AI/ML Integration

For advanced analytics and machine learning, an On-Prem Data Platform offers significant advantages, particularly for sensitive data or compute-intensive models:

- Local GPU clusters: Dedicated hardware for accelerating machine learning training and inference, which can deliver more predictable performance and, for sustained high-utilization workloads, better cost efficiency than on-demand cloud GPU instances.

- On-premise ML platforms: Solutions like Cloudera Data Science Workbench or self-managed Jupyter notebooks running on local servers provide controlled environments for data scientists.

- Open-source ML frameworks (TensorFlow, PyTorch) on local servers: Freedom to deploy and manage leading ML frameworks without relying on a cloud provider's managed services or paying per-use platform fees.

- Integration with edge devices for real-time analytics: Seamless data flow from IoT devices at the edge directly into local processing units, enabling ultra-low latency decision-making and immediate operational responses.

Challenges and Barriers to Adoption

While the benefits of an On-Prem Data Platform are compelling, organizations must also contend with inherent challenges:

- Significant Upfront Capital Expenditure (CapEx): Initial investment in hardware, software licenses, and data center infrastructure can be substantial, requiring careful financial planning.

- Operational Overhead and Maintenance Burden: Managing physical servers, networking, storage, and software updates requires a dedicated and skilled IT team, encompassing tasks like patching, backups, and disaster recovery.

- Scalability Limitations: While robust, scaling an on-premise environment can be slower and more complex than in the cloud, often requiring procurement cycles and manual installation. Rapid, elastic scaling to meet unpredictable spikes in demand can be particularly challenging.

- Complexity in Expertise Requirements: Building and maintaining a sophisticated On-Prem Data Platform demands a broad range of specialized skills, including database administration, network engineering, virtualization, and security architecture, and the people who have them can be difficult and costly to hire and retain.

- Disaster Recovery and Business Continuity: Designing and implementing robust disaster recovery for on-premise infrastructure requires significant planning, investment in redundant systems and secondary sites, and regular testing. In the cloud, much of the underlying redundancy is available as managed services, although customers remain responsible for designing and testing their own recovery plans.
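The procurement-cycle point under Scalability Limitations can be quantified. Assuming storage grows at a steady compound monthly rate g (a simplification; real growth is often lumpier), the months until a cluster fills are m = ln(capacity / used) / ln(1 + g), and new hardware has to be ordered at least one procurement lead time before that. The function names, the one-month safety buffer, and the figures below are hypothetical and only illustrate the arithmetic.

```python
import math


def months_until_full(used_tb: float, capacity_tb: float, monthly_growth: float) -> float:
    """Months until used storage reaches capacity, assuming compound monthly growth.

    Solves used_tb * (1 + monthly_growth) ** m == capacity_tb for m.
    """
    if used_tb <= 0:
        raise ValueError("used_tb must be positive")
    if used_tb >= capacity_tb:
        return 0.0
    if monthly_growth <= 0:
        return math.inf  # no growth: the cluster never fills
    return math.log(capacity_tb / used_tb) / math.log(1 + monthly_growth)


def order_deadline(used_tb: float, capacity_tb: float, monthly_growth: float,
                   lead_time_months: float, buffer_months: float = 1.0) -> float:
    """Months from now by which new hardware must be ordered (negative means already late)."""
    runway = months_until_full(used_tb, capacity_tb, monthly_growth)
    return runway - lead_time_months - buffer_months


if __name__ == "__main__":
    # Hypothetical cluster: 600 TB used of 1,000 TB, 3% growth per month,
    # 4-month procurement and installation lead time.
    print(f"runway: {months_until_full(600, 1000, 0.03):.1f} months")
    print(f"order within: {order_deadline(600, 1000, 0.03, lead_time_months=4):.1f} months")
```

With these placeholder inputs the cluster fills in roughly 17 months, so the purchase order must go out within about 12 months. A cloud deployment would absorb the same growth without a purchase decision, which is the trade-off the scalability bullet describes.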

Business Value and Return on Investment (ROI)

Despite the challenges, the strategic advantages offered by an On-Prem Data Platform translate into significant business value and a strong ROI for specific use cases:

- Unparalleled Data Control and Sovereignty: Critical for adhering to strict regulatory compliance (GDPR, HIPAA) and maintaining complete ownership of sensitive data, preventing data residency issues.

- Superior Performance Optimization for Demanding Workloads: For applications requiring ultra-low latency and high throughput, such as real-time analytics, high-frequency trading, or large-scale IoT processing, dedicated on-prem infrastructure delivers predictable, fast data access and processing. Keeping compute next to the data avoids wide-area network round-trips, and dedicated hardware avoids contention with other tenants for shared resources.

- Enhanced Security Posture: By keeping data within controlled physical and logical boundaries, organizations can implement highly customized security measures and reduce their exposure to external threats, including the risks that come with sharing infrastructure in multi-tenant cloud environments.

- Cost Predictability and Long-Term Value: While cloud promises flexibility, on-premise solutions offer more predictable costs, especially over the long term and for steady workloads. Organizations avoid unexpected egress fees, API call charges, and fluctuating monthly bills. The initial CapEx becomes a controlled investment that builds on existing IT infrastructure and expertise, yielding a clearer Total Cost of Ownership (TCO) over several years.

- Strategic Independence and Customization Potential: An on-prem data platform gives businesses strategic independence by reducing their dependence on any single cloud provider's services and pricing. Organizations can fully customize their data environment, selecting the hardware, software, and networking components that match their operational requirements and strategic goals.

- Leveraging Existing Investments: Many enterprises have significant investments in existing data center infrastructure and trained personnel. An on-prem strategy allows them to maximize these assets, extending their lifespan and ensuring a smoother transition or integration rather than a complete overhaul.
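The cost-predictability argument ultimately comes down to comparing cumulative spend over time. The sketch below is a deliberately simple TCO model, assuming that on-prem cost is up-front CapEx repeated at each hardware refresh plus yearly OpEx, and that cloud cost is a flat monthly bill plus egress. Every figure in the example is a hypothetical placeholder, not a quote from any vendor, and a real model would add discounting, staffing changes, and workload growth.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class OnPremCosts:
    capex: float         # hardware, licenses, data-center build-out per purchase
    annual_opex: float   # power, cooling, support contracts, staff share
    refresh_years: int   # hardware is repurchased every N years


@dataclass
class CloudCosts:
    monthly_base: float          # compute + storage, assumed flat
    monthly_egress_tb: float     # data leaving the cloud each month
    egress_price_per_tb: float   # hypothetical rate, not a real price list


def on_prem_tco(c: OnPremCosts, years: int) -> float:
    """Cumulative on-prem cost: one CapEx per refresh cycle started, plus OpEx."""
    purchases = math.ceil(years / c.refresh_years)
    return purchases * c.capex + years * c.annual_opex


def cloud_tco(c: CloudCosts, years: int) -> float:
    """Cumulative cloud cost: flat monthly bill plus egress charges."""
    monthly = c.monthly_base + c.monthly_egress_tb * c.egress_price_per_tb
    return years * 12 * monthly


def break_even_year(onp: OnPremCosts, cloud: CloudCosts, horizon: int = 10) -> Optional[int]:
    """First year in which cumulative on-prem spend is at or below cloud spend."""
    for year in range(1, horizon + 1):
        if on_prem_tco(onp, year) <= cloud_tco(cloud, year):
            return year
    return None


if __name__ == "__main__":
    onp = OnPremCosts(capex=1_200_000, annual_opex=250_000, refresh_years=5)
    cloud = CloudCosts(monthly_base=45_000, monthly_egress_tb=50, egress_price_per_tb=90)
    for year in range(1, 7):
        print(f"year {year}: on-prem {on_prem_tco(onp, year):>12,.0f}  "
              f"cloud {cloud_tco(cloud, year):>12,.0f}")
    print("break-even year:", break_even_year(onp, cloud))
```

With these placeholder inputs, on-prem becomes cheaper in year 4, but the comparison flips back in year 6 when the first hardware refresh lands, which is why a TCO comparison should span at least one full refresh cycle before drawing conclusions.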

Comparative Insight: On-Premise vs. Cloud Data Platforms

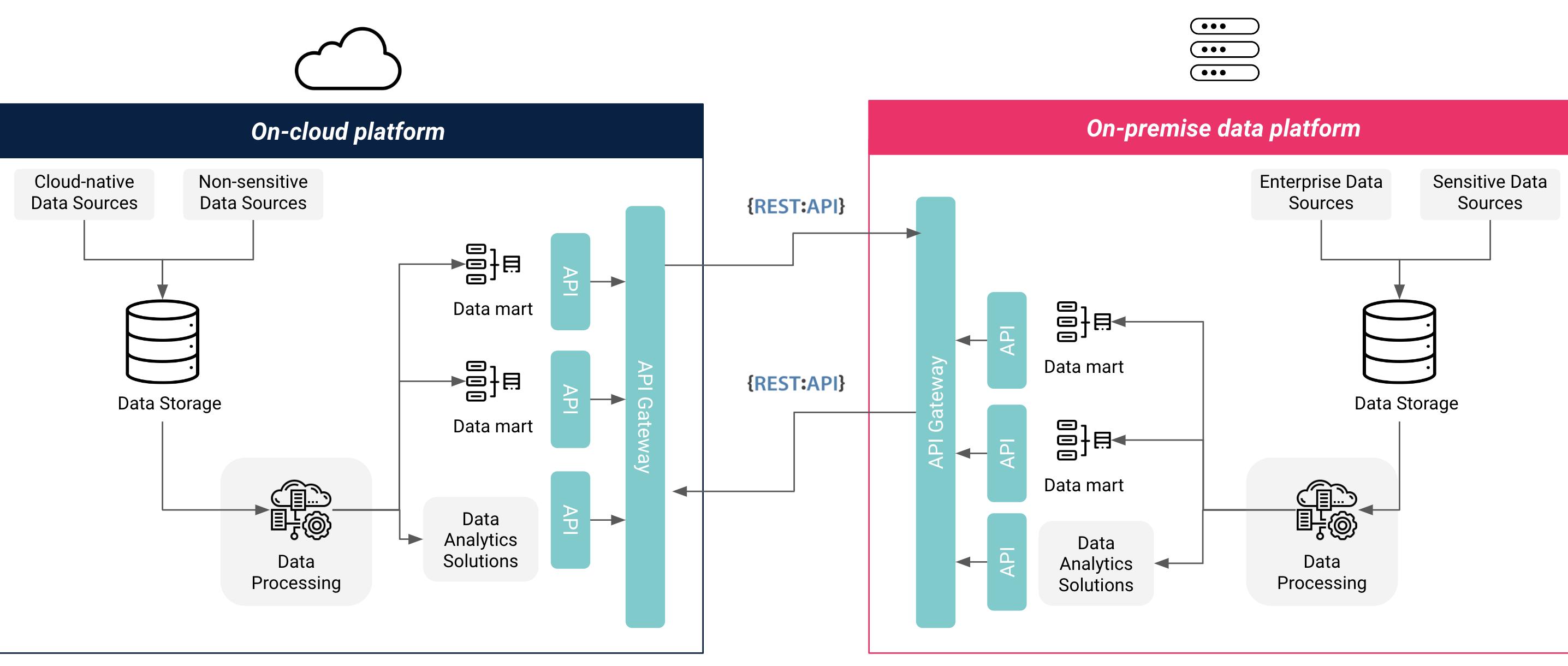

The decision between an On-Prem Data Platform and a cloud-based solution is a critical strategic choice, each with distinct characteristics impacting cost, performance, security, and operational flexibility. Traditional data lakes and data warehouses, historically on-premise, have evolved significantly, but their fundamental principles of data management persist whether hosted locally or in the cloud. The primary competitors and alternatives to a purely on-prem model are:

- Public Cloud Data Platforms (AWS, Azure, Google Cloud Platform): Offer immense scalability, pay-as-you-go pricing, and a vast ecosystem of managed services (e.g., Amazon S3, Azure Data Lake Storage, Google Cloud Storage). They abstract away infrastructure management but introduce concerns around data residency, egress fees, and vendor lock-in.

- Cloud Data Warehouses (Snowflake, Google BigQuery, Amazon Redshift): Provide highly scalable, fully managed analytical databases designed for complex querying and business intelligence, often with consumption-based pricing. While powerful, customization and direct hardware control are limited.

- Cloud Data Lakes (Databricks Lakehouse Platform, Amazon S3 with Athena/EMR): Combine the flexibility of a data lake with data warehousing capabilities, offering unified data management for various data types and workloads. They excel in agility but require careful cost management and architectural design to avoid sprawl.

- Hybrid Cloud Data Platforms: Represent a middle ground, combining on-premise infrastructure with public cloud resources. This model allows organizations to keep sensitive data on-prem while leveraging cloud for burstable workloads, disaster recovery, or specific services. It offers flexibility but introduces complexity in integration and management.

While cloud platforms offer agility and reduced upfront costs, the On-Prem Data Platform shines in scenarios demanding absolute data sovereignty, regulatory adherence, and predictable high performance. For instance, a financial institution handling proprietary trading algorithms and highly sensitive customer data might find an on-premise solution indispensable for regulatory compliance and ultra-low latency processing, where milliseconds can mean millions. Conversely, a startup focused on rapid iteration and global reach might opt for a public cloud due to its immediate scalability and managed services, deferring infrastructure concerns. The choice ultimately hinges on an organization’s specific data sensitivity, performance requirements, regulatory obligations, existing infrastructure investments, and long-term cost strategy. For those who prioritize control and specialized performance, the on-premise model remains a compelling and often superior choice.

World2Data Verdict: The Enduring Imperative of Strategic Local Data Infrastructure

The narrative of cloud dominance often overshadows the pragmatic realities faced by many enterprises. World2Data believes that while cloud adoption will continue its ascent, the strategic importance of the On-Prem Data Platform is far from diminished; rather, it is evolving into a specialized, high-value asset. For organizations where data sovereignty, high performance for critical workloads, uncompromising security, and predictable long-term costs are paramount, local infrastructure is not merely an option but an imperative. We predict a future where on-prem platforms will increasingly serve as the stable core for highly regulated, data-intensive industries, complemented by hybrid cloud strategies for less critical, burstable workloads. Therefore, enterprises must critically assess their unique operational landscape and data requirements, recognizing that a well-architected and well-managed On-Prem Data Platform offers unparalleled control and strategic independence, cementing its role as a vital component of a resilient, future-proof data strategy.