AI-Powered Real-Time Data Anomaly Detection: Safeguarding Modern Enterprises

In the era of big data and rapid digital transformation, AI-powered Real-Time Data Anomaly Detection has evolved from a specialist tool into a necessity for modern enterprises. It allows organizations to identify unusual patterns or outliers in large incoming data streams as they arrive, protecting operational integrity and enabling proactive decision-making. With it, businesses can catch issues ranging from cybersecurity threats to system failures early, reducing risk and improving performance in dynamic environments.

Introduction: The Imperative of Real-Time Anomaly Detection

The relentless influx of data generated across diverse sectors, from financial transactions and industrial IoT to customer interactions and network logs, presents both immense opportunities and significant challenges. For organizations striving for operational excellence and robust security, the ability to perform Real-Time Data Anomaly Detection is no longer a competitive advantage but a foundational requirement. Traditional statistical methods and rule-based systems, while useful, often buckle under the sheer volume, velocity, and variety of modern data, proving too slow or too rigid to catch subtle, evolving anomalies. This article examines how AI, particularly machine learning and deep learning, transforms anomaly identification: its core architectural components, its business implications, and where it outperforms conventional approaches. AI-driven real-time anomaly detection is typically built on a Streaming Analytics Platform, and it relies on efficient stream processing and unsupervised machine learning models, backed by continuous data quality monitoring and alerting policies that support robust data governance.

Core Breakdown: Architecture and Components of AI-Driven Anomaly Detection

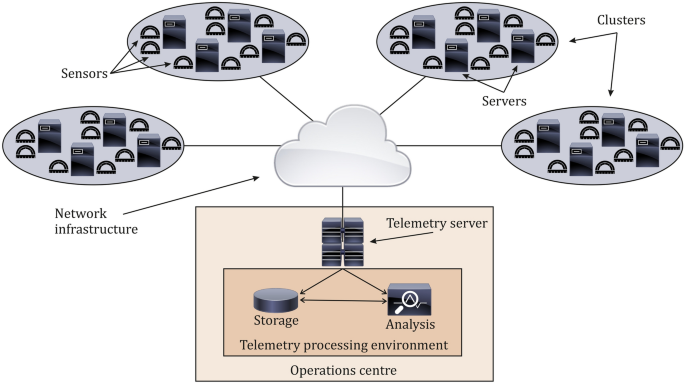

The architectural backbone of an AI-powered Real-Time Data Anomaly Detection system is designed for high-throughput, low-latency processing and intelligent pattern recognition. At its heart lies a sophisticated Streaming Analytics Platform, where data is ingested and processed as it arrives, rather than in batches. This paradigm is critical for genuine real-time capabilities, allowing organizations to act within milliseconds or seconds of an anomaly occurring.
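To make the stream-first idea concrete, here is a minimal Python sketch that handles one record at a time and keeps only a small, bounded window in memory. The function name `rolling_features`, the window size, and the sample readings are illustrative assumptions rather than part of any particular platform; in production this kind of logic would typically run inside a streaming engine or a message consumer.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Dict, Iterable, Iterator


def rolling_features(values: Iterable[float], window: int = 5) -> Iterator[Dict[str, float]]:
    """Yield per-event features computed over the last `window` values."""
    recent = deque(maxlen=window)  # bounded state: the oldest value drops out automatically
    for value in values:
        previous = recent[-1] if recent else None
        recent.append(value)
        yield {
            "value": value,
            "window_mean": mean(recent),
            "window_std": pstdev(recent),  # 0.0 while the window holds a single value
            "rate_of_change": 0.0 if previous is None else value - previous,
        }


if __name__ == "__main__":
    readings = [10.0, 10.2, 9.9, 10.1, 25.0]  # made-up sensor readings
    for features in rolling_features(readings, window=3):
        print(features)
```

Because the generator never looks at more than `window` values, memory use stays constant no matter how long the stream runs, which is the property that separates this style from batch processing.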

- Data Ingestion and Stream Processing: Raw data from various sources (e.g., IoT sensors, network logs, financial transactions, user behavior metrics) is funneled into high-performance streaming engines. Technologies like Apache Kafka, Apache Flink, or Amazon Kinesis are typical choices, ensuring data arrives with minimal delay and is immediately available for analysis. Initial preprocessing steps, such as data cleansing, normalization, aggregation, and feature extraction, happen in real-time to prepare the data for the anomaly detection models.

- Feature Engineering for Anomaly Detection: This critical phase transforms raw data into a set of meaningful features that AI models can interpret effectively. For time-series data, this might involve extracting statistical features (e.g., mean, variance, standard deviation over a sliding window), temporal features (e.g., lagged values, moving averages, rate of change), or even frequency-domain features (using techniques like Fast Fourier Transform to identify periodic patterns). The quality and relevance of these features significantly impact the model’s ability to discern anomalies, as well-engineered features can highlight deviations from normal behavior. Automated feature engineering techniques are also emerging to discover complex relationships autonomously.

- Unsupervised Machine Learning Models: Because anomalies are rare by definition and often absent from training data, unsupervised learning is the cornerstone of these systems. These models learn the “normal” patterns of the data without needing explicit labels for anomalies. Common models include:

  - Autoencoders: Neural networks trained to reconstruct their input. Data points that are difficult to reconstruct (i.e., have high reconstruction errors) are flagged as potential anomalies, as they deviate from the learned normal patterns.

  - Isolation Forests: An ensemble tree-based algorithm that efficiently isolates anomalies by randomly selecting a feature and a split value. Anomalies, being “far” from normal instances, are easier to isolate and require fewer splits to be separated.

  - One-Class Support Vector Machine (OCSVM): This model learns a decision boundary that encapsulates the “normal” data points in a high-dimensional feature space, marking anything significantly outside this boundary as an anomaly.

  - Recurrent Neural Networks (RNNs), including LSTMs: Particularly effective for sequential or time-series data, these deep learning models learn temporal dependencies and context. They can predict the next data point in a sequence, and significant deviations from this prediction indicate an anomaly.

  - Clustering Algorithms: Although used less often for real-time detection, clustering can also surface outliers. DBSCAN labels points that fall outside every dense region as noise, and K-Means can flag points that lie unusually far from their nearest centroid.
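As a rough illustration of the isolation idea described above, the following from-scratch Python sketch builds random trees and scores points by how quickly they are isolated. It follows the commonly published Isolation Forest scoring formula, but it is a teaching sketch under simplifying assumptions (points as tuples of floats, hypothetical function names, untuned defaults), not a substitute for a maintained library implementation.

```python
import math
import random


def build_tree(points, depth, max_depth, rng):
    """Recursively split points on a random feature at a random value."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    feature = rng.randrange(len(points[0]))
    values = [p[feature] for p in points]
    low, high = min(values), max(values)
    if low == high:  # all values equal: this feature cannot split the node
        return {"size": len(points)}
    split = rng.uniform(low, high)
    left = [p for p in points if p[feature] < split]
    right = [p for p in points if p[feature] >= split]
    return {
        "feature": feature,
        "split": split,
        "left": build_tree(left, depth + 1, max_depth, rng),
        "right": build_tree(right, depth + 1, max_depth, rng),
    }


def average_path_length(n):
    """Average path length of an unsuccessful binary-search-tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649  # approximation of the harmonic number
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def path_length(point, node, depth=0):
    """Number of splits needed to reach a leaf, plus a correction for unsplit leaves."""
    if "feature" not in node:
        return depth + average_path_length(node["size"])
    branch = "left" if point[node["feature"]] < node["split"] else "right"
    return path_length(point, node[branch], depth + 1)


def anomaly_scores(train, queries, n_trees=100, sample_size=64, seed=0):
    """Return one score per query; values closer to 1 mean easier to isolate."""
    if len(train) < 2:
        raise ValueError("need at least two training points")
    rng = random.Random(seed)
    sample_size = min(sample_size, len(train))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [
        build_tree(rng.sample(train, sample_size), 0, max_depth, rng)
        for _ in range(n_trees)
    ]
    norm = average_path_length(sample_size)
    scores = []
    for query in queries:
        mean_depth = sum(path_length(query, tree) for tree in trees) / n_trees
        scores.append(2.0 ** (-mean_depth / norm))
    return scores


if __name__ == "__main__":
    data_rng = random.Random(1)
    normal = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(256)]  # synthetic data
    inlier_score, outlier_score = anomaly_scores(normal, [(0.0, 0.0), (8.0, 8.0)])
    print(f"inlier score:  {inlier_score:.3f}")
    print(f"outlier score: {outlier_score:.3f}")
```

The point far from the training cloud needs fewer splits to end up alone in a leaf, so its average path is shorter and its score is higher than that of the point in the dense center.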

- Model Deployment and Management (MLOps): Once trained and validated, models are deployed to process incoming data streams in real time. This relies on MLOps (Machine Learning Operations) practices, including continuous integration/continuous deployment (CI/CD) for models, to keep them reliable, scalable, and maintainable. Regular monitoring of model performance and automated retraining mechanisms are crucial to adapt to evolving data patterns.

- Alerting and Action: A key data governance feature is robust Data Quality Monitoring and Alerting Policies. Upon detecting an anomaly, the system triggers alerts through customizable dashboards, email, SMS, or integration with incident management platforms (e.g., PagerDuty, Slack). Contextual information about the anomaly (e.g., time, location, severity, affected metrics, historical patterns) is provided to enable rapid investigation and response from human operators or automated remediation systems.
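The alerting step at the end of the pipeline above can be sketched as a small payload builder plus a dispatcher. Everything named here (`AnomalyAlert`, `dispatch`, the severity cut-offs, the field names) is a hypothetical illustration; a real integration would use the API of whichever incident management or messaging tool is in place, and each channel is represented only as a plain callable.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Callable, Iterable


@dataclass
class AnomalyAlert:
    metric: str    # which metric deviated
    value: float   # observed value
    score: float   # model output, assumed here to lie in [0, 1]
    source: str    # host, device, or account that produced the event
    detected_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    @property
    def severity(self) -> str:
        # Illustrative cut-offs only; real thresholds need tuning per metric
        if self.score >= 0.9:
            return "critical"
        if self.score >= 0.7:
            return "warning"
        return "info"


def dispatch(alert: AnomalyAlert, channels: Iterable[Callable[[str], None]]) -> str:
    """Serialize the alert with its context and hand it to every channel."""
    payload = json.dumps({**asdict(alert), "severity": alert.severity})
    for send in channels:
        send(payload)
    return payload


if __name__ == "__main__":
    alert = AnomalyAlert(metric="cpu_usage", value=97.5, score=0.93, source="host-1")
    dispatch(alert, [print])  # print stands in for an email, SMS, or chat integration
```

Keeping channels as interchangeable callables means a dashboard, a pager integration, and an automated remediation hook can all receive the same contextual payload.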

Challenges and Barriers to Adoption

Despite the immense potential, implementing and maintaining effective Real-Time Data Anomaly Detection systems comes with its share of challenges:

- Data Drift and Concept Drift: The distribution of incoming data can shift over time (data drift), or the relationship between the features and what counts as normal or anomalous can change (concept drift). Anomaly detection models must be adaptive and continuously retrained or updated to remain effective, which adds to operational complexity and requires robust MLOps pipelines.

- High False Positive Rates: Overly sensitive models can generate too many false positives, leading to “alert fatigue” where operators start ignoring warnings. Striking the right balance between sensitivity and specificity, often through careful tuning and feedback loops, is crucial to maintain trust and operational efficiency.

- Data Scarcity for Supervised Methods: While unsupervised methods are preferred for novel anomalies, specific known types of anomalies can benefit from supervised learning. However, collecting enough reliably labeled anomaly data (which by nature is rare) is often impractical and resource-intensive.

- Complexity of MLOps for Streaming: Deploying, monitoring, and updating machine learning models in a high-velocity, real-time streaming environment is inherently complex. It requires sophisticated infrastructure, stringent version control for models, robust performance monitoring, and automated retraining pipelines to handle model degradation.

- Computational Overhead: Processing vast volumes of data in real-time with sophisticated AI models demands significant computational resources and highly optimized infrastructure, which can be costly both in terms of hardware and specialized talent.

- Explainability and Interpretability: Many advanced deep learning models, while powerful, are “black boxes,” making it difficult to understand why a particular data point was flagged as anomalous. This lack of explainability can hinder investigation, complicate troubleshooting, and erode trust, particularly in regulated industries.
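One way to decide when retraining is due, given the drift challenge listed above, is to compare a recent window of a feature against a reference window. The sketch below uses the Population Stability Index (PSI) for that comparison. The function names, the bin count, and the 0.25 trigger are assumptions for illustration; 0.25 is a widely quoted rule of thumb, not a universal constant.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], recent: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of a single feature."""
    low, high = min(reference), max(reference)
    width = (high - low) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            index = int((v - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # out-of-range values go to the edge bins
        # A small floor avoids log(0) and division by zero for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p = proportions(reference)
    new_p = proportions(recent)
    return sum((n - r) * math.log(n / r) for r, n in zip(ref_p, new_p))


def needs_retraining(reference: Sequence[float], recent: Sequence[float],
                     threshold: float = 0.25) -> bool:
    """Flag drift when PSI exceeds the (assumed) threshold."""
    return psi(reference, recent) > threshold


if __name__ == "__main__":
    baseline = [i / 100 for i in range(1000)]   # synthetic reference window
    shifted = [v + 5 for v in baseline]         # same shape, shifted upward
    print("no drift:", needs_retraining(baseline, baseline))
    print("drift:   ", needs_retraining(baseline, shifted))
```

A check like this only covers shifts in a single feature's distribution. Concept drift, where the meaning of "normal" changes without the inputs moving, generally needs feedback from operators or labeled incidents to detect.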

Business Value and ROI

The return on investment for robust Real-Time Data Anomaly Detection systems is substantial and multi-faceted, driving significant value across various business functions:

- Enhanced Cybersecurity: Early detection of suspicious network activity, unusual login attempts, data exfiltration patterns, or malware behavior can prevent costly data breaches, intellectual property theft, and sophisticated cyberattacks, safeguarding critical assets and reputation.

- Fraud Prevention and Financial Integrity: In financial services, instant identification of fraudulent transactions, abnormal account behavior, or unusual trading patterns significantly reduces financial losses, protects customer trust, and ensures regulatory compliance.

- Operational Efficiency and Predictive Maintenance: For industrial IoT, manufacturing, and critical infrastructure, detecting anomalies in sensor data can predict equipment failures, enabling proactive maintenance, significantly reducing costly downtime, and extending asset lifecycles.

- Improved System Performance and Reliability: In IT systems, anomaly detection identifies performance bottlenecks, resource exhaustion, unusual application behavior, and infrastructure failures before they impact users or critical services. This proactive approach supports higher uptime and helps teams meet service level agreements (SLAs).

- Superior Customer Experience: Detecting anomalies in user behavior, service usage patterns, or application performance can flag potential issues affecting customer experience, allowing for proactive intervention and improved satisfaction, thereby reducing churn.

- Data Quality Assurance: By continuously monitoring incoming data streams, these systems act as a critical gatekeeper for data quality, ensuring the integrity of analytical insights and downstream AI applications, which depend heavily on reliable input.

- Regulatory Compliance and Risk Management: Consistent monitoring and reporting on data integrity, security events, and operational parameters helps organizations meet compliance requirements, reducing the risk of regulatory fines and adverse audit findings.

Comparative Insight: AI-Powered vs. Traditional Anomaly Detection

To truly appreciate the transformative power of AI-powered Real-Time Data Anomaly Detection, it’s essential to compare its capabilities against traditional data monitoring and anomaly detection methods. The distinctions highlight why modern enterprises are increasingly adopting AI for this critical function.

- Rule-Based Systems:

  - Traditional: These systems rely on predefined thresholds and rigid rules set by human experts (e.g., “if transaction amount > $10,000, flag” or “if CPU usage > 90% for 5 minutes, alert”). They are simple to implement for known, predictable patterns and require minimal computational resources.

  - AI-Powered: AI systems move beyond static rules. They learn intricate, often non-obvious patterns from vast datasets, identifying anomalies that don’t fit into simple “if-then” statements. They can detect subtle deviations, multi-dimensional anomalies, and evolving threat vectors that rules cannot capture. As underlying data patterns shift (concept drift), AI models can be adapted through retraining, which is often automated, whereas rule-based systems require constant manual updates and are brittle in dynamic environments.

- Statistical Process Control (SPC):

  - Traditional: Uses statistical methods like control charts (e.g., Shewhart charts, EWMA) to monitor process variations. Effective for stable processes and single-variable monitoring, identifying when a process deviates from its expected statistical behavior.

  - AI-Powered: AI can handle high-dimensional, multivariate data with complex interdependencies far beyond the capabilities of most SPC methods. It can learn non-linear relationships and detect anomalies in scenarios where traditional statistical assumptions (e.g., normality, independence) do not hold. AI offers a more nuanced, holistic understanding of system behavior, identifying subtle anomalies in complex data landscapes that might appear normal under univariate statistical checks.

- Human-Driven Monitoring and Analysis:

  - Traditional: Relies on analysts manually sifting through logs, dashboards, and reports to spot irregularities. This is inherently time-consuming, highly prone to human error, subjective, and completely unscalable for the unprecedented data volumes and velocities of modern digital environments.

  - AI-Powered: Automates the initial detection and triage, filtering out noise and highlighting critical events based on learned patterns. This frees human analysts to focus on investigation, root cause analysis, and response rather than tedious data sifting. It provides early warnings, transforming reactive monitoring into proactive threat hunting and operational management.

- Batch Processing Anomaly Detection:

  - Traditional: Anomalies are identified after data has been collected and processed in batches (e.g., end-of-day reports, weekly data dumps). This inherently means a significant delay between an event occurring and its detection.

  - AI-Powered: Operates on data streams, detecting anomalies in milliseconds or seconds as data arrives. This real-time capability is paramount for critical applications like fraud detection, cybersecurity threat hunting, predictive maintenance, and critical infrastructure monitoring, where immediate action can prevent massive financial losses, system failures, or catastrophic incidents.
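The claim that a point can look normal under univariate checks yet be anomalous jointly can be shown with a small example. The sketch below compares per-metric three-sigma checks with the Mahalanobis distance over two correlated metrics. Note that Mahalanobis distance is a classical multivariate statistic rather than a learned AI model; it is used here only because it makes the multivariate effect easy to see. The data, function names, and the limit of 3.0 are illustrative assumptions.

```python
import math
from statistics import mean


def fit(points):
    """Estimate means, variances, and covariance for two metrics."""
    xs, ys = zip(*points)
    mx, my = mean(xs), mean(ys)
    n = len(points)
    vx = sum((x - mx) ** 2 for x in xs) / n
    vy = sum((y - my) ** 2 for y in ys) / n
    cxy = sum((x - mx) * (y - my) for x, y in points) / n
    return mx, my, vx, vy, cxy


def univariate_flags(point, model, limit=3.0):
    """Check each metric on its own against a sigma limit."""
    mx, my, vx, vy, _ = model
    z_x = abs(point[0] - mx) / math.sqrt(vx)
    z_y = abs(point[1] - my) / math.sqrt(vy)
    return z_x > limit, z_y > limit


def mahalanobis(point, model):
    """Distance from the mean that accounts for correlation between the metrics."""
    mx, my, vx, vy, cxy = model
    dx, dy = point[0] - mx, point[1] - my
    det = vx * vy - cxy ** 2  # determinant of the 2x2 covariance matrix
    return math.sqrt((vy * dx * dx - 2 * cxy * dx * dy + vx * dy * dy) / det)


if __name__ == "__main__":
    # Synthetic history: the second metric tracks the first within +/- 1
    history = [(float(i), float(i) + (1.0 if i % 2 == 0 else -1.0)) for i in range(100)]
    model = fit(history)
    suspect = (80.0, 20.0)  # each value is ordinary alone, but the pair breaks the pattern
    print("univariate flags:", univariate_flags(suspect, model))
    print("mahalanobis distance:", round(mahalanobis(suspect, model), 1))
```

Each coordinate of the suspect point sits well within its own historical range, so neither single-metric rule fires, while the joint distance is far above the limit because the two metrics have never diverged like this before.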

In essence, while traditional methods offer a baseline level of detection for known, simple anomalies, AI-powered systems provide an adaptive, scalable, and intelligent approach capable of uncovering unknown, complex, and evolving threats in the high-velocity data streams of today’s digital landscape. Stream processing technologies such as Apache Flink and Amazon Kinesis, together with monitoring and analytics products such as Datadog and Anodot, reflect this shift toward real-time, increasingly AI-assisted anomaly detection that goes beyond what traditional methods could achieve.

World2Data Verdict: Proactive Vigilance through AI

World2Data.com asserts that the future of enterprise resilience and operational intelligence is irrevocably tied to the sophistication of an organization's Real-Time Data Anomaly Detection capabilities. Organizations must move beyond rudimentary threshold-based alerts and embrace a comprehensive Streaming Analytics Platform integrating advanced unsupervised AI models. Our recommendation is clear: prioritize investments in adaptive, explainable AI systems that not only detect anomalies but also provide contextual insights, thereby fostering a culture of proactive data vigilance. The strategic adoption of these technologies will not merely mitigate risks but also unlock significant competitive advantages, enabling businesses to navigate an increasingly complex digital landscape with greater agility and security. The ongoing evolution of AI will continue to redefine the landscape of Real-Time Data Anomaly Detection, making our digital world safer and more efficient.