Real-Time Platform: Powering Instant Insights for Businesses

In today’s hyper-connected business landscape, the ability to act on information at the moment it emerges is no longer a luxury but a fundamental necessity. A Real-Time Platform stands as the technological backbone enabling organizations to harness streams of constantly flowing data, transforming raw input into immediate, actionable intelligence that drives smarter decisions and superior outcomes. This advanced infrastructure facilitates instantaneous data processing, offering businesses an unparalleled edge in speed and responsiveness, fundamentally reshaping operational paradigms and customer interactions.

Introduction: The Imperative of Instantaneous Data

The digital age has ushered in an era where data is generated at an unprecedented pace, from IoT sensors and social media feeds to transactional systems and user interactions. Traditional data processing methods, often characterized by batch processing and delayed reporting, are increasingly inadequate for organizations seeking to maintain competitiveness and deliver superior customer experiences. The objective of this deep dive is to explore how a robust Real-Time Platform addresses these modern demands, transforming raw, high-velocity data streams into actionable insights almost instantaneously. By adopting a Real-Time Platform, businesses can move beyond reactive strategies to embrace proactive decision-making, leveraging every emerging data point to optimize operations, personalize services, and mitigate risks in real time. This article will dissect the core architecture, capabilities, and strategic advantages of such platforms, highlighting their transformative impact across diverse industries.

Core Breakdown: Architecture, Capabilities, and Strategic Value

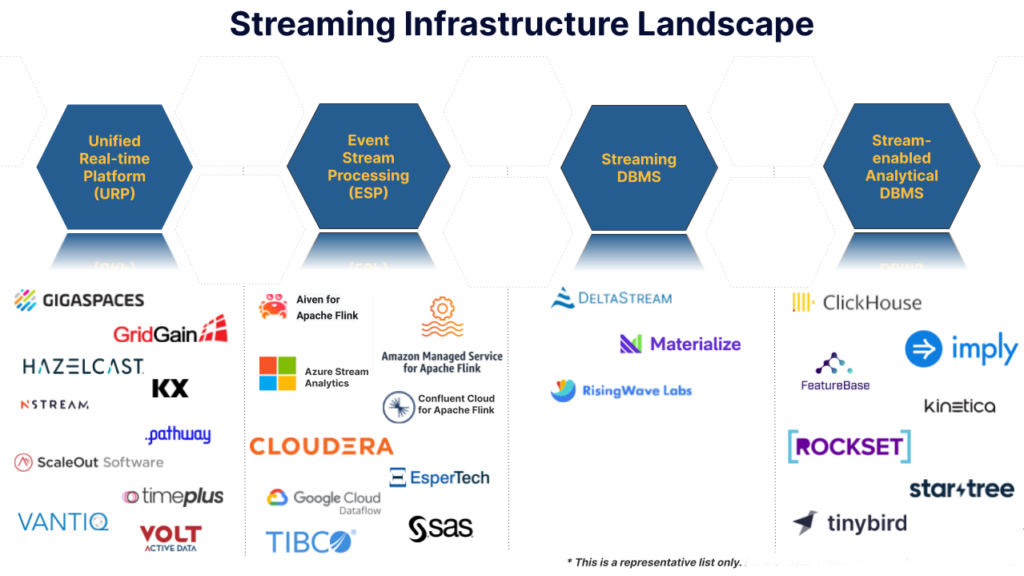

At its heart, a Real-Time Platform is an integrated suite of technologies designed to ingest, process, analyze, and act upon data as it is generated. It fundamentally shifts the paradigm from analyzing historical data to reacting to current events, making it an indispensable tool for modern enterprises. This category of platforms encompasses a variety of solutions, including Real-time Data Streaming Platforms, Real-time Analytics Platforms, and advanced Operational Data Stores, all working in concert to minimize data latency and maximize operational agility.

Underlying Technologies and Architecture

The robust capabilities of a Real-Time Platform are built upon a foundation of sophisticated technologies and an event-driven architecture. This architecture enables components to react to events (data changes, user actions) as they occur, providing the agility necessary for continuous data flow. Key technical pillars include:

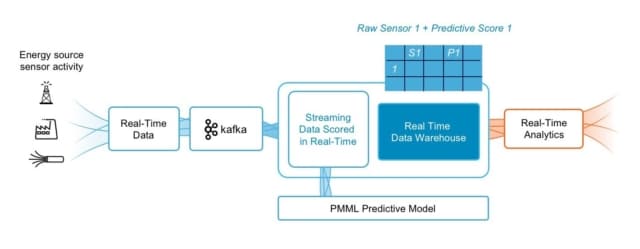

- Stream processing engines: Essential for the continuous querying, aggregation, and transformation of data in motion. Solutions like Apache Flink and Kafka Streams (the stream processing library that ships with Apache Kafka, a leading distributed messaging system) are pivotal. These engines facilitate complex event processing (CEP) and real-time analytics, identifying patterns and deriving insights from unbounded data streams.

- In-memory computing: For ultra-low latency operations, in-memory computing solutions store frequently accessed data directly in RAM. This drastically reduces retrieval times and accelerates analytical queries, providing near-instantaneous access to critical data.

- Distributed messaging queues: Platforms like Apache Kafka serve as the central nervous system for real-time data ingestion and distribution. They reliably capture, store, and deliver event streams, decoupling data producers from consumers and ensuring scalability and fault tolerance.

- Low-latency data storage: Specialized databases and caches are designed for high-speed writes and reads, ensuring that processed insights and operational data are immediately available. This includes key-value stores, time-series databases, and in-memory data grids optimized for rapid data ingestion and querying, forming the core of an Operational Data Store.
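
As a rough illustration of what a stream processing engine does with data in motion, the sketch below groups events into fixed (tumbling) time windows and emits a per-key count whenever a window closes. It is plain Python, not Flink or Kafka Streams code; the `Event` type, the `tumbling_window_counts` function, and the sensor names are hypothetical, and the sketch assumes events arrive in timestamp order.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Event:
    """Hypothetical event: a key (e.g. a sensor id) and an epoch timestamp in seconds."""
    key: str
    timestamp: float


def tumbling_window_counts(
    events: Iterable[Event], window_seconds: float
) -> Iterator[Tuple[float, dict]]:
    """Yield (window_start, counts_per_key) each time a window closes.

    Assumes non-decreasing timestamps; real engines handle out-of-order
    data with watermarks, which this sketch omits.
    """
    current_start = None
    counts = Counter()
    for event in events:
        # Align the event to the start of its fixed-size window.
        start = (event.timestamp // window_seconds) * window_seconds
        if current_start is None:
            current_start = start
        elif start != current_start:
            # A later window began: emit the finished one and reset.
            yield current_start, dict(counts)
            current_start, counts = start, Counter()
        counts[event.key] += 1
    if current_start is not None:
        # Flush the last window once the (finite) input ends.
        yield current_start, dict(counts)


stream = [
    Event("sensor-a", 0.5),
    Event("sensor-b", 3.0),
    Event("sensor-a", 9.9),
    Event("sensor-a", 10.1),
    Event("sensor-b", 25.0),
]
windows = list(tumbling_window_counts(stream, window_seconds=10))
for window_start, per_key in windows:
    print(window_start, per_key)
```

Results are emitted as soon as a window closes rather than after the whole dataset has been collected, which is the essential difference from a batch job. Windows that receive no events are simply not emitted in this sketch.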

Key Data Governance Features for Real-Time Environments

In a world of constant data flow, robust governance is not just a best practice but a critical necessity for maintaining trust, compliance, and data integrity. A sophisticated Real-Time Platform integrates several critical features:

- Real-time data quality monitoring: Systems continuously validate incoming data streams for accuracy, completeness, and consistency, flagging or correcting anomalies before they propagate. This ensures that decisions are based on reliable information.

- Fine-grained access control: Implementing both Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC) ensures that sensitive real-time data is only accessible to authorized personnel or applications. This is crucial for maintaining data security, privacy, and compliance with regulations.

- Stream data lineage: Tracking the origin, transformations, and destinations of data points as they move through the platform provides complete transparency and auditability. This is essential for debugging issues, validating data pipelines, and demonstrating regulatory compliance.

- Audit logging for data events: Comprehensive, immutable logs of who accessed what data, when, and how, along with any modifications, provide a vital record. This feature is indispensable for security audits, forensic analysis, and demonstrating accountability.
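
To make these governance features more concrete, here is a minimal sketch that combines a data quality check, a combined RBAC/ABAC access decision, and an audit trail. Everything in it is an assumption made for illustration: the field names, the `admin` and `analyst` roles, the region-based rule, and the in-memory `audit_log` list stand in for whatever policy engine and audit store a real platform would use.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Principal:
    """Hypothetical caller: a role (for RBAC) plus a region attribute (for ABAC)."""
    name: str
    role: str
    region: str


def validate_record(record: dict) -> List[str]:
    """Return data quality problems for one record; an empty list means it passes."""
    problems = []
    for field in ("order_id", "amount", "region"):
        if record.get(field) is None:
            problems.append(f"missing {field}")
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        problems.append("negative amount")
    return problems


def can_read(principal: Principal, record: dict) -> bool:
    """Admins may read everything (RBAC); analysts only their own region (ABAC)."""
    if principal.role == "admin":
        return True
    if principal.role == "analyst":
        return principal.region == record.get("region")
    return False


# Append-only list standing in for an audit store; a real one must be tamper-evident.
audit_log: List[dict] = []


def read_record(principal: Principal, record: dict) -> Optional[dict]:
    """Return the record if the caller is authorized, logging every attempt."""
    allowed = can_read(principal, record)
    audit_log.append(
        {"who": principal.name, "order_id": record.get("order_id"), "allowed": allowed}
    )
    return record if allowed else None


good = {"order_id": "o-1", "amount": 42.0, "region": "eu"}
bad = {"order_id": "o-2", "amount": -5.0, "region": None}
alice = Principal("alice", "analyst", "eu")
bob = Principal("bob", "analyst", "us")
```

Calling `validate_record(bad)` reports both the missing region and the negative amount, so the record could be quarantined before it propagates. `read_record(bob, good)` returns `None` because the analyst's region does not match, and both allowed and denied attempts land in `audit_log`. A production audit entry would also carry a timestamp and the access method.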

Primary AI/ML Integration

The true power of a Real-Time Platform often lies in its seamless integration with artificial intelligence and machine learning capabilities, transforming raw data into predictive and prescriptive intelligence:

- Real-time ML inference on data streams: Trained machine learning models can score incoming data points instantly, enabling immediate predictions or classifications. This is vital for applications like instantaneous fraud detection, personalized product recommendations, or dynamic pricing adjustments.

- Anomaly detection with ML: Machine learning algorithms continuously monitor data streams to identify unusual patterns or outliers. This capability signals potential issues such as system failures, security breaches, manufacturing defects, or sudden changes in customer behavior, allowing for proactive intervention.

- Stream-based model training: Some advanced platforms support continuous learning, where ML models are updated and refined using new data streams as they arrive. This ensures models remain relevant and accurate by adapting to evolving data distributions and business environments.

- Integration with real-time feature stores: These specialized data repositories provide consistently defined, low-latency features for ML models. By centralizing and serving features in real time, they ensure that models performing real-time inference have access to the most current and relevant data attributes, enhancing prediction accuracy and consistency.
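
The sketch below illustrates the scoring-then-updating loop behind real-time anomaly detection. It deliberately uses a simple running z-score (maintained with Welford's online algorithm) as a stand-in for a trained ML model; the `RunningZScoreDetector` class, its `threshold` and `warmup` parameters, and the sample readings are assumptions chosen for the example.

```python
import math


class RunningZScoreDetector:
    """Flags values far from the running mean, using Welford's online algorithm."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold  # z-score above which a value is flagged
        self.warmup = warmup        # observations required before flagging starts
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def score(self, value: float) -> bool:
        """Return True if `value` looks anomalous, then fold it into the statistics."""
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update: adjust mean and squared deviations in O(1) per event.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return is_anomaly


readings = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 50.0, 10.1]
detector = RunningZScoreDetector()
flags = [detector.score(reading) for reading in readings]
print(flags)
```

Each reading is scored the moment it arrives and then used to update the statistics, which mirrors inference and continuous learning on the same stream. One design choice to be aware of: this sketch also learns from flagged values, so a single large outlier widens the tolerance for the readings that follow; a real deployment might exclude or down-weight flagged points.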

Challenges and Barriers to Adoption

While the transformative benefits of a Real-Time Platform are significant, implementing and managing such sophisticated systems comes with its own set of challenges that organizations must proactively address:

- Complexity and Scalability: Designing, deploying, and maintaining highly available, fault-tolerant, and scalable real-time systems requires deep technical expertise in distributed systems, stream processing, and event-driven architectures. Managing extremely high data volumes and velocities can strain infrastructure and necessitate careful resource provisioning and optimization.

- Data Consistency and Reliability: Ensuring data consistency across distributed systems, especially in the event of failures, is a non-trivial task. Achieving guarantees like “exactly-once processing” (where the effect of each data record is reflected in the results exactly once, even when failures force retries or redelivery) is critical for accuracy but notoriously complex to implement.

- Cost of Infrastructure: The demands of in-memory computing, high-performance storage, and continuous processing can lead to substantial infrastructure costs, particularly for large-scale deployments that require significant computational resources and specialized hardware.

- Talent Gap: There’s a significant global demand for data engineers and architects proficient in stream processing, distributed systems, real-time analytics, and MLOps, making recruitment and retention of skilled personnel a considerable challenge for many organizations.

- Integration Hurdles: Integrating a new real-time system with existing legacy applications, diverse data sources, and established data silos can be complex and time-consuming. It often requires robust API management, data synchronization strategies, and careful planning to avoid disrupting ongoing operations.
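
One common way to approximate the exactly-once guarantee mentioned above is to accept at-least-once delivery and make the consumer idempotent, so that a redelivered message has no additional effect. The following is a minimal sketch of that idea, not how any particular engine implements it; the `IdempotentLedger` class, the message ids, and the account names are hypothetical.

```python
from typing import Dict, Set


class IdempotentLedger:
    """Applies each message's effect once, even if the message is redelivered."""

    def __init__(self) -> None:
        self.balances: Dict[str, float] = {}
        self.seen_ids: Set[str] = set()

    def apply(self, message_id: str, account: str, amount: float) -> bool:
        """Return True if the message changed state, False if it was a duplicate."""
        if message_id in self.seen_ids:
            return False
        # In a real system the balance update and the id record must be
        # committed atomically (e.g. in one transaction); otherwise a crash
        # between the two steps can still double-apply or drop a message.
        self.balances[account] = self.balances.get(account, 0.0) + amount
        self.seen_ids.add(message_id)
        return True


# "m1" is delivered twice, as can happen after a consumer restart or retry.
deliveries = [
    ("m1", "acct-1", 100.0),
    ("m2", "acct-1", -30.0),
    ("m1", "acct-1", 100.0),
]
ledger = IdempotentLedger()
results = [ledger.apply(*delivery) for delivery in deliveries]
print(results, ledger.balances)
```

The duplicate delivery of `m1` is ignored, so the balance reflects each message once. The comment inside `apply` points at the hard part: keeping the deduplication record and the state change consistent across failures is where most of the implementation complexity lies.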

Business Value and ROI

Despite the inherent challenges, the Return on Investment (ROI) from a well-planned and effectively implemented Real-Time Platform is substantial and multifaceted, directly impacting a business’s bottom line and strategic position:

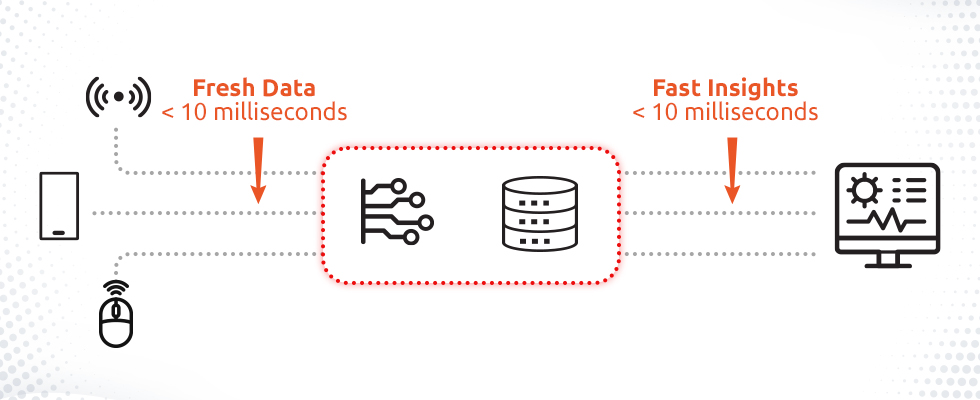

- Instant Decision-Making: Automated decisions can be made within milliseconds to seconds of an event occurring, rather than hours or days later. This agility allows organizations to react to market shifts, customer needs, and operational issues with unprecedented speed, seizing opportunities before competitors.

- Enhanced Customer Experience: Real-time personalization of offers, instantaneous fraud detection, immediate customer service responses, and dynamic content delivery lead to significantly higher customer satisfaction and loyalty.

- Operational Efficiency and Optimization: Continuous monitoring of manufacturing lines, logistics networks, IT infrastructure, or energy grids in real time enables predictive maintenance, dynamic resource optimization, and rapid issue resolution, thereby reducing downtime, waste, and operational costs.

- Competitive Advantage: The unparalleled ability to leverage the freshest data for insights and immediate actions provides a significant and sustainable edge over competitors who are still relying on batch processing for their analytical needs.

- Fraud Detection and Risk Management: Financial institutions and e-commerce platforms can detect and prevent fraudulent transactions as they happen, minimizing financial losses, safeguarding customer assets, and enhancing overall security postures.

- New Revenue Opportunities: Real-time data and insights can power the creation of innovative new products and services, unlock dynamic pricing models, and facilitate novel business models, thereby generating new revenue streams.

Comparative Insight: Real-Time Platform vs. Traditional Data Lakes/Warehouses

Understanding the unique value and operational domain of a Real-Time Platform often benefits from a clear comparison with its predecessors: the traditional data lake and data warehouse. While these architectures have served businesses well for many years (data warehouses for decades, data lakes more recently), their fundamental design philosophies differ significantly from real-time systems, particularly concerning latency, processing paradigms, and primary use cases.

Traditional Data Warehouses

Data warehouses are meticulously designed for structured, historical data analysis. They typically ingest data from various operational systems through Extract, Transform, Load (ETL) processes, which are often batch-oriented and run on a scheduled basis (e.g., daily, weekly, or monthly). This process cleanses, transforms, and loads data into a highly optimized relational database for reporting and business intelligence. Their strengths lie in consistent, high-quality historical reporting, complex analytical queries over vast, historical datasets, and providing a consolidated view of past business performance. However, their inherent latency—often measured in hours or days—makes them fundamentally unsuitable for use cases requiring immediate action or instantaneous insights.

Traditional Data Lakes

Data lakes emerged to handle the explosion of diverse data types – structured, semi-structured, and unstructured – at petabyte scale. They store raw data in its native format, typically on inexpensive storage like HDFS or cloud object storage (e.g., Amazon S3, Azure Data Lake Storage). The “schema-on-read” approach offers immense flexibility, allowing data to be processed and analyzed as needed, often using big data frameworks like Apache Spark for batch processing or ad-hoc queries. Data lakes are excellent for exploratory data science, machine learning model training on vast datasets, and storing historical archives of all enterprise data. However, similar to data warehouses, their primary processing paradigm is still largely batch-oriented. While a data lake can serve as a valuable source or sink for stream processing, it is not, by itself, a Real-time Analytics Platform.
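
The “schema-on-read” idea can be shown in a few lines: raw records are stored untouched, and structure is imposed only when a particular analysis reads them. This is a minimal sketch under assumed conditions; the JSON lines, the field names, and the `read_purchases` function are invented for illustration and do not represent any specific data lake tooling.

```python
import json
from typing import Iterable, Iterator

# Raw lines as they might sit in object storage: mixed shapes, one malformed.
RAW_LINES = [
    '{"user": "u1", "event": "click", "ts": 1}',
    '{"user": "u2", "event": "purchase", "amount": 19.99, "ts": 2}',
    "not valid json",
    '{"user": "u1", "event": "purchase", "amount": 5.0, "ts": 3}',
]


def read_purchases(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Apply a 'purchase' schema at read time, skipping lines that do not fit it."""
    for line in raw_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # the raw store accepted it; this reader ignores it
        if not isinstance(record, dict):
            continue
        if record.get("event") == "purchase" and "user" in record and "amount" in record:
            yield {"user": str(record["user"]), "amount": float(record["amount"])}


purchases = list(read_purchases(RAW_LINES))
print(purchases)
```

Nothing was rejected at write time; a different reader could apply a different schema to the same raw lines, for example to count clicks. That flexibility is what makes the approach attractive for exploration, and it is also why validation effort shifts to every consumer.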

The Real-Time Platform Distinction

In stark contrast, a Real-Time Platform is purpose-built for velocity and immediacy. Its core distinction lies in processing data in motion rather than data at rest. Key differentiators include:

- Latency: Real-Time Platforms reduce the time from data ingestion to insight delivery to milliseconds or seconds, depending on the workload, enabling near-instantaneous reactions. Traditional batch-oriented systems typically operate with latencies of hours or days.

- Processing Paradigm: A Real-Time Platform employs continuous stream processing engines, handling unbounded streams of data as they arrive. Data warehouses and data lakes typically process bounded datasets in discrete batches.

- Primary Use Cases: Real-Time Platforms excel in operational decision-making, fraud detection, real-time personalization, IoT monitoring, anomaly detection, and predictive maintenance. Traditional systems are best suited for historical reporting, strategic analysis, and large-scale data science research.

- Data Flow: A Real-Time Platform is characterized by an event-driven architecture and continuous data flow, responding to each new event as it arrives. Traditional systems rely on scheduled, periodic data movements.

- Technology Stack: A Real-Time Platform leans heavily on event-driven architecture, stream processing engines, distributed messaging queues, and in-memory computing, alongside specialized low-latency data storage. Data warehouses rely on robust relational databases, while data lakes leverage distributed file systems or cloud object storage together with batch processing engines.
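
The difference in processing paradigm can be seen in a small example that computes the same metric both ways. This is only a sketch: the `batch_average` and `streaming_average` functions and the order values are hypothetical, and a real platform would run the streaming logic inside a stream processing engine over an unbounded input.

```python
from typing import Iterable, Iterator, List


def batch_average(values: List[float]) -> float:
    """Batch style: wait for the whole bounded dataset, then compute once."""
    return sum(values) / len(values)


def streaming_average(values: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit an updated result after every event."""
    count = 0
    mean = 0.0
    for value in values:
        count += 1
        # Incremental update; no need to keep earlier values in memory.
        mean += (value - mean) / count
        yield mean


orders = [20.0, 40.0, 30.0, 10.0]
running = list(streaming_average(orders))
print(running)                # one up-to-date answer per event
print(batch_average(orders))  # one answer, available only at the end
```

Both approaches agree once all four orders have been seen, but the streaming version had a usable answer after the first event and needed only constant memory. The batch version could not start until the dataset was complete.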

It’s important to note that these systems are not mutually exclusive but often complementary within a modern data ecosystem. Many forward-thinking data architectures adopt a hybrid approach, using a Real-Time Data Streaming Platform for immediate operational insights and feeding processed, historical data into data warehouses or data lakes for long-term storage, comprehensive strategic analysis, and large-scale AI/ML model training. This integrated strategy allows organizations to leverage the best of both worlds, achieving both instant agility and deep historical understanding.

World2Data Verdict: The Future is Now for Real-Time Agility

The imperative for instantaneous insights has unequivocally cemented the Real-Time Platform as a cornerstone of modern data strategy. For businesses striving to thrive in an era defined by rapid change and fierce competition, delaying adoption is no longer a viable option. World2Data.com asserts that organizations must prioritize the development or acquisition of a robust Real-Time Platform to unlock unparalleled operational efficiency, foster genuine customer intimacy, and maintain a decisive competitive edge. The future of business lies in proactive, data-driven action, powered by the ability to perceive, process, and respond to events the moment they unfold. Investing in this critical technology is not merely about staying current; it’s about pioneering the next wave of business innovation. Adopting established technologies such as Apache Kafka, Apache Flink, Amazon Kinesis, Google Cloud Dataflow, Azure Stream Analytics, Confluent Platform, Rockset, or Tinybird is increasingly an operational necessity rather than an optional strategic choice for anyone aiming to power instant insights for their business.